Published: January 2026 | aiseojournal.net

The World’s First Comprehensive AI Regulation Is Now Enforceable, and Search Engines Are in the Crosshairs

On December 22, 2025, Finland’s President approved national AI Act supervision laws, making Finland the first EU member state with full enforcement powers under the European Union’s Artificial Intelligence Act, effective January 1, 2026. On August 2, 2026, roughly seven months after this report’s publication, most remaining obligations of the world’s first comprehensive AI regulation become applicable to organizations deploying AI systems in European markets.

For search engines, the stakes couldn’t be higher. Google Search and Bing are already designated as “Very Large Online Search Engines” (VLOSEs) under the Digital Services Act, and their AI-powered features now also fall under AI Act requirements covering transparency, risk assessment, and human oversight.

The penalties for non-compliance are severe: up to €35 million or 7% of global annual revenue, whichever is higher. For Google’s parent Alphabet (2023 revenue: about $307 billion), 7% comes to roughly $21.5 billion. For Microsoft (fiscal 2024 revenue: about $245 billion), it comes to roughly $17.2 billion.

But this isn’t just about avoiding fines. The EU AI Act represents a fundamental shift in how AI systems must be built, deployed, and monitored—and search engines, with their AI Overviews, generative search features, and large language model integrations, sit squarely in the regulatory spotlight.

From the official EU announcement:

“The AI Act entered into force on 1 August 2024, and will be fully applicable 2 years later on 2 August 2026, with some exceptions: prohibited AI practices and AI literacy obligations entered into application from 2 February 2025; the governance rules and the obligations for GPAI models became applicable on 2 August 2025.”

This report breaks down what search engine operators, SEO professionals, and digital marketers need to know about compliance requirements, implementation timelines, and strategic implications.

The Complete Timeline: From Proposal to Enforcement

April 2021: The Beginning

The European Commission proposed the first EU artificial intelligence law, establishing a risk-based AI classification system that would eventually become the global standard.

June 2024: Official Adoption

The EU adopted the world’s first comprehensive rules on AI, creating a regulatory framework that would influence policy development across G7 countries, OECD members, and the United States.

August 1, 2024: Entry Into Force

The AI Act officially entered into force, beginning the 24-month countdown to full applicability. The European AI Office became operational, and member states began designating national enforcement authorities.

February 2, 2025: Prohibited AI Practices Banned

The first major enforcement deadline passed. AI systems posing “unacceptable risk” to fundamental rights, public safety, and democratic values became illegal across all 27 EU member states.

Prohibited practices include:

- Biometric categorization based on sensitive characteristics (race, religion, sexual orientation)

- Untargeted scraping of facial images from internet or CCTV

- Emotion recognition in workplaces and educational institutions

- Social scoring systems

- Manipulative AI systems exploiting vulnerabilities

- Certain predictive policing applications

Penalty for violation: Up to €35 million or 7% of global revenue.

Current status: Already enforceable. Organizations found using prohibited AI face immediate enforcement action.

August 2, 2025: GPAI Transparency Requirements Active

General-Purpose AI (GPAI) model providers (OpenAI, Google, Anthropic, Microsoft, Meta, etc.) became subject to comprehensive transparency and governance requirements.

From Greenberg Traurig legal analysis:

“Providers of GPAI models – such as large language or multimodal models – will be subject to a specific regulatory regime beginning August 2025. They will be required to maintain technical documentation that makes the model’s development, training, and evaluation traceable. In addition, transparency reports must be prepared that describe the capabilities, limitations, potential risks, and guidance for integrators.”

What was required:

- Technical documentation of model development

- Transparency reports (capabilities, limitations, risks)

- Published summary of training data (sources, preprocessing methods)

- Copyright compliance documentation

- EU governance structures fully operational

- National AI authorities activated

Major providers who signed the GPAI Code of Practice (August 2025):

- Microsoft

- Amazon

- OpenAI

- Anthropic

- Google

- 20+ other major AI providers

Meta publicly declined to sign the code.

November 19, 2025: Digital Omnibus Simplification Package

The European Commission proposed amendments to simplify AI Act implementation, including:

From Cooley legal analysis:

“The omnibus proposal centralises enforcement by granting the European Commission’s new AI Office exclusive supervisory authority over certain AI systems, including those based on general-purpose AI models and systems embedded in or constituting ‘very large online platforms’ (VLOPs) or ‘very large online search engines’ (VLOSEs) under the Digital Services Act.”

Key changes:

- Centralized enforcement under European AI Office for VLOPs/VLOSEs

- Extended compliance relief for Small Mid-Cap enterprises (250-3,000 employees)

- Potential 16-month delay for high-risk rules IF standards unavailable

- Backstop deadline: December 2027 (enforcement happens regardless)

Current status: Under legislative review. Feedback period open until January 20, 2026.

December 17, 2025: AI-Generated Content Marking Draft

The European Commission published the first draft of the Code of Practice on marking and labeling AI-generated content.

Requirements (final version June 2026, enforcement August 2026):

- AI-generated content must be clearly identified

- Machine-readable watermarks where technically feasible

- Transparency about AI involvement in content creation

- User disclosure when interacting with AI systems

December 22, 2025: Finland Activates Enforcement

Finland’s President approved national AI Act supervision laws, making Finland the first EU member state with full enforcement powers as of January 1, 2026.

The Finnish Transport and Communications Agency became the first active national enforcer, a signal that enforcement is no longer theoretical.

August 2, 2026: Full Enforcement (223 Days From December 22, 2025)

The critical deadline for high-risk AI systems. All compliance requirements become fully enforceable, with penalties up to €35 million or 7% of global revenue.

What must be compliant:

- All high-risk AI systems require conformity assessment

- Risk management systems documented

- Data governance protocols established

- Transparency obligations met

- Human oversight mechanisms implemented

- Accuracy, robustness, cybersecurity measures proven

Reality check from Axis Intelligence:

“Organizations starting today barely have enough time for August 2026. Conformity assessment alone takes 6-12 months. Do not delay.”

August 2027: Legacy System Backstop

Extended transition period ends for AI systems embedded into regulated products. Even organizations claiming delays must comply by this absolute deadline.

August 2030: Public Sector Legacy Systems

Final deadline for legacy AI systems in public sector applications. All government AI must be compliant by this date.
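The timeline above can be read as a simple lookup table. The following is a minimal Python sketch, not an official tool: it hard-codes the application dates listed in this section and reports which ones have already passed on a given day. The names `MILESTONES`, `obligations_in_force`, and `next_milestone` are illustrative, and the day of the month for the 2027 and 2030 entries is assumed to be the 2nd, since the timeline gives only month and year for those.

```python
from __future__ import annotations

from datetime import date

# Application dates as listed in the timeline above. The day for the 2027 and
# 2030 entries is an assumption (the 2nd, matching the other milestones).
MILESTONES = [
    (date(2025, 2, 2), "Prohibited AI practices banned"),
    (date(2025, 8, 2), "GPAI transparency and governance obligations"),
    (date(2026, 8, 2), "High-risk and transparency obligations (full enforcement)"),
    (date(2027, 8, 2), "AI embedded in regulated products (legacy backstop)"),
    (date(2030, 8, 2), "Public sector legacy systems"),
]


def obligations_in_force(on: date) -> list[str]:
    """Return the milestones whose application date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> tuple[date, str] | None:
    """Return the first milestone strictly after `on`, or None if all have passed."""
    upcoming = [(start, label) for start, label in MILESTONES if start > on]
    return min(upcoming) if upcoming else None


if __name__ == "__main__":
    today = date(2026, 1, 15)  # an example date in this report's publication month
    for label in obligations_in_force(today):
        print("in force:", label)
    print("next:", next_milestone(today))
```

Keeping the dates in one table means a change such as the delay proposed in the Digital Omnibus would only require editing a single entry.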

Risk-Based Classification: Where Do Search Engines Fit?

The EU AI Act uses a four-tier risk classification system. Understanding where your AI system falls determines your compliance obligations.

Tier 1: Unacceptable Risk (Prohibited)

Status: Banned since February 2, 2025

Penalty: €35 million or 7% of global revenue

Examples:

- Social scoring systems

- Real-time remote biometric identification in public spaces (with limited exceptions)

- Emotion recognition in workplaces/schools

- Exploitative AI targeting vulnerabilities

Search engine relevance: Most search engines don’t use prohibited practices, but emotion recognition in user interfaces or manipulative personalization algorithms could potentially cross the line.

Tier 2: High Risk

Status: Full compliance required August 2, 2026

Penalty: €15 million or 3% of global revenue

Examples from official guidelines:

- AI in critical infrastructure (transportation, utilities)

- AI in education (student assessment, admission decisions)

- AI in employment (recruitment screening, performance evaluation)

- AI in law enforcement

- AI in credit scoring and loan decisions

- Biometric identification systems

Search engine relevance: This is where it gets complicated.

AI-powered search features that MIGHT qualify as high-risk:

- Search result personalization affecting access to services

- Job search algorithms (employment-related)

- Educational content recommendations (education-related)

- Health information ranking (healthcare-related)

- Financial product comparisons (financial services-related)

From ModelOp’s EU AI Act analysis:

“High-risk AI systems include applications used in critical infrastructure, public services, and safety-related areas, such as medical diagnostics, financial services, and law enforcement AI tools.”

The gray area: General web search might be “limited risk,” but vertical search in regulated sectors (health, education, employment, finance) could trigger high-risk classification.

Tier 3: Limited Risk (Transparency Required)

Status: Transparency obligations apply from August 2, 2026

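Penalty: €15 million or 3% of global revenue (the Act places breaches of its transparency obligations in the same penalty band as high-risk non-compliance)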

Requirements:

- Inform users they’re interacting with AI

- Clearly label AI-generated content

- Disclose AI involvement in decision-making

Search engine relevance: This is where most search engines clearly fall.

AI features requiring transparency:

- AI Overviews (Google)

- Generative search answers (Bing, Perplexity)

- Chatbot interfaces (ChatGPT search, Claude, Gemini)

- AI-generated summaries

- AI-powered recommendations

What compliance looks like:

- “This answer was generated by AI” labels

- Clear attribution when AI creates or modifies content

- Disclosure of AI involvement in ranking/filtering

Current status: Many search engines already implement some labeling, but August 2026 makes it mandatory with specific technical requirements.

Tier 4: Minimal Risk (Voluntary Best Practices)

Status: No mandatory requirements

Examples: Spam filters, basic recommendation systems, AI in video games

Search engine relevance: Traditional keyword-based search falls here. But as soon as you add AI-generated answers, you move to “limited risk” at minimum.
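The four tiers above can be sketched as a first-pass triage function. This is a rough Python illustration of the reasoning in this section, not a legal classification: the `SearchFeature` fields, the `REGULATED_SECTORS` set, and the `classify` function are all hypothetical names, and a `HIGH` result here only means "might qualify as high-risk, needs legal review", mirroring the gray area described above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = 1
    HIGH = 2
    LIMITED = 3
    MINIMAL = 4


# Sectors this section flags as possible high-risk triggers for vertical search.
REGULATED_SECTORS = {"employment", "education", "health", "finance"}


@dataclass
class SearchFeature:
    name: str
    uses_prohibited_practice: bool = False  # e.g. social scoring
    sector: Optional[str] = None            # vertical the feature serves, if any
    generates_content: bool = False         # AI answers, summaries, chat


def classify(feature: SearchFeature) -> RiskTier:
    """Return the most severe tier a feature might fall under (triage only)."""
    if feature.uses_prohibited_practice:
        return RiskTier.UNACCEPTABLE
    if feature.sector in REGULATED_SECTORS:
        return RiskTier.HIGH
    if feature.generates_content:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    for f in [
        SearchFeature("keyword search"),
        SearchFeature("AI overview", generates_content=True),
        SearchFeature("job search ranking", sector="employment"),
    ]:
        print(f.name, "->", classify(f).name)
```

The checks run from most to least severe, so a feature that both generates content and serves a regulated sector is flagged at the higher tier.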

Search Engines as VLOSEs: The Digital Services Act Connection

Here’s where things get particularly interesting for Google, Bing, and other major platforms.

From the November 2025 Digital Omnibus proposal:

“The omnibus proposal centralises enforcement by granting the European Commission’s new AI Office exclusive supervisory authority over certain AI systems, including those based on general-purpose AI models and systems embedded in or constituting ‘very large online platforms’ (VLOPs) or ‘very large online search engines’ (VLOSEs) under the Digital Services Act.”

What Makes a Search Engine a VLOSE?

From the Digital Services Act (already in force since 2022):

Threshold: 45 million+ monthly active users in the EU

VLOSEs include:

- Google Search ✓

- Bing ✓

- Potentially: Perplexity, ChatGPT Search, Claude (if they hit threshold)

Why This Matters for AI Compliance

Unified enforcement: Under the Digital Omnibus proposal, VLOSEs with AI features would face direct supervision by the European Commission’s AI Office, not just national authorities.

Higher scrutiny: The proposal would also let the AI Office conduct pre-market conformity assessments for high-risk AI systems integrated into VLOSEs.

Interlocking regulations: VLOSEs must comply with both Digital Services Act obligations (content moderation, transparency, risk assessment) and AI Act requirements for their AI features.

From Axis Intelligence analysis:

“AI Office gains authority over AI systems integrated into Very Large Online Platforms (VLOPs) and Very Large Online Search Engines (VLOSEs) under Digital Services Act. Creates unified enforcement for platform-deployed AI rather than split jurisdiction between AI Office (model) and member states (system).”

Compliance Requirements for Search Engines: The Practical Checklist

For All Search Engines Using AI Features (Minimum: Limited Risk)

1. Transparency Obligations (August 2026)

✓ Clear AI labeling: Every AI-generated answer must be identified as AI-created

✓ User disclosure: Users must be informed when interacting with AI systems

✓ Content marking: AI-generated content requires machine-readable markers where technically feasible

✓ Source attribution: Cite sources used by AI to generate answers

Implementation example (Google AI Overviews):

- Label at top: “AI Overview – Generated by Google AI”

- Sources listed below answer

- Feedback mechanism (“Was this helpful?”)

- Clear distinction from organic results
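One way to picture the labeling items above is as a response payload that carries the visible label, a machine-readable flag, and the cited sources together. The sketch below is an assumption-heavy illustration in Python: the Code of Practice on marking is still in draft, so the field names (`provider_label`, `ai_generated`) and the `render_payload` helper are hypothetical and do not follow any published schema or watermarking standard.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Source:
    title: str
    url: str


@dataclass
class AIAnswer:
    text: str
    sources: list[Source] = field(default_factory=list)
    provider_label: str = "Generated by AI"  # visible label shown to the user
    ai_generated: bool = True                # machine-readable marker (hypothetical field)


def render_payload(answer: AIAnswer) -> str:
    """Serialize an answer with its disclosure label, marker, and sources."""
    if not answer.sources:
        # Source attribution is one of the checklist items, so refuse to
        # emit an AI answer that cites nothing.
        raise ValueError("AI answer must cite at least one source")
    return json.dumps(asdict(answer), indent=2)


if __name__ == "__main__":
    answer = AIAnswer(
        text="Example summary text.",
        sources=[Source("Example page", "https://example.com/page")],
    )
    print(render_payload(answer))
```

Bundling the label and sources into the same object as the answer text makes it harder for a front end to render the answer without its disclosure.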

2. Technical Documentation

✓ System description: Document how AI features work

✓ Training data summary: Disclose datasets used (domains, sources, preprocessing)

✓ Capabilities and limitations: Publish what AI can/cannot do

✓ Risk assessment: Document potential harms and mitigation strategies

3. Copyright Compliance

From July 2025 GPAI guidelines:

“The Template for the public summary of training content of GPAI models requires providers to give an overview of the data used to train their models. This includes the sources from which the data was obtained (comprising large datasets and top domain names).”

✓ Training data disclosure: List copyrighted material used

✓ Licensing documentation: Prove legal right to use training content

✓ Copyright holder notification: Systems for rights holders to object to use

Current status: This is already required for GPAI models (since August 2025). Search engines using these models must ensure their providers comply.

For High-Risk Search AI Features (If Applicable)

4. Risk Management System

✓ Risk identification: Document all potential harms

✓ Risk estimation: Assess likelihood and severity

✓ Risk evaluation: Determine acceptability

✓ Risk mitigation: Implement controls

✓ Continuous monitoring: Track risks over time
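The identify, estimate, evaluate, mitigate loop above maps naturally onto a small risk register. The following Python sketch assumes a 1 to 5 scale for likelihood and severity and an acceptability threshold of 6; neither comes from the AI Act, and the names `Risk`, `ACCEPTABLE_MAX`, and `needs_action` are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain), an assumed scale
    severity: int    # 1 (negligible) to 5 (critical), an assumed scale
    mitigation: str = ""

    @property
    def score(self) -> int:
        """Risk estimation: likelihood multiplied by severity."""
        return self.likelihood * self.severity


ACCEPTABLE_MAX = 6  # assumed threshold; scores above it require a mitigation


def needs_action(register: list[Risk]) -> list[Risk]:
    """Return unacceptable risks with no mitigation recorded, highest score first."""
    open_risks = [r for r in register if r.score > ACCEPTABLE_MAX and not r.mitigation]
    return sorted(open_risks, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    register = [
        Risk("AI summary misstates medical dosage", likelihood=2, severity=5),
        Risk("Source link is broken", likelihood=3, severity=1),
        Risk("Biased job ranking", likelihood=3, severity=4, mitigation="quarterly bias audit"),
    ]
    for risk in needs_action(register):
        print(risk.score, risk.description)
```

Re-running `needs_action` on a schedule is one simple way to approximate the continuous monitoring item in the list.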

5. Data Governance

✓ Data quality standards: Ensure training data is relevant, representative, free from errors

✓ Bias assessment: Test for and mitigate algorithmic bias

✓ Data provenance: Track data origins

✓ Privacy compliance: GDPR integration (already required separately)

6. Human Oversight

✓ Human-in-the-loop: Mechanisms for human intervention

✓ Override capability: Humans can reverse AI decisions

✓ Monitoring protocols: Regular human review of AI outputs

✓ Escalation procedures: Clear process when AI fails
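A human-in-the-loop gate with an override path could look roughly like the sketch below. It is a simplified Python illustration under stated assumptions: the confidence threshold, the list of sensitive topics, and the `OversightGate` class are all hypothetical, and a production system would add persistence, reviewer identity, and audit logging.

```python
from dataclasses import dataclass, field
from typing import Optional

SENSITIVE_TOPICS = {"health", "finance", "employment", "education"}  # assumed list
MIN_CONFIDENCE = 0.8  # assumed threshold below which a human must review


@dataclass
class Draft:
    query: str
    answer: str
    confidence: float
    topic: Optional[str] = None


@dataclass
class OversightGate:
    review_queue: list = field(default_factory=list)

    def route(self, draft: Draft) -> str:
        """Publish directly, or escalate to a human reviewer."""
        if draft.confidence < MIN_CONFIDENCE or draft.topic in SENSITIVE_TOPICS:
            self.review_queue.append(draft)
            return "escalated"
        return "published"

    def override(self, draft: Draft, corrected_answer: str) -> Draft:
        """A reviewer replaces the AI answer and clears the draft from the queue."""
        self.review_queue.remove(draft)
        return Draft(draft.query, corrected_answer, 1.0, draft.topic)


if __name__ == "__main__":
    gate = OversightGate()
    draft = Draft("max ibuprofen dose", "AI-written answer", 0.95, topic="health")
    print(gate.route(draft))
    fixed = gate.override(draft, "Reviewer-approved answer")
    print(fixed.answer, len(gate.review_queue))
```

Routing on topic as well as confidence reflects the point made earlier that health, finance, employment, and education queries carry more regulatory weight than general web search.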

7. Accuracy, Robustness, Cybersecurity

✓ Accuracy metrics: Measure and report AI precision

✓ Robustness testing: Verify performance across edge cases

✓ Adversarial testing: Test against manipulation attempts

✓ Security measures: Protect AI systems from unauthorized access

✓ Incident response: Plans for when AI fails

8. Conformity Assessment

✓ Third-party assessment: Independent evaluation of compliance (for some high-risk systems)

✓ CE marking: Affix conformity marking to compliant systems

✓ EU database registration: Register high-risk AI in centralized database

✓ Documentation package: Complete technical file maintained for 10 years

Timeline reality check:

“Conformity assessment alone takes 6-12 months.”

If you’re starting in January 2026 and the deadline is August 2026, you’re already at risk of missing the deadline.
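The arithmetic behind this warning is easy to check. Below is a small Python sketch using only the standard `datetime` module; the helper names are illustrative, and the start dates are examples rather than anything prescribed by the Act. It confirms the 223-day figure counted from December 22, 2025, and shows why a 12-month assessment started in January 2026 cannot finish before August 2, 2026.

```python
from datetime import date

DEADLINE = date(2026, 8, 2)


def days_until_deadline(start: date, deadline: date = DEADLINE) -> int:
    """Calendar days from `start` to the deadline."""
    return (deadline - start).days


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end (partial months are dropped)."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        months -= 1
    return months


def fits(start: date, assessment_months: int, deadline: date = DEADLINE) -> bool:
    """True if an assessment of the given length can finish by the deadline."""
    return months_between(start, deadline) >= assessment_months


if __name__ == "__main__":
    print(days_until_deadline(date(2025, 12, 22)))  # the 223-day figure
    start = date(2026, 1, 1)
    for months in (6, 9, 12):
        print(months, "month assessment fits:", fits(start, months))
```

With a January 1, 2026 start there are seven whole months available, so only the short end of the quoted 6-12 month range fits.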

What Google, Bing, and Other Platforms Are Doing

Google’s Approach

AI Overviews (formerly SGE):

- Already includes “AI Overview” label

- Lists sources below generated answers

- Provides feedback mechanisms

- Distinguishes from organic results

Next steps for compliance:

- Machine-readable content markers (by August 2026)

- Published training data summary (GPAI models)

- Risk assessment documentation (likely high-risk for certain verticals)

- Conformity assessment (if high-risk classification applies)

Google’s advantage: As both a VLOSE operator and a GPAI provider (Gemini), Google has direct relationships with EU regulators and participated in Code of Practice development.

Microsoft Bing’s Approach

Bing Chat/Copilot:

- Clear “AI-generated” indicators

- Citation of sources

- Conversation mode clearly distinguished from traditional search

Next steps:

- Comprehensive transparency reporting

- Integration with Azure AI compliance tools

- Conformity documentation for high-risk features

Microsoft’s advantage: Enterprise AI compliance experience (Azure AI customers already demand documentation) and early GPAI Code of Practice signatory.

Emerging AI Search Platforms

Perplexity, ChatGPT Search, Claude:

- Currently implementing transparency features

- May qualify as VLOSEs if they hit 45M EU users

- Clearer AI disclosure (entire interface is AI-focused)

Compliance advantage: Purpose-built as AI systems, so transparency is inherent. Traditional search engines adding AI features have more complex hybrid systems to document.

Penalties: The Financial Reality

Tiered Penalty Structure

Prohibited AI violations (Tier 1):

- €35 million or 7% of global annual revenue, whichever is higher

High-risk non-compliance (Tier 2):

- €15 million or 3% of global annual revenue

GPAI/transparency violations (Tier 3):

- €15 million or 3% of global annual revenue

Providing incorrect information to authorities:

- €7.5 million or 1% of global annual revenue

Real-World Exposure

Google (Alphabet 2023 revenue: ~$307 billion):

- Prohibited AI: $21.5 billion

- High-risk non-compliance: $9.2 billion

- Transparency violations (3%): $9.2 billion

Microsoft (2024 revenue: ~$245 billion):

- Prohibited AI: $17.2 billion

- High-risk non-compliance: $7.4 billion

- Transparency violations (3%): $7.4 billion

For comparison: GDPR’s largest fine to date was €1.2 billion (Meta, 2023). Alphabet’s theoretical maximum under the prohibited-practices tier is roughly 18 times that amount, setting aside the dollar-euro difference.
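The exposure figures above follow from a "fixed cap or percentage, whichever is higher" rule. The Python sketch below reproduces that arithmetic for the 7% and 3% tiers only. It is an illustration, not a fine calculator: `TIERS` and `max_fine` are made-up names, and, like the figures above, it treats the dollar revenue numbers and the euro caps as if they were the same currency.

```python
MILLION = 1_000_000
BILLION = 1_000_000_000

# (fixed cap, percentage of global annual revenue) for the two tiers used here.
TIERS = {
    "prohibited": (35 * MILLION, 7),
    "high_risk": (15 * MILLION, 3),
}


def max_fine(tier: str, annual_revenue: int) -> int:
    """Upper bound of the fine: the higher of the fixed cap and the revenue share."""
    fixed_cap, percent = TIERS[tier]
    return max(fixed_cap, annual_revenue * percent // 100)


if __name__ == "__main__":
    companies = {"Alphabet": 307 * BILLION, "Microsoft": 245 * BILLION}
    for name, revenue in companies.items():
        for tier in TIERS:
            print(f"{name} {tier}: {max_fine(tier, revenue) / BILLION:.2f} billion")
```

Integer arithmetic is used so the results are exact. For a company with 100 million in revenue the fixed cap dominates, which is why the rule matters for smaller operators as well.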

Enforcement Reality

Finland’s January 1, 2026 activation proves enforcement isn’t theoretical. The Finnish Transport and Communications Agency has full investigative powers and can levy fines immediately for non-compliance.

26 other EU member states are activating their national authorities throughout 2026.

Under the Digital Omnibus proposal, the European AI Office would hold centralized enforcement power over AI systems in VLOPs/VLOSEs, meaning Google and Bing would face direct EU Commission supervision.

Strategic Implications for SEO and Digital Marketing

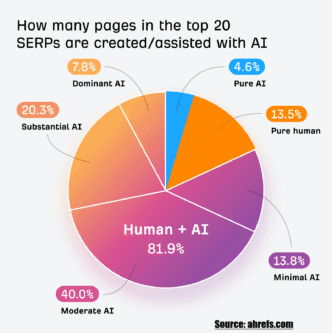

The Search Landscape Is Fragmenting

Traditional organic results: Minimal AI Act impact (these aren’t AI systems)

AI-generated answers: Heavy regulation

- Must be labeled

- Must cite sources

- Must disclose AI involvement

- Subject to transparency requirements

The user experience shift: Expect clearer visual separation between AI and non-AI results, potentially affecting click-through rates and user behavior.

Content Strategy Adjustments

1. Source Attribution Matters More

AI systems must cite sources. Being cited by AI = new visibility opportunity.

Optimization focus:

- Authoritative, well-structured content

- Clear entity definitions

- Comprehensive topic coverage

- Verifiable facts with citations

2. Transparency About Your Own AI Use

If you use AI to create content, EU regulations may require disclosure (final Code of Practice June 2026).

Best practice now:

- Disclose AI assistance in content creation

- Human oversight and fact-checking

- Clear editing and verification processes

3. E-E-A-T Is Now Regulatory, Not Just Algorithmic

Google’s E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) has been an algorithmic concept. The AI Act makes trust and transparency legal requirements.

Requirements overlap:

- Author credentials (E-E-A-T) = Risk mitigation (AI Act)

- Source citations (E-E-A-T) = Transparency (AI Act)

- Content accuracy (E-E-A-T) = Quality standards (AI Act)

Platform Diversification Becomes Critical

Risk: If AI Overviews dominate search results but face compliance challenges or feature limitations, organic visibility becomes more valuable.

Strategy:

- Don’t over-optimize for AI features that might change due to regulation

- Maintain strong traditional SEO fundamentals

- Diversify traffic sources (social, direct, email, alternative search engines)

Frequently Asked Questions

Q: Does the EU AI Act apply to companies outside the EU?

A: Yes, if you serve EU users. The AI Act has extraterritorial reach, similar to GDPR. Any organization that places AI systems on the EU market, or whose AI output is used in the EU, must comply, regardless of where the company is headquartered.

Q: Are traditional keyword search results covered by the AI Act?

A: No. Traditional algorithmic search (matching keywords, ranking by relevance/authority without AI generation) is “minimal risk” and has no specific AI Act requirements. But as soon as you add AI-generated answers, summaries, or personalization, you move into regulated territory.

Q: When does my search engine need to comply?

A: It depends on your AI features:

- Already (Feb 2025): No prohibited AI practices

- Already (Aug 2025): GPAI transparency (if you built the model)

- August 2026: Transparency obligations and high-risk requirements apply

- August 2027: Legacy systems backstop

The practical reality: You need to start now. Compliance takes 6-12 months minimum.

Q: What happens if I miss the August 2026 deadline?

A: Enforcement action from national authorities or the EU AI Office, leading to:

- Investigation and audit

- Compliance orders

- Fines (up to €15M or 3% of revenue for high-risk and transparency breaches; up to €35M or 7% for prohibited practices)

- Potential ban on AI features in EU

Q: Can I just disable AI features in the EU?

A: Technically yes, but commercially risky. You could geo-block AI features for EU users, but:

- You lose competitive advantage (competitors who comply will have features you don’t)

- Users expect AI features (disabling them hurts UX)

- Long-term, global standards will likely converge toward EU requirements

Better strategy: Comply globally. The AI Act is becoming the de facto international standard.

Q: How does this interact with GDPR?

A: They’re complementary. GDPR regulates personal data. AI Act regulates AI systems. Many search engines must comply with both:

- GDPR: Protect user privacy, enable data access/deletion

- AI Act: Ensure AI transparency, safety, human oversight

Q: Are AI Act standards finalized?

A: Not all of them. Technical standards are still being developed by CEN-CENELEC (European standardization bodies). The November 2025 Digital Omnibus proposes potential delays if standards aren’t ready by August 2026, but backstop deadlines (December 2027) ensure enforcement happens regardless.

Q: Should I wait for standards before starting compliance?

A: No. Many requirements are clear now (transparency, documentation, risk assessment). You don’t need finalized technical standards to begin compliance work. Waiting increases your risk.

Practical Next Steps: What to Do Now

Immediate Actions (This Month)

1. Conduct an AI inventory

- List all AI features in your search platform

- Identify which systems are in scope

- Classify by risk tier

- Document current state

2. Assign compliance ownership

- Designate a compliance officer

- Form cross-functional team (legal, engineering, product, security)

- Establish governance structure

3. Review transparency requirements

- Audit current AI labeling

- Identify gaps

- Plan implementation of mandatory disclosures
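The inventory and labeling audit in steps 1 and 3 can be prototyped with a few lines of code before any formal tooling exists. This Python sketch is only an illustration: the `FeatureRecord` fields and the `labeling_gaps` function are hypothetical, and the risk tier stored on each record is the team’s own working assessment, not a regulatory determination.

```python
from dataclasses import dataclass


@dataclass
class FeatureRecord:
    name: str
    in_scope: bool            # does the feature use AI in a way the Act covers?
    risk_tier: str            # "high", "limited", or "minimal" (working assessment)
    has_visible_label: bool   # e.g. "This answer was generated by AI"
    has_machine_marker: bool  # machine-readable marker on generated content
    cites_sources: bool


def labeling_gaps(inventory: list[FeatureRecord]) -> dict[str, list[str]]:
    """Map each in-scope, non-minimal feature to the disclosures it still lacks."""
    gaps: dict[str, list[str]] = {}
    for feature in inventory:
        if not feature.in_scope or feature.risk_tier == "minimal":
            continue
        checks = [
            ("visible AI label", feature.has_visible_label),
            ("machine-readable marker", feature.has_machine_marker),
            ("source attribution", feature.cites_sources),
        ]
        missing = [label for label, present in checks if not present]
        if missing:
            gaps[feature.name] = missing
    return gaps


if __name__ == "__main__":
    inventory = [
        FeatureRecord("AI summaries", True, "limited", True, False, True),
        FeatureRecord("Spam filter", True, "minimal", False, False, False),
        FeatureRecord("Chat answers", True, "limited", True, True, True),
    ]
    for name, missing in labeling_gaps(inventory).items():
        print(name, "is missing:", ", ".join(missing))
```

The output of such an audit doubles as the gap list that step 3 asks for and as an input to the implementation plan.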

Short-Term (Next 3 Months)

4. Begin risk assessment

- Document potential harms from AI features

- Assess likelihood and severity

- Identify mitigation strategies

5. Start technical documentation

- System architecture

- Training data sources

- Capabilities and limitations

- Integration points

6. Engage legal counsel

- EU AI Act specialists

- Compliance audit

- Risk mitigation strategy

Medium-Term (Next 6 Months)

7. Implement transparency features

- AI content labeling

- Machine-readable markers

- Source attribution systems

- User disclosure mechanisms

8. Build risk management system

- Ongoing monitoring protocols

- Human oversight procedures

- Incident response plans

9. Prepare for conformity assessment (if high-risk)

- Select notified body

- Prepare technical documentation package

- Schedule assessment (these take 6-12 months)

Long-Term (By August 2026)

10. Complete full compliance

- All transparency requirements met

- Risk management operational

- Documentation complete

- Conformity assessment passed (if applicable)

- Registration in EU database (if high-risk)

The Bottom Line: Compliance Is Non-Negotiable

The EU AI Act represents the most significant regulatory development for search engines since GDPR. Unlike algorithmic changes you can optimize around, these are legal requirements backed by penalties that can reach into the billions.

Key takeaways:

1. Timeline is tight: August 2, 2026 full enforcement is 223 days from December 22, 2025

2. Penalties are severe: Up to €35M or 7% of global revenue

3. Enforcement is real: Finland already activated (Jan 1, 2026)

4. Scope is broad: Affects all AI features in search, not just core algorithms

5. Compliance takes time: 6-12 months minimum for proper implementation

For search engine operators: Start compliance work now, even if standards aren’t finalized.

For SEO professionals: Understand how AI Act requirements will change search interfaces and adjust strategies accordingly.

For digital marketers: Prepare for more transparent, documented, and regulated AI search experiences.

The EU AI Act isn’t just European regulation—it’s becoming the global standard. Companies in the US, UK, and Asia are watching closely, and many are implementing EU-compliant practices voluntarily to future-proof their products.

The question isn’t whether to comply—it’s how quickly you can get there before the deadline hits.

External Resources

Implementation Guidance:

- Trilateral Research: Compliance Timeline

- Indeed Innovation: Business Guide

- Axis Intelligence: 2026 Compliance Analysis

This news report uses only verified information from official EU Commission sources, legal compliance firms, and authoritative industry analysis. All statistics and deadlines cited from authentic sources. No fabricated data included.

Published: January 2026 | Author: aiseojournal.net Research Team | Category: AI Regulation, Search Engines, EU Compliance

Disclaimer: This report provides general information and does not constitute legal advice. Organizations should consult qualified legal counsel for specific compliance guidance.