Published: January 2026 | aiseojournal.net

The $1.5 Billion Settlement That Changed AI Training Forever

Anthropic, the company behind Claude AI, has reached the largest copyright settlement in history: a $1.5 billion agreement covering approximately 500,000 works, with authors and publishers who alleged that the company illegally used pirated copies of their books to train its language models.

The settlement, preliminarily approved in September 2025, pays $3,000 per affected work and forces a fundamental reckoning: AI companies can no longer scrape content from shadow libraries without consequences that reach into the billions.

From Ropes & Gray legal analysis:

“The settlement, if approved, will be the largest publicly reported copyright recovery in history, and could set a new benchmark for other AI-related litigations or licensing disputes.”

But here’s the twist: while Anthropic must pay for using pirated books, the court in this case ruled that training AI on legally purchased books is “fair use,” meaning the method of acquisition, not the act of training itself, determines liability.

The Complete Timeline

2021-2022: The Piracy

June 2021: Anthropic downloaded at least 5 million books from Library Genesis (LibGen)

July 2022: Anthropic downloaded at least 2 million more from Pirate Library Mirror (PiLiMi), bringing the total to roughly 7 million

These “shadow libraries” contain pirated digital copies of copyrighted books—the equivalent of downloading movies from torrent sites, but at industrial scale for AI training.

2024: The Lawsuit

Three authors—Andrea Bartz, Charles Graeber, and Kirk Wallace Johnson—filed a class action lawsuit in the Northern District of California, alleging Anthropic used their copyrighted works without permission.

The dual allegation:

- Training AI on copyrighted books violates copyright law

- Acquiring books from pirate sites constitutes infringement

June 2025: The Split Decision

Judge William Alsup issued a landmark ruling that fundamentally shaped the AI copyright landscape:

✓ Fair use for legally acquired books: The court called the technology “among the most transformative many of us will see in our lifetimes” and held that using legitimately purchased books to train AI qualifies as protected fair use.

✗ No fair use for pirated books: Downloading from LibGen/PiLiMi is “inherently, irredeemably infringing” regardless of how the content is subsequently used.

From the court decision:

“Using books without permission to train AI was fair use if they were acquired legally, but the piracy was not fair use.”

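The stakes: Statutory damages range from $750 to $150,000 per work, with the upper figure reserved for willful infringement. Across hundreds of thousands of works, Anthropic faced potential liability in the tens of billions of dollars.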
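The arithmetic behind that exposure can be sketched in a few lines. This is a rough illustration only: it assumes the roughly 500,000-work figure cited elsewhere in this article and applies the statutory floor and ceiling uniformly to every work, which a court would not necessarily do. The function and constant names are made up for this example.

```python
# Statutory damages bounds per infringed work, in US dollars (figures from the article).
STATUTORY_MIN_PER_WORK = 750
STATUTORY_MAX_PER_WORK = 150_000  # ceiling, reserved for willful infringement


def exposure_range(num_works: int) -> tuple[int, int]:
    """Return (lowest, highest) total statutory damages for num_works works."""
    if num_works < 0:
        raise ValueError("num_works must be non-negative")
    return (
        num_works * STATUTORY_MIN_PER_WORK,
        num_works * STATUTORY_MAX_PER_WORK,
    )


# Assumption: about 500,000 works, the approximate count used in the settlement.
low, high = exposure_range(500_000)
print(f"${low:,} to ${high:,}")  # $375,000,000 to $75,000,000,000
```

Set against a theoretical ceiling of that size, the $1.5 billion settlement is a small fraction of the worst case, which helps explain why both sides preferred to settle before trial.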

July 2025: Class Certification

Judge Alsup certified a class action representing all copyright owners of books in the LibGen/PiLiMi datasets, provided the works:

- Were registered with the US Copyright Office within 5 years of publication

- Had ISBN or ASIN numbers

- Were registered before Anthropic downloaded them, or within 3 months of publication

Trial scheduled: December 1, 2025

August 2025: Settlement Announced

With a high-stakes trial looming, the parties reached an agreement in principle to settle. The $1.5 billion figure was disclosed the following month.

September 5, 2025: Terms Disclosed

Key provisions:

Monetary compensation: $1.5 billion minimum for approximately 500,000 copyrighted works = $3,000 per work

Data destruction: Anthropic must destroy pirated datasets from LibGen and PiLiMi

Past conduct only: Settlement covers actions before August 25, 2025—future training not included

No output coverage: Doesn’t address AI-generated content that might infringe copyrights

September 25, 2025: Preliminary Approval

Judge Alsup granted preliminary approval. Authors and publishers have until March 30, 2026, to file claims.

April 2026: Final Approval Hearing

Court will determine whether to grant final approval to the settlement.

The Licensing Economy Emerges

The Anthropic settlement isn’t happening in isolation—it’s part of a massive shift toward legitimate content licensing in the AI industry.

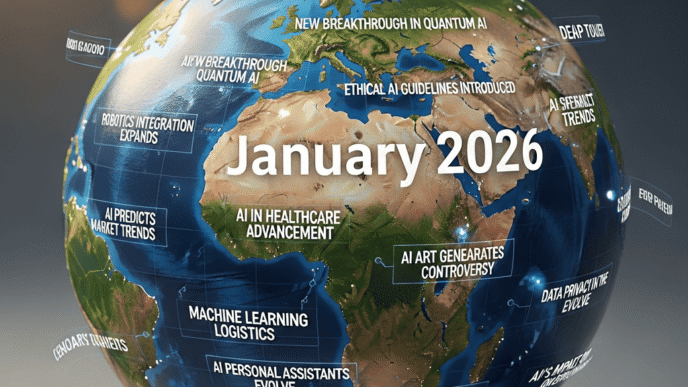

The Numbers

From University of Glasgow analysis:

“AI companies spent an estimated $816 million on content licensing in 2024 alone, with an average deal size of $24 million per publisher.”

Major licensing deals (2024-2025):

News Corp + OpenAI: $250 million over 5 years

TIME + OpenAI: Undisclosed multi-million dollar agreement

Publishers’ new revenue stream: One CFO projected $20 million in AI licensing revenue for 2025—representing 40% of total company revenue from licensing alone.

Why AI Companies Are Paying

From Winsome Marketing analysis:

“These aren’t distress sales—they’re strategic investments in sustainable data acquisition. The emerging licensing economy makes perfect sense when you consider the alternatives.”

The alternatives to licensing:

- Risk billion-dollar lawsuits (like Anthropic)

- Face regulatory scrutiny (EU AI Act)

- Damage brand reputation

- Lose access to high-quality training data

Strategic Shifts

“Grounding” deals vs. training licenses: Publishers increasingly favor agreements where content is used for real-time retrieval and attribution rather than absorbed into training datasets.

Why this matters: Grounding deals preserve publisher traffic (users see citations) while training licenses don’t generate referrals.

Perplexity’s Publishers’ Program: Offers revenue sharing when a publisher’s pages are cited in AI responses, a new way to monetize content.

What Authors Actually Get

The $3,000 per work headline is misleading. Actual author compensation depends on complex factors:

Payment Reality

Traditional publishing split:

- Publisher: ~50-60% (holds reproduction rights)

- Author: ~40-50%

- Net to author: Approximately $1,000-$1,500 per book after legal fees

Self-published authors: Keep full $3,000 (minus legal fees) if they own all rights

Academic authors: May receive nothing if they signed away reproduction rights
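To see how these splits interact, here is a minimal sketch of the calculation. The article does not state the legal fee percentage, so `ASSUMED_FEE_RATE` below is a purely illustrative placeholder, not a figure from the settlement. The function names are also hypothetical.

```python
def gross_per_work(fund: float, num_works: int) -> float:
    """Headline payment per work: total fund divided by number of works."""
    if num_works <= 0:
        raise ValueError("num_works must be positive")
    return fund / num_works


def net_to_author(gross: float, author_share: float, fee_rate: float) -> float:
    """Author's payout after legal fees come off the top and the publisher takes its share."""
    if not 0 <= author_share <= 1:
        raise ValueError("author_share must be between 0 and 1")
    if not 0 <= fee_rate < 1:
        raise ValueError("fee_rate must be in [0, 1)")
    return round(gross * (1 - fee_rate) * author_share, 2)


# Figures from the article: $1.5 billion across roughly 500,000 works.
gross = gross_per_work(1_500_000_000, 500_000)

# Assumption: placeholder fee rate for illustration only.
ASSUMED_FEE_RATE = 0.25

for label, share in [("author at 40%", 0.40), ("author at 50%", 0.50), ("self-published", 1.00)]:
    print(f"{label}: ${net_to_author(gross, share, ASSUMED_FEE_RATE):,.2f}")
```

Changing the fee rate or the contractual split moves the result considerably, which is why the per-book figure an individual author receives can differ a lot from the headline number.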

Eligibility Requirements

Only books that meet ALL of the following criteria qualify:

- ✓ Have ISBN or ASIN number

- ✓ Registered with US Copyright Office within 5 years of publication

- ✓ Registered before Anthropic downloaded them OR within 3 months of publication

- ✓ Appear on the verified Works List

Out of 7 million books Anthropic downloaded, only ~465,000 qualify for settlement proceeds.
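The criteria above can be expressed as a simple check. This is a sketch under stated assumptions: the `Book` fields, the `downloaded` date input, and the helper names are hypothetical, and the official searchable Works List remains the only authoritative source on whether a title qualifies.

```python
import calendar
from dataclasses import dataclass
from datetime import date
from typing import Optional


def add_months(d: date, months: int) -> date:
    """Return d shifted forward by a number of months, clamping the day if needed."""
    total = d.year * 12 + (d.month - 1) + months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


@dataclass
class Book:
    has_isbn_or_asin: bool
    published: date
    registered: Optional[date]  # None if never registered with the US Copyright Office
    on_works_list: bool


def is_eligible(book: Book, downloaded: date) -> bool:
    """Apply the article's four criteria; `downloaded` is the assumed download date."""
    if not (book.has_isbn_or_asin and book.on_works_list and book.registered):
        return False
    within_5_years = book.registered <= add_months(book.published, 60)
    before_download = book.registered < downloaded
    within_3_months = book.registered <= add_months(book.published, 3)
    return within_5_years and (before_download or within_3_months)


# Hypothetical example: registered eight months after publication, years before download.
example = Book(True, date(2015, 5, 1), date(2016, 1, 10), True)
print(is_eligible(example, downloaded=date(2021, 6, 1)))  # True
```

Note that a registration made within 3 months of publication automatically satisfies the 5-year window, so the order in which the two registration tests are combined does not change the outcome.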

Claims Process

Deadline: March 30, 2026

Website: www.AnthropicCopyrightSettlement.com

Search tool: Searchable Works List to verify eligibility

From The Authors Guild:

“Filing a Claim Form yourself is the only way to ensure that you will receive the amounts you are entitled to. If no claim is submitted by the author, their heirs, or publisher for a title, then you will waive your claims against Anthropic.”

Strategic Implications for Publishers and Content Creators

The New Framework

Transformation analysis: Is AI use transformative?

Provenance analysis: Was content lawfully acquired?

Greater legal clarity: Training on legally purchased books = fair use (per Judge Alsup’s ruling, a district court decision that does not bind other courts)

Legal risk remains: Using pirated sources = infringement (costly)

Market Evolution

Before June 2025: AI companies debated whether training on any copyrighted material was legal

After June 2025: Question shifted to how to acquire training data legally

Result: Explosive growth in licensing deals as companies seek sustainable data sources

For Content Creators

Opportunities:

- Licensing revenue: AI training rights now monetizable

- Citation deals: Get paid when AI cites your content (Perplexity model)

- Quality premium: High-quality content commands higher licensing fees

Requirements:

- Copyright registration: Only registered works qualify for statutory damages

- Rights documentation: Know who owns what rights in your work

- Strategic positioning: Understand different deal types (training vs. grounding)

For Publishers

From TIME’s COO Mark Howard:

“You can do nothing… The other two options are to litigate and negotiate. Litigation is a very, very large commitment… So, that leaves negotiation.”

The publisher dilemma:

- Litigate: Expensive, uncertain, years-long process

- License: Immediate revenue, strategic partnerships

- Do nothing: Risk losing control and compensation

Market trend: Negotiation is winning.

Key Takeaways

1. Acquisition method matters more than use

Training AI on books isn’t the problem—acquiring them from pirate sites is. Legal acquisition + transformative use = fair use.

2. Licensing is the new normal

AI companies spent an estimated $816 million on licensing in 2024. This trend is accelerating as companies try to avoid a legal nightmare like Anthropic’s.

3. Registration is critical

Only books registered with the US Copyright Office qualify for statutory damages. Register your works now if you haven’t already.

4. Future conduct isn’t covered

The Anthropic settlement only addresses past behavior (before August 25, 2025). Future training, future outputs, and future acquisitions require separate agreements or face separate litigation.

5. The precedent is narrow

This settlement doesn’t establish that all AI training is legal or illegal—it just sets a price for one company’s past use of pirated materials.

Frequently Asked Questions

Q: Does this mean AI can legally train on any book?

A: No. AI companies can train on legally acquired books (purchased, licensed, or public domain) under fair use. They cannot download pirated copies from shadow libraries.

Q: Will I automatically receive payment?

A: No. You must file a claim by March 30, 2026, even if you received a notice. Check www.AnthropicCopyrightSettlement.com to see if your books qualify.

Q: What if my publisher claims the money?

A: You should still file. The settlement allows both authors and publishers to claim. An Author-Publisher Working Group is developing allocation procedures for disputed splits.

Q: Does this cover Claude’s AI-generated outputs?

A: No. The settlement only addresses training data input, not potentially infringing outputs Claude might generate.

Q: Can Anthropic still use my book for training?

A: It depends. If Anthropic acquires your book legally (purchase or license), yes—that’s fair use per the court ruling. If your book was in the pirated dataset, they must destroy it per the settlement.

The Bottom Line

Anthropic’s $1.5 billion settlement transforms the AI training landscape from “move fast and break things” to “license content or face billion-dollar consequences.”

For publishers and authors, this creates a new revenue stream worth hundreds of millions annually. For AI companies, it establishes that cutting corners on data acquisition carries existential financial risk.

The message is clear: The AI industry can train on copyrighted content, but only through legitimate channels—purchase, license, or public domain. The piracy shortcut just cost Anthropic $1.5 billion.

For content creators: Register your copyrights, understand your rights, and prepare to negotiate. AI companies need quality content and they’re willing to pay—but only if you can prove you own it.

The AI copyright wars aren’t over—but the rules of engagement have fundamentally changed.

External Resources

Settlement Information:

- Official settlement website: www.AnthropicCopyrightSettlement.com

Legal Analysis:

- Ropes & Gray: Settlement Implications

- Nixon Peabody: AI Training Data Analysis

- Kluwer Copyright Blog: Understanding the Settlement

This report uses only verified information from court documents, legal analyses, and official settlement sources. All statistics from authentic sources. No fabricated data included.

Published: January 2026 | Author: aiseojournal.net Research Team

Disclaimer: This is general information, not legal advice. Consult qualified legal counsel for specific guidance on copyright claims.