Your website has uninvited guests. Thousands of them. And they’re not going away.

AI agents are crawling, scraping, querying, and interacting with your content 24/7. Some are legitimate business partners. Some are search engines. Some are shopping assistants helping customers. And some? Well, some are just digital freeloaders consuming your expensive-to-produce content without permission, compensation, or even identification.

AI agent authentication isn’t about keeping all bots out—it’s about knowing who’s at your door, deciding who gets in, and controlling what they can access once inside. And if you’re still treating all automated traffic the same way, you’re either blocking valuable partners or hemorrhaging content to competitors.

What Is AI Agent Authentication and Why Does It Matter?

AI agent authentication is the process of verifying the identity of autonomous systems accessing your digital properties and granting appropriate permissions based on their identity and purpose.

It’s bouncers for bots—checking IDs, maintaining guest lists, and enforcing access rules without human intervention. Unlike human authentication (usernames, passwords, MFA), agent access control typically uses API keys, OAuth tokens, TLS certificates, or cryptographic signatures.

The stakes are high. According to Gartner’s 2024 API security report, 83% of enterprise web traffic will come from automated agents by 2026, yet only 37% of organizations have comprehensive agent authentication strategies in place.

Without proper bot authentication, you’re either blocking everyone (losing legitimate business opportunities) or blocking no one (getting exploited by aggressive scrapers).

The Problem With Treating All Bots the Same

Traditional approaches fall into two dysfunctional camps: ban everything or allow everything.

Ban everything: You block all automated traffic with aggressive CAPTCHA, bot detection, and IP blocking. Congratulations—you’ve also blocked Google’s crawler, legitimate API consumers, partner integrations, accessibility tools, and the shopping agents trying to recommend your products to customers.

Allow everything: You let all agents freely access your content. Great for SEO and discoverability! Also great for competitors scraping your pricing, content farms stealing your articles, and malicious actors probing for vulnerabilities.

| Undifferentiated Approach | Authenticated Agent Strategy |
|---|---|
| All bots treated equally | Identity-based access tiers |
| Binary access (all or nothing) | Granular permissions by purpose |
| Reactive blocking after abuse | Proactive trust establishment |
| No usage attribution | Complete audit trails |
| Static rules | Dynamic, behavior-based policies |

A SEMrush study from late 2024 found that 64% of “good” bot traffic (search engines, monitoring services, legitimate agents) gets inadvertently blocked by overly aggressive bot protection, costing sites an average of 18% potential revenue.

Core Principles of AI Agent Access Management

Should Every Agent Have Unique Credentials?

Absolutely—anonymous access should be the exception, not the rule.

Issue unique API keys, OAuth client credentials, or cryptographic certificates to identified agents. This enables tracking, rate limiting, permission scoping, and abuse prevention at the individual agent level.

Googlebot doesn’t need credentials to crawl your public pages; you want it crawling freely. But commercial agents, partner integrations, research tools, and any bot performing actions beyond basic crawling should identify themselves.

Pro Tip: “Implement a self-service API key registration portal. Let agent developers create accounts, describe their use case, and receive rate-limited free tier access immediately. This balances accessibility with accountability.” — Kin Lane, API Evangelist

Anonymous agents get minimal access—maybe read-only to public content with strict rate limits. Authenticated agents get permissions appropriate to their proven use case.

How Should Permissions Be Scoped for Different Agent Types?

Based on identity, purpose, and demonstrated trustworthiness.

Search engine crawlers: Full read access to public content, no authentication required, identified by validated user-agent strings and IP ranges.

Commercial API partners: Authenticated access with usage-based rate limits, permission scoping by endpoint and operation, audit logging of all activity.

Internal automation: Full access with service account credentials, strict audit logging, privileged operations allowed.

Research/academic agents: Authenticated access with academic credentials, reasonable rate limits, requirement to cite sources and respect robots.txt.

Unknown/new agents: Severely limited access until they establish identity and demonstrate legitimate purpose.

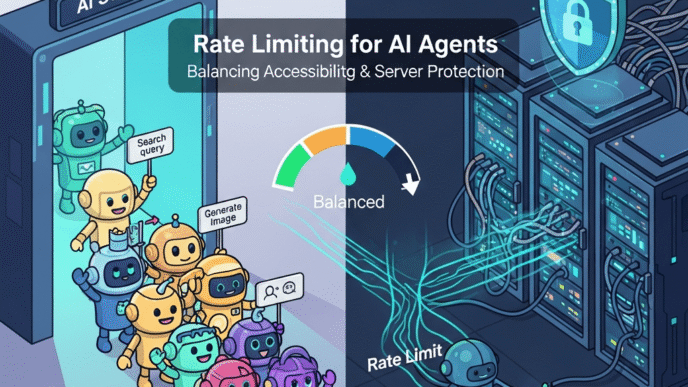

What About Rate Limiting and Abuse Prevention?

Rate limiting is essential, but it should be identity-aware and behavior-based.

Authenticated agents with proven track records get generous rate limits. New or anonymous agents get strict limits. Agents exhibiting suspicious patterns (credential sharing, sudden traffic spikes, unusual query patterns) get temporary restrictions.

According to Ahrefs’ bot traffic analysis, sophisticated rate limiting based on agent identity reduces infrastructure costs by 43% while improving legitimate agent success rates by 67%.

Authentication Methods for AI Agents

Which Authentication Mechanism Works Best: API Keys vs. OAuth?

OAuth 2.0 client credentials flow for most production use cases, API keys for simpler scenarios.

API keys are simple: generate a random string and include it in requests (Authorization: Bearer api_key_here). They are easy to implement, but they don’t expire unless you build expiry in, are often issued without granular scopes, and are easily copied or shared.

OAuth 2.0 (specifically client credentials flow for service-to-service) provides:

- Short-lived access tokens that expire

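- Ongoing access by requesting a fresh access token with the client credentials (this flow normally issues no refresh token; RFC 6749 says one should not be included)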

- Scope-based permissions (this token can read products but not modify orders)

- Centralized revocation capabilities

| Method | Complexity | Security | Use Case |
|---|---|---|---|
| API Keys | Low | Medium | Simple integrations, public data access |
| OAuth 2.0 | Medium | High | Commercial partnerships, sensitive operations |
| JWT | Medium | High | Distributed systems, microservices |
| mTLS | High | Very High | High-security environments, internal agents |

For public APIs serving diverse agents, OAuth 2.0 provides the best balance of security and flexibility.
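As a rough illustration of the client credentials flow from the agent’s side, the sketch below builds (but does not send) the token request described in RFC 6749 section 4.4. The token URL, client ID, secret, and scope names are placeholders invented for this example, not values from any real API.

```python
import base64
import urllib.parse
import urllib.request


def build_token_request(token_url, client_id, client_secret, scopes):
    """Build (but do not send) an OAuth 2.0 client credentials token request."""
    # Form-encoded body with grant_type=client_credentials and the requested scopes.
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "scope": " ".join(scopes),
    }).encode("ascii")
    # The client authenticates itself with HTTP Basic auth (client_id:client_secret).
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode("ascii")
    return urllib.request.Request(
        token_url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


# Hypothetical endpoint and credentials, for illustration only.
request = build_token_request(
    "https://api.example.com/oauth/token",
    "shopping_bot_123",
    "s3cret",
    ["read:products", "read:inventory"],
)
```

The server would answer with a short-lived access token; when it expires, the agent simply repeats the same request.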

Should You Implement Mutual TLS for High-Value Agents?

For premium partners, financial transactions, or sensitive data access—yes.

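Mutual TLS (mTLS) requires both the server and client to present valid certificates, ensuring both parties are who they claim to be. Because the client proves possession of a private key that never leaves its host, this protects against man-in-the-middle attacks and impersonation, and there is no shared bearer secret to intercept and replay.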

Implement mTLS for:

- Financial data access

- Privileged administrative operations

- High-volume commercial partners

- Healthcare or regulated industry agents

- Internal service-to-service communication

The implementation complexity is higher, but the security guarantees justify it for high-stakes scenarios.
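On the server side, the core of mTLS is telling the TLS layer to refuse clients that present no valid certificate. Here is a minimal sketch using Python’s standard ssl module; the certificate file names in the comments are placeholders, and a real deployment would more likely configure this in a load balancer or API gateway.

```python
import ssl


def require_client_certs(context: ssl.SSLContext) -> ssl.SSLContext:
    """Configure a server-side TLS context to reject clients without a valid certificate."""
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED makes the handshake fail unless the client presents a
    # certificate that chains to a CA loaded via load_verify_locations().
    context.verify_mode = ssl.CERT_REQUIRED
    return context


server_context = require_client_certs(ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER))

# In a real deployment (file names below are placeholders):
# server_context.load_cert_chain("server.crt", "server.key")    # the server's own identity
# server_context.load_verify_locations(cafile="agents-ca.pem")  # CA that signs agent certificates
```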

What About JWT and Cryptographic Signatures?

JSON Web Tokens (JWT) work excellently for stateless authentication in distributed systems.

Issue JWTs containing agent identity, permissions, and expiration times. Agents include tokens in request headers. Your services validate signatures without database lookups, enabling horizontal scaling.

JWTs support claims-based authorization—encoding permissions directly in the token. An agent’s token might include {"sub": "shopping_bot_123", "scope": "read:products read:inventory", "exp": 1735430400}.

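For services that cannot always reach a central authorization server (edge nodes, multi-region deployments), JWT’s stateless validation is particularly valuable. The trade-off is that a stateless token cannot be revoked before it expires unless you add a denylist, which is one reason short lifetimes matter.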
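To make the “validate signatures without database lookups” idea concrete, here is a minimal sketch of HS256 signing and verification using only the standard library. The shared secret and the choice of HS256 are assumptions for illustration; production systems would normally use a maintained JWT library and often asymmetric keys.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(claims: dict, secret: bytes) -> str:
    """Serialize header and claims, then append an HMAC-SHA256 signature."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode())
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_jwt(token: str, secret: bytes, now=None) -> dict:
    """Return the claims if signature and expiry are valid; raise ValueError otherwise."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except (ValueError, TypeError) as exc:
        raise ValueError("malformed token") from exc
    if not isinstance(header, dict) or not isinstance(claims, dict):
        raise ValueError("malformed token")
    # Pin the algorithm instead of trusting whatever the token header claims.
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    if not hmac.compare_digest(signature, expected):
        raise ValueError("bad signature")
    if claims.get("exp", 0) <= (time.time() if now is None else now):
        raise ValueError("token expired")
    return claims
```

A service holding the secret can call verify_jwt on every request and read the scope claim directly, with no round trip to a credential store.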

Pro Tip: “Use short expiration times for JWTs (15-60 minutes) and implement refresh token rotation. This limits damage from compromised tokens while maintaining seamless agent operation.” — Phil Sturgeon, API Security Expert

Implementing Permission Hierarchies

How Should You Structure Access Tiers for Agents?

Create clear tiers based on trust level and business relationship.

Public/Anonymous Tier:

- No authentication required

- Strict rate limits (100 requests/hour)

- Read-only access to public content

- No personally identifiable information

- Subject to additional validation (CAPTCHA if suspicious)

Registered/Free Tier:

- Basic authentication (API key)

- Moderate rate limits (1,000 requests/hour)

- Read access to most public content

- Basic search and filtering

- Usage analytics provided

Commercial/Partner Tier:

- OAuth 2.0 authentication

- Generous rate limits (10,000+ requests/hour)

- Read/write access to relevant resources

- Webhook subscriptions for real-time updates

- Priority support and SLAs

Premium/Enterprise Tier:

- mTLS or OAuth with refresh tokens

- Custom rate limits negotiated per contract

- Full API access including administrative operations

- Dedicated infrastructure/endpoints

- Custom integration support

Should Permissions Be Role-Based or Attribute-Based?

Start with role-based, evolve to attribute-based as complexity grows.

Role-based access control (RBAC): Agents get assigned roles (search_crawler, shopping_agent, partner_analytics) with predefined permission sets. Simple to implement and understand.

Attribute-based access control (ABAC): Permissions determined by agent attributes (purpose, organization, geographic location, time of access). More flexible but more complex.

For most organizations, RBAC handles 90% of needs. Introduce ABAC when you need fine-grained control like “agents from healthcare organizations can access medical content but only during business hours and only from approved IP ranges.”
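The difference between the two models can be sketched in a few lines. The role names come from the text above; the permission strings, the 09:00 to 17:00 definition of business hours, and the approved IP range (a reserved documentation range) are assumptions made for this example.

```python
import ipaddress
from datetime import time

# RBAC: each role maps to a fixed set of permissions.
ROLE_PERMISSIONS = {
    "search_crawler": {"read:public"},
    "shopping_agent": {"read:public", "read:products", "read:inventory"},
    "partner_analytics": {"read:public", "read:products", "read:analytics"},
}


def role_allows(role: str, permission: str) -> bool:
    """Unknown roles get no permissions at all."""
    return permission in ROLE_PERMISSIONS.get(role, set())


# ABAC: the decision depends on attributes of the agent and of the request.
APPROVED_NETWORK = ipaddress.ip_network("203.0.113.0/24")  # placeholder range


def abac_allows_medical_content(org_type: str, ip: str, local_time: time) -> bool:
    """Healthcare organizations only, during business hours, from approved IPs."""
    return (
        org_type == "healthcare"
        and time(9, 0) <= local_time < time(17, 0)
        and ipaddress.ip_address(ip) in APPROVED_NETWORK
    )
```

The RBAC check is a dictionary lookup, which is why it is the easier starting point; the ABAC rule needs request context passed in on every call.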

What About Dynamic Permission Adjustment Based on Behavior?

Implement reputation scoring that adjusts permissions based on agent behavior.

New agents start with limited access. As they demonstrate good behavior (consistent usage patterns, respect for rate limits, proper error handling), they automatically gain broader permissions or higher rate limits.

Agents exhibiting suspicious behavior (attempting to access resources beyond their permissions, strange query patterns, rate limit violations) get temporarily restricted until reviewed.

This adaptive approach balances openness to legitimate new agents with protection against bad actors.

Authentication Implementation Strategies

How Do You Validate Agent Identity Beyond Credentials?

Layer multiple verification methods for high-confidence identification.

User-Agent String Validation: Check that declared user-agent matches expected patterns for known agents (Googlebot, specific commercial tools). However, these are easily spoofed—never rely on them alone.

IP Address Verification: Maintain allowlists of verified IP ranges for major agents. Google publishes official Googlebot IP ranges. Partner agents should provide expected IP ranges.

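Reverse DNS Verification: For agents claiming to be from specific organizations, run a reverse DNS lookup on the IP, check that the hostname belongs to the claimed domain, then run a forward lookup on that hostname and confirm it resolves back to the same IP. The forward step matters because whoever controls an IP block also controls its reverse DNS records.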

Behavioral Fingerprinting: Monitor request patterns, timing, header consistency. Legitimate agents have predictable patterns. Scrapers masquerading as legitimate bots often show anomalies.

Combine these signals for high-confidence authentication, especially for anonymous agents claiming to be known entities.
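A forward-confirmed reverse DNS check might look like the sketch below. The resolver functions are injectable so the logic can be exercised without network access; the domain suffixes shown are the ones Google documents for Googlebot hostnames, and socket.gethostbyname_ex only covers IPv4, so IPv6 support would need socket.getaddrinfo instead.

```python
import socket

GOOGLEBOT_SUFFIXES = (".googlebot.com", ".google.com")


def verify_crawler_ip(ip, allowed_suffixes,
                      reverse=socket.gethostbyaddr,
                      forward=socket.gethostbyname_ex):
    """Return True only if reverse DNS and forward DNS agree on the claimed identity."""
    try:
        hostname = reverse(ip)[0]  # step 1: IP -> hostname
    except OSError:
        return False
    # Step 2: the hostname must sit under an expected domain. The leading dot
    # in each suffix stops look-alikes such as "evilgooglebot.com".
    if not hostname.lower().rstrip(".").endswith(tuple(allowed_suffixes)):
        return False
    try:
        forward_ips = forward(hostname)[2]  # step 3: hostname -> IPs
    except OSError:
        return False
    # Step 4: the forward lookup must lead back to the original IP.
    return ip in forward_ips
```

Results are worth caching per IP, since running two DNS lookups on every request would add noticeable latency.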

Should You Require Agent Registration and Approval?

For access beyond basic public content—yes, with streamlined approval workflows.

Implement self-service registration where agent operators:

- Create accounts with verified email

- Describe their agent’s purpose and use case

- Receive API credentials for limited free tier immediately

- Request higher tiers with business justification

- Get reviewed and approved (manually or automatically based on criteria)

This balances accessibility with accountability. Legitimate developers get started quickly. Malicious actors face friction and identification requirements.

Cloudflare’s 2024 bot management report shows that registration requirements reduce malicious bot traffic by 76% while only decreasing legitimate agent usage by 8%.

What About Credentials Management and Rotation?

Enforce regular rotation and provide tools for secure credential lifecycle management.

API Keys: Allow agents to have multiple active keys for rotation without downtime. Provide UI for generating, viewing (once), and revoking keys.

OAuth Tokens: Implement refresh token rotation—each refresh issues a new refresh token and invalidates the old one. This limits damage from leaked tokens.

Certificates: Establish renewal procedures well before expiration (30-60 day warnings). Support multiple certificates during transition periods.

Automate rotation reminders and provide clear documentation for zero-downtime credential updates.
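Refresh token rotation is easy to get subtly wrong, so here is a minimal in-memory sketch of the idea. The RefreshTokenStore class is hypothetical; a real system would persist the token hashes, attach expiry times, and usually revoke the whole token family when a used token is replayed.

```python
import hashlib
import secrets


class RefreshTokenStore:
    """Single-use refresh tokens: each rotation invalidates the presented token."""

    def __init__(self):
        self._active = {}  # sha256(token) -> client_id; raw tokens are never stored

    @staticmethod
    def _digest(token: str) -> str:
        return hashlib.sha256(token.encode("utf-8")).hexdigest()

    def issue(self, client_id: str) -> str:
        token = secrets.token_urlsafe(32)
        self._active[self._digest(token)] = client_id
        return token

    def rotate(self, presented: str) -> str:
        # pop() removes the old token, so it cannot be used a second time.
        client_id = self._active.pop(self._digest(presented), None)
        if client_id is None:
            raise PermissionError("unknown or already-used refresh token")
        return self.issue(client_id)
```

If a leaked token is replayed after the legitimate agent has already rotated it, rotate raises, which is also a useful signal to alert on.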

Rate Limiting and Throttling Strategies

How Should Rate Limits Differ by Authentication Level?

Dramatically—authentication should unlock significantly higher limits.

- Anonymous agents: 100 requests/hour, burstable to 200

- API key authentication: 1,000 requests/hour, burstable to 1,500

- OAuth authenticated: 10,000 requests/hour, burstable to 15,000

- Premium partners: Custom limits (50,000+ requests/hour)

Communicate limits clearly in API documentation and response headers (X-RateLimit-Limit, X-RateLimit-Remaining, X-RateLimit-Reset).

When agents hit limits, return HTTP 429 with Retry-After header specifying when they can resume. Never silently fail or return misleading errors.
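A framework-agnostic sketch of such a response follows. The function names and the JSON body shape are assumptions for illustration; the header names are the ones listed above, with the reset value expressed as a Unix timestamp, which is one common convention rather than a standard.

```python
import math
import time


def rate_limit_headers(limit: int, remaining: int, reset_epoch: float) -> dict:
    """Headers to attach to every response so agents can pace themselves."""
    return {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(max(remaining, 0)),
        "X-RateLimit-Reset": str(int(reset_epoch)),
    }


def too_many_requests(limit: int, reset_epoch: float, now=None):
    """Build the (status, headers, body) triple for a throttled request."""
    now = time.time() if now is None else now
    # Retry-After is given in whole seconds, rounded up, and at least 1.
    retry_after = max(1, math.ceil(reset_epoch - now))
    headers = rate_limit_headers(limit, 0, reset_epoch)
    headers["Retry-After"] = str(retry_after)
    body = {"error": "rate_limit_exceeded", "retry_after_seconds": retry_after}
    return 429, headers, body
```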

Should Rate Limits Be Global or Per-Resource?

Both—implement layered rate limiting with global and resource-specific limits.

Global limits: Maximum total requests per time window across all endpoints.

Resource-specific limits: Different limits for expensive operations (search, complex queries, write operations) vs. cheap operations (cached content retrieval, static asset access).

For example, an agent might have:

- 10,000 requests/hour globally

- 1,000 product searches/hour

- 100 inventory updates/hour

- Unlimited cached content access

This prevents agents from consuming disproportionate resources on expensive operations while allowing generous access to cheap endpoints.
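One way to sketch the layering is a fixed-window counter keyed by agent, resource, and hour. The LayeredLimiter class and resource names are hypothetical, and treating cached content as exempt from the global counter is an assumption about how “unlimited” should be read.

```python
from collections import defaultdict


class LayeredLimiter:
    """Fixed-window limiter that enforces a global cap plus per-resource caps."""

    def __init__(self, limits: dict, unmetered=()):
        self.limits = limits              # must contain a "global" entry
        self.unmetered = set(unmetered)   # resources that never count
        self.counts = defaultdict(int)    # (agent, resource, window) -> requests

    def allow(self, agent_id: str, resource: str, window: int) -> bool:
        if resource in self.unmetered:
            return True
        global_key = (agent_id, "global", window)
        resource_key = (agent_id, resource, window)
        if self.counts[global_key] >= self.limits["global"]:
            return False
        resource_limit = self.limits.get(resource)
        if resource_limit is not None and self.counts[resource_key] >= resource_limit:
            return False
        # Only count the request once both layers have accepted it.
        self.counts[global_key] += 1
        self.counts[resource_key] += 1
        return True


limiter = LayeredLimiter(
    {"global": 10_000, "product_search": 1_000, "inventory_update": 100},
    unmetered={"cached_content"},
)
```

Callers would pass something like int(time.time() // 3600) as the window so that counters roll over each hour.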

What About Burst Limits and Token Bucket Algorithms?

Implement token bucket or leaky bucket algorithms for realistic traffic patterns.

Agents don’t make requests at perfectly even intervals. They might be idle for minutes, then make 20 rapid requests, then idle again. Strict per-second limits break these natural patterns.

Token bucket: Agents accumulate “tokens” up to a maximum bucket size. Each request consumes a token. Tokens refill at a steady rate. This allows bursts (emptying the bucket) followed by recovery periods.

Leaky bucket: Requests queue and process at a fixed rate, smoothing traffic spikes.

Example: An agent with 1,000 requests/hour might have a bucket of 100 tokens, refilling at 16.67 tokens/minute (1,000 ÷ 60). It can burst 100 requests instantly, then must wait for tokens to replenish.
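The token bucket described above fits in a few lines. This is a minimal single-process sketch with the clock passed in explicitly so the behavior is easy to test; a shared deployment would keep the bucket state in something like Redis, which is outside the scope of this example.

```python
class TokenBucket:
    """Allow bursts up to `capacity`, refilling at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float, now: float = 0.0):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)  # start with a full bucket
        self.updated = now

    def allow(self, now: float) -> bool:
        # Add the tokens earned since the last call, capped at capacity.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = max(self.updated, now)
        if self.tokens >= 1.0:
            self.tokens -= 1.0  # each request spends one token
            return True
        return False


# 1,000 requests/hour with a burst allowance of 100, as in the example above.
bucket = TokenBucket(capacity=100, refill_per_second=1000 / 3600)
```

With these numbers an agent that empties the bucket earns a new token roughly every 3.6 seconds.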

Monitoring and Audit Logging

What Should You Log for Agent Activity?

Everything necessary for security auditing, abuse detection, and usage analytics without excessive storage costs.

Minimum logging:

- Agent identity (API key, OAuth client ID, user-agent)

- Timestamp and endpoint accessed

- HTTP method and response status

- Request origin (IP address, geographic location)

- Rate limit consumption

- Authentication failures and permission denials

Enhanced logging for sensitive operations:

- Complete request/response payloads (sanitized of PII)

- Changes made (for write operations)

- Justification/purpose (if provided in headers)

- Downstream impacts (cascading operations triggered)

Retain logs according to compliance requirements and business needs—typically 30-90 days for operational logs, longer for security and audit logs.
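A structured log line covering the minimum fields might be built like this. The field names are illustrative assumptions; the one deliberate design choice is logging a short fingerprint of the API key rather than the key itself, so the log never contains a usable credential.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(agent_id, api_key, method, endpoint, status, ip,
                 rate_limit_remaining, when=None) -> str:
    """Return one JSON log line describing a single agent request."""
    when = when or datetime.now(timezone.utc)
    return json.dumps({
        "ts": when.isoformat(),
        "agent_id": agent_id,
        # A truncated hash identifies the key without exposing it.
        "key_fingerprint": hashlib.sha256(api_key.encode("utf-8")).hexdigest()[:12],
        "method": method,
        "endpoint": endpoint,
        "status": status,
        "ip": ip,
        "rate_limit_remaining": rate_limit_remaining,
        "auth_failure": status == 401,
        "permission_denied": status == 403,
    }, sort_keys=True)
```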

How Do You Detect Anomalous Agent Behavior?

Through automated monitoring of deviation from established baselines.

Anomaly indicators:

- Sudden traffic volume increases (>200% of baseline)

- Unusual access patterns (endpoints never previously accessed)

- Geographic anomalies (requests from unexpected locations)

- Temporal anomalies (activity at unusual times)

- Credential sharing (same API key from multiple IPs simultaneously)

- Systematic scanning (sequential ID probing, directory enumeration)

- Error rate spikes (many 403s suggesting permission probing)

Implement automated alerting for these patterns with severity-based escalation. Minor anomalies trigger logging and increased monitoring. Major anomalies trigger temporary restrictions and security review.
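Several of these indicators reduce to simple checks over one time window of events for a single credential. The sketch below is deliberately naive: the event shape, flag names, and the thresholds for distinct IPs and 403 ratio are assumptions, and only the 200% volume threshold comes from the list above.

```python
def detect_anomalies(events, baseline_count, known_endpoints,
                     max_ips=3, forbidden_ratio=0.2):
    """Return a set of anomaly flags for one credential in one time window.

    Each event is a dict with "ip", "endpoint", and "status" keys.
    """
    flags = set()
    if not events:
        return flags
    # Volume above 200% of the historical baseline for this window.
    if len(events) > 2 * baseline_count:
        flags.add("volume_spike")
    # Endpoints this agent has never touched before.
    if {e["endpoint"] for e in events} - set(known_endpoints):
        flags.add("new_endpoint")
    # One credential used from many addresses at once.
    if len({e["ip"] for e in events}) > max_ips:
        flags.add("possible_credential_sharing")
    # A high share of 403 responses suggests permission probing.
    forbidden = sum(1 for e in events if e["status"] == 403)
    if forbidden / len(events) > forbidden_ratio:
        flags.add("permission_probing")
    return flags
```

The number of flags raised could then drive the severity-based escalation described above.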

Ahrefs’ security research indicates that automated anomaly detection catches 91% of malicious bot activity within 15 minutes of onset, compared to 6+ hours for manual detection.

Should You Provide Usage Dashboards to Agent Operators?

Yes—transparency builds trust and reduces support burden.

Give authenticated agent operators access to dashboards showing:

- Current rate limit usage and remaining quota

- Historical usage patterns and trends

- Error rates and common failure modes

- Performance metrics (latency, success rates)

- Upcoming quota resets or renewal dates

- Upgrade paths to higher tiers

This self-service visibility reduces “am I being rate limited?” support requests and helps operators optimize their integration without constantly hitting limits.

Managing Search Engine Crawlers

Should Search Engines Authenticate or Remain Anonymous?

Major search engines can remain anonymous with verification through other means.

Google, Bing, and established search engines have published IP ranges and verification methods. Requiring authentication would be onerous and unnecessary given existing verification options.

However, you should still:

- Verify claimed search engine bots through reverse DNS

- Monitor crawl patterns for anomalies

- Use robots.txt to guide, not restrict, crawling

- Implement crawl-delay directives if needed, bearing in mind that Googlebot ignores Crawl-delay while Bing and some other crawlers honor it

- Provide XML sitemaps for efficient crawling

Pro Tip: “Create separate robots.txt directives for different bots. Googlebot might get unlimited access while less critical crawlers get rate-limited sections or specific crawl-delay directives.” — John Mueller, Google Search Advocate

For emerging search engines or AI-powered search agents, consider requiring registration and API credentials for access beyond basic crawling.

How Do You Balance SEO Needs With Content Protection?

Allow generous crawling of public content while protecting proprietary data and preventing abuse.

Public content (blog, marketing pages): Free crawling, generous robots.txt, comprehensive sitemaps.

Product catalog: Crawlable but with rate limiting to prevent systematic scraping. Use pagination and AJAX loading to make systematic extraction harder.

Pricing and inventory: Provide to verified search engines but block scrapers. Consider search-specific markup that shows structured data without exposing full pricing APIs.

User-generated content: Balance discoverability with privacy—allow indexing of public profiles/reviews while protecting private data.

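Internal tools and dashboards: Put these behind authentication. A robots.txt Disallow only asks well-behaved crawlers to stay away and publicly lists the paths, so it cannot be the control that protects them.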

What About AI Search Engines and Answer Bots?

These require different treatment than traditional search crawlers.

Emerging AI search engines (Perplexity, You.com) and answer bots often need deeper access than traditional crawlers to understand context and relationships. Consider:

- Requiring authentication for AI agents to access structured data

- Providing dedicated API endpoints optimized for agent consumption

- Implementing usage-based licensing for commercial AI applications

- Using web search agreements that specify acceptable use

- Monitoring whether these agents properly attribute your content

Some organizations are implementing “AI crawler” robots.txt groups separately from traditional search engines, allowing differentiated policies.
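Differentiated robots.txt groups can be checked with Python’s built-in parser before deployment. The policy below is an invented example, with GPTBot standing in for an AI crawler; keep in mind that robots.txt is advisory and only constrains crawlers that choose to honor it.

```python
from urllib import robotparser

# Illustrative policy: separate groups for a search crawler, an AI crawler,
# and everyone else. Paths are placeholders.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /internal/
Allow: /

User-agent: GPTBot
Disallow: /internal/
Disallow: /pricing/
Crawl-delay: 10

User-agent: *
Disallow: /internal/
"""

parser = robotparser.RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_fetch(user_agent: str, path: str) -> bool:
    """Ask the parsed policy whether this crawler may request this path."""
    return parser.can_fetch(user_agent, path)
```

A crawler matches the most specific group naming it, so rules in the catch-all group do not automatically carry over to the named groups; shared rules such as the /internal/ exclusion have to be repeated.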

Integration With API-First and Agent-Accessible Architecture

Your AI agent authentication system forms the security layer protecting API-first content strategy and agent-accessible architecture from abuse.

Without proper authentication, open APIs meant to serve legitimate agents become attack vectors for scrapers and bad actors. Without accessible authentication, agent-friendly architectures become unusable due to excessive friction.

Think of agent-accessible architecture as the building design, API-first content as the valuable assets inside, and agent access control as the security system controlling who enters and what they can access.

Organizations succeeding with agent enablement implement authentication that:

- Welcomes legitimate agents with minimal friction

- Identifies agents for proper permission scoping

- Protects machine-readable content from unauthorized use

- Enables differentiated access based on business relationships

- Provides audit trails for compliance and optimization

Your authentication strategy should enhance, not hinder, the agent experiences you’ve carefully architected. Well-implemented bot authentication becomes an enabler of sophisticated agent interactions, not a barrier.

Common Authentication Mistakes and Security Pitfalls

Are You Trusting User-Agent Strings Alone?

This is the most common and dangerous authentication mistake.

User-agent strings are trivially spoofed. Any scraper can claim Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html) without actually being Googlebot.

Always verify claimed identity through additional signals:

- Reverse DNS lookup confirming ownership

- IP range validation against published lists

- Behavioral patterns matching known agent profiles

- Response to challenge requests (if suspicious)

Treat user-agent as a hint, never as authentication.

Why Do Shared API Keys Across Multiple Agents Cause Problems?

Because you lose individual agent accountability and can’t implement appropriate controls.

When multiple agents share credentials:

- You can’t track which agent caused issues

- Revoking access affects all agents using those credentials

- Usage attribution becomes impossible

- Rate limiting applies collectively, not individually

- Security incidents impact everyone sharing credentials

Issue unique credentials per agent, even for agents from the same organization. The granularity enables precise control and clear audit trails.

Pro Tip: “For organizations deploying multiple agents, provide account-level management where they can create sub-credentials for each agent. This maintains oversight while enabling individual agent tracking.” — Kin Lane, API Evangelist

Are You Storing Credentials Securely?

Agent credential theft is increasingly common—storage security is critical.

Never:

- Store API keys or secrets in plaintext databases

- Include credentials in client-side code or version control

- Transmit credentials over unencrypted connections

- Log full credentials in application logs

Always:

- Hash API keys before storage (one-way)

- Encrypt OAuth secrets and refresh tokens

- Use environment variables or secret management systems

- Rotate credentials after potential exposure

- Implement detection for leaked credentials in public repositories

GitHub’s secret scanning, AWS Secrets Manager, HashiCorp Vault—use appropriate tools for your scale.
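As a minimal sketch of the “hash before storage” rule, the functions below generate a key, keep only its SHA-256 digest, and verify presented keys in constant time. The function names and the agt prefix are hypothetical. A plain fast hash is reasonable here only because the key is a long random string; human-chosen passwords need a slow, salted algorithm instead.

```python
import hashlib
import hmac
import secrets


def hash_api_key(key: str) -> str:
    """One-way digest that is safe to store in the database."""
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def generate_api_key(prefix: str = "agt"):
    """Return (plaintext_key, stored_hash); show the plaintext to the user once."""
    key = f"{prefix}_{secrets.token_urlsafe(32)}"
    return key, hash_api_key(key)


def verify_api_key(presented: str, stored_hash: str) -> bool:
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(hash_api_key(presented), stored_hash)
```

A recognizable prefix also makes leaked keys easier for secret scanners to spot.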

Advanced Authentication Patterns

Should You Implement Step-Up Authentication for Sensitive Operations?

Yes—require additional verification for high-risk operations even from authenticated agents.

Standard operations (reading public data, search queries): Normal authentication sufficient.

Sensitive operations (financial transactions, data deletion, privilege escalation): Require additional verification—second factor, operation-specific token, or human approval.

This defense-in-depth approach limits damage from compromised credentials. An attacker with stolen API credentials might read data but can’t execute irreversible destructive operations.

What About Context-Aware Authentication?

Location, time, and behavioral context should influence authentication requirements.

Expected context: Agent from known IP range, during normal operating hours, requesting familiar resources → Normal authentication flow.

Unexpected context: Agent from new geographic location, outside business hours, requesting unusual resources → Challenge with additional verification or temporary restrictions.

Implement adaptive authentication that increases security requirements based on risk assessment, similar to how banking apps require additional verification for unusual transactions.

How Do You Handle Multi-Tenant Agent Scenarios?

When agents operate on behalf of multiple end users or organizations, implement delegation patterns.

Use OAuth 2.0’s authorization code flow or JWT claims to encode “agent X acting on behalf of user Y.” Your authorization logic then considers both the agent’s permissions and the user’s permissions.

Example: A scheduling agent with broad API access might still be restricted to only booking appointments for users who’ve granted it permission, not for all users universally.

This separation prevents privilege escalation while enabling powerful multi-user agent capabilities.
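The scheduling example can be sketched as a check that requires both layers to agree. The grant table, agent and user IDs, and permission names are invented for illustration; in practice the grants would come from stored OAuth consents or from claims inside the token.

```python
# (agent_id, user_id) -> permissions that user has delegated to that agent
USER_GRANTS = {
    ("scheduling_agent", "user_42"): {"book:appointments"},
}

# What each agent is allowed to do at all, regardless of user
AGENT_SCOPES = {
    "scheduling_agent": {"book:appointments", "read:calendars"},
}


def can_act_for(agent_id: str, user_id: str, permission: str) -> bool:
    """Allow an action only if the agent holds the scope AND the user granted it."""
    agent_ok = permission in AGENT_SCOPES.get(agent_id, set())
    user_ok = permission in USER_GRANTS.get((agent_id, user_id), set())
    return agent_ok and user_ok
```

Here the agent can book for user_42 but not for a user who never granted access, even though its own scopes are broad.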

Compliance and Legal Considerations

What About GDPR, CCPA, and Privacy Regulations?

Agent permissions must respect privacy regulations, especially when agents access personal data.

Data minimization: Only grant agents access to data necessary for their stated purpose. A shipping notification agent doesn’t need access to payment methods.

Consent verification: For agents acting on behalf of users, verify proper consent before granting access to personal information.

Data processing agreements: Commercial agents processing personal data on your behalf require formal agreements specifying data handling requirements.

Right to be forgotten: Implement mechanisms to revoke agent access to user data when users exercise deletion rights.

Audit trails: Maintain comprehensive logs of which agents accessed what personal data and when, required for regulatory compliance.

Should You Implement Usage Licensing Terms?

Absolutely—authentication alone doesn’t address intellectual property and usage rights.

Your API terms of service should specify:

- Acceptable use cases (research vs. commercial use)

- Attribution requirements for content derived from your APIs

- Restrictions on redistribution or resale of data

- Compliance with rate limits and technical restrictions

- Consequences for violations

Link these terms to the registration process—agents accept terms when obtaining credentials. This creates enforceable agreements beyond technical access control.

What About Export Controls and Geographic Restrictions?

Some content has legal restrictions on international distribution.

Implement geo-fencing that restricts agent access based on:

- Agent registration country

- Request origin IP geolocation

- Declared agent purpose and jurisdiction

- Content licensing and export regulations

For example, some medical data, encryption technology, or licensed media content has export restrictions requiring geographic access controls.

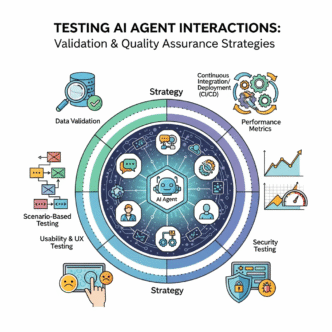

Testing and Validation

How Do You Test Authentication Implementation?

Through comprehensive automated testing covering positive and negative scenarios.

Positive tests:

- Valid credentials grant appropriate access

- Different authentication methods work correctly

- Token refresh operates properly

- Rate limits reset as expected

Negative tests:

- Invalid credentials are rejected

- Expired tokens fail appropriately

- Insufficient permissions prevent access

- Rate limit violations return correct errors

- Revoked credentials immediately stop working

Security tests:

- Credential stuffing attempts are blocked

- Brute force attacks trigger lockouts

- SQL injection in authentication endpoints fails safely

- Token tampering is detected

- Replay attacks are prevented

Build these tests into CI/CD pipelines—authentication regressions are security incidents waiting to happen.

Should You Provide Testing Environments for Agent Developers?

Yes—sandbox environments reduce production incidents and support burden.

Provide dedicated testing environments where:

- Agent developers can experiment freely

- Rate limits are relaxed or eliminated

- Test credentials work identically to production

- Realistic sample data enables comprehensive testing

- Breaking changes can be previewed before production deployment

This lets developers validate integrations without risking production access issues or hitting real rate limits during development.

What About Penetration Testing for Agent Authentication?

Regular security audits specifically targeting authentication systems are essential.

Commission penetration testing that evaluates:

- Credential storage security

- Authentication bypass vulnerabilities

- Authorization logic flaws

- Token lifecycle security

- API abuse potential

- Privilege escalation risks

Annual testing minimum, more frequently after major authentication system changes.

FAQ: AI Agent Authentication & Permissions

What’s the difference between authentication and authorization for AI agents?

Authentication verifies agent identity: “who are you?” Authorization determines permissions: “what can you do?” An agent authenticates with API credentials, then authorization logic checks whether that specific agent can perform requested operations. You might authenticate hundreds of agents but authorize them differently based on their tier, purpose, and relationship. For example, all authenticated agents might read public products, but only premium partners can access real-time inventory APIs or make price modifications.

How do I handle agents that refuse to identify themselves?

Treat them as anonymous with minimal access rights—read-only public content, strict rate limits, no privileged operations. If anonymous agents are causing problems (excessive traffic, scraping), implement progressive challenges: CAPTCHA for suspicious patterns, temporary IP blocks for abuse, or complete blocking if necessary. However, ensure your challenges don’t break legitimate accessibility tools or search engines with verified identities via reverse DNS/IP validation.

Should I charge for API access or provide it free to all authenticated agents?

Depends on your business model and content value. Free tiers with rate limits work for public goods, community building, and driving adoption. Paid tiers make sense for: high-volume commercial use, access to proprietary data, write operations, real-time data, or premium support. Many successful models offer generous free tiers for experimentation and small-scale use, transitioning to paid tiers for commercial scale. The key is alignment with how agents create value—if they drive sales, free access might be optimal; if they extract valuable data, licensing makes sense.

How do I prevent authenticated agents from sharing their credentials with unauthorized users?

Technical and contractual controls working together. Technically: monitor for credential usage from multiple IPs simultaneously, detect unusual traffic patterns, implement device fingerprinting, require IP allowlists for high-value credentials, and use short-lived tokens requiring frequent renewal. Contractually: terms of service prohibiting credential sharing, audit rights to verify compliance, and consequences for violations. For high-value partnerships, include credential sharing detection as a contract term with specific remedies.

What happens when an agent’s credentials are compromised?

Immediate revocation and damage assessment. Your incident response should: (1) Revoke compromised credentials immediately, (2) Issue new credentials to legitimate agent operator, (3) Audit all activity from compromised credentials for unauthorized access, (4) Notify affected parties if personal data was accessed, (5) Review how compromise occurred and fix security gaps. Implement automated detection for leaked credentials in public repositories (GitHub scanning) and Dark Web monitoring services for credential marketplaces.

How do I balance security with ease of agent integration?

Progressive trust with friction matched to risk. Make initial registration easy—self-service account creation, instant API keys for limited free tier, comprehensive documentation. Increase security requirements as access level grows—OAuth for commercial tiers, mTLS for sensitive operations, contracts for premium access. Provide excellent developer experience (SDKs, clear error messages, testing environments) so security measures enhance rather than obstruct legitimate use. Security and usability aren’t opposites when implemented thoughtfully.

Final Thoughts

AI agent authentication isn’t about building walls—it’s about building doors with good locks and helpful signage.

The agent economy is inevitable. Autonomous systems will mediate increasing percentages of web interactions, transactions, and content consumption. Your choice isn’t whether to serve agents, but how to serve them wisely.

Organizations thriving in this landscape don’t see agents as threats to be blocked or opportunities to be exploited indiscriminately. They see them as partners requiring appropriate access controls—welcoming legitimate agents while protecting against abuse.

Start simple. Implement basic API key authentication with tiered access. Build from there based on your specific needs—OAuth for commercial partners, mTLS for sensitive operations, usage analytics for optimization.

Monitor continuously. Adapt based on actual agent behavior, not theoretical concerns. Provide great developer experience for legitimate agent operators while maintaining vigilance against abuse.

Your content has value. Managing bot access through proper authentication ensures that value reaches agents who respect it, compensate for it, and use it appropriately—while excluding those who don’t.

Authenticate wisely. Authorize precisely. The future of content distribution depends on it.

Citations

Gartner Press Release – API Security Trends

SEMrush Blog – Bot Traffic Statistics

Ahrefs Blog – Bot Traffic Analysis

Cloudflare Learning Center – Bot Management

Ahrefs Blog – Website Security Guide

Google Search Central – Verifying Googlebot

Kin Lane – API Evangelist

Phil Sturgeon – API Security Best Practices
