Your graphical interface is gorgeous. To conversational AI agents, it’s a locked door.

While you’ve invested thousands in polished UIs, intuitive navigation, and delightful micro-interactions, autonomous agents are trying to accomplish tasks through natural language—and hitting walls everywhere. They can’t “click the blue button in the top right,” they can’t “scroll down to see more options,” and they definitely can’t appreciate your award-winning parallax animations.

The conversational interfaces that agents need aren’t just chatbots slapped onto existing websites. They’re fundamentally different interaction paradigms in which agents communicate intent through language and receive structured responses they can parse, understand, and act upon. And if your digital properties only speak “click,” you’re invisible to the fastest-growing segment of web traffic.

What Are Conversational Interfaces for AI Agents?

The conversational interfaces that agents interact with are natural-language communication layers that translate between human language expressions and machine-executable actions.

They’re the bridge between “I need to find a blue ergonomic chair under $300 with good lumbar support” and the API calls, database queries, and business logic necessary to fulfill that request. Unlike traditional CLIs (command-line interfaces) that require precise syntax, conversational interfaces understand intent despite variation, ambiguity, and context.

AI agent interfaces combine several components:

- Natural language understanding (parsing intent from text)

- Dialog management (maintaining conversation context)

- Action execution (translating intent to system operations)

- Response generation (creating understandable replies)

- State management (tracking multi-turn interactions)

According to Gartner’s 2024 conversational AI report, 70% of customer service interactions will involve conversational AI by 2026, yet only 42% of organizations have architected their systems to support agent-driven conversations.

Why Traditional UIs Fail Conversational Agents

Point-and-click doesn’t translate to natural language.

Your website assumes users can see buttons, interpret icons, scroll through lists, and navigate multi-step wizards. Conversational UI agents can’t do any of these things—they understand language-based requests and expect language-based responses.

| Traditional GUI | Conversational Interface |
|---|---|
| Visual navigation required | Language-based intent expression |
| State captured in UI elements | State maintained in conversation history |
| Users browse and discover | Agents query and request |
| Click-based interactions | Command-based interactions |
| Visual feedback (highlights, animations) | Textual or structured confirmations |

A SEMrush study from late 2024 found that 73% of AI agents attempting e-commerce transactions fail because websites lack conversational interaction endpoints, despite having comprehensive product catalogs accessible via traditional interfaces.

The problem isn’t missing functionality—it’s missing conversational access to existing functionality.

Core Principles of Agent-Friendly Conversational Design

Should Conversational Interfaces Mirror Your GUI?

No—they should expose the same capabilities through language-appropriate patterns.

Your GUI might have a multi-step checkout process with five pages of forms, dropdowns, and checkboxes. The conversational equivalent shouldn’t be “Page 1: What’s your shipping address?” → “Page 2: What’s your billing address?” → etc.

Instead: “I can help you complete checkout. I’ll need your shipping address, billing information, and payment method. You can provide these all at once or step-by-step—whichever you prefer.”

Conversational architecture should optimize for:

- Natural information flow (not form field sequences)

- Progressive disclosure (gathering information as needed)

- Flexibility in input order (address before payment vs. payment before address)

- Clarification dialogs when ambiguity exists

- Confirmation summaries before irreversible actions

How Important Is Context Awareness?

Critical—conversations without context are frustrating question-answer loops.

When an agent asks “What’s the status of my order?” they shouldn’t need to specify which order if they’ve been discussing Order #12345 for the past ten interactions. Context-aware agent interaction design maintains:

Conversation history: Previous queries, provided information, discussed entities

Session state: Active shopping cart, search filters, current focus

User/Agent profile: Preferences, past behavior, authorization level

Environmental context: Current time/date, location, urgency indicators

Pro Tip: “Implement conversation memory with explicit context windowing. Keep the last 5-10 turns in active context, summarize older context, and allow explicit context reset when agents switch topics.” — Rasa Team, Conversational AI Framework
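The windowing idea in the tip above can be sketched in a few lines. This is a minimal illustration, not a framework API: the `ConversationMemory` class, its field names, and the string-truncating `_summarize` placeholder are all assumptions made for the example, and a production system would likely call a summarization model instead.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationMemory:
    """Keeps recent turns verbatim and folds older turns into a summary."""
    max_active_turns: int = 8  # assumed value inside the 5-10 turn range
    active_turns: list = field(default_factory=list)
    summary: str = ""

    def add_turn(self, speaker: str, text: str) -> None:
        self.active_turns.append((speaker, text))
        # Move turns that fall outside the window into the summary.
        while len(self.active_turns) > self.max_active_turns:
            old_speaker, old_text = self.active_turns.pop(0)
            self.summary = self._summarize(self.summary, old_speaker, old_text)

    @staticmethod
    def _summarize(summary: str, speaker: str, text: str) -> str:
        # Placeholder only: truncates the turn instead of really summarizing it.
        snippet = f"{speaker}: {text[:40]}"
        return f"{summary} | {snippet}" if summary else snippet

    def reset(self) -> None:
        """Explicit context reset, e.g. when the agent switches topics."""
        self.active_turns.clear()
        self.summary = ""


memory = ConversationMemory(max_active_turns=2)
memory.add_turn("agent", "I'm looking for office chairs.")
memory.add_turn("system", "What's your budget range?")
memory.add_turn("agent", "Under $300.")
```

After the third turn, the oldest turn has moved out of `active_turns` and into `summary`, so the active window stays bounded while older context is still available in condensed form.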

What About Multi-Turn Conversation Flows?

Design for them explicitly—most agent tasks require multiple interaction turns.

Single-turn: “What’s the price of Product X?” → “Product X costs $49.99.”

Multi-turn: “I’m looking for office chairs.” → “I found 127 office chairs. What’s your budget range?” → “Under $300.” → “Do you prefer mesh or upholstered?” → “Mesh.” → “I’ve narrowed it to 23 mesh office chairs under $300. Would you like them sorted by price or customer rating?”

Multi-turn flows require:

- Slot filling strategies (collecting required information across turns)

- Clarification mechanisms (handling insufficient or ambiguous input)

- Confirmation patterns (verifying understanding before actions)

- Repair mechanisms (fixing misunderstandings gracefully)

- Topic switching support (handling interruptions and diversions)
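As a rough sketch of slot filling across turns, the function below asks for the first required slot that is still missing. The slot names and prompt wording are hypothetical and simply mirror the chair-shopping dialog above.

```python
from typing import Optional

# Hypothetical required slots for a product search, in the order to ask for them.
REQUIRED_SLOTS = ["product_type", "price_max", "material"]

PROMPTS = {
    "product_type": "What kind of product are you looking for?",
    "price_max": "What's your budget range?",
    "material": "Do you prefer mesh or upholstered?",
}


def next_prompt(filled_slots: dict) -> Optional[str]:
    """Return the question for the first missing slot, or None when all are filled."""
    for slot in REQUIRED_SLOTS:
        if slot not in filled_slots:
            return PROMPTS[slot]
    return None


slots = {}
slots.update({"product_type": "office chair"})  # turn 1
slots.update({"price_max": 300})                # turn 2
question = next_prompt(slots)                   # asks about material next
```

Because the function only looks at which slots are missing, an agent that supplies several values in one turn skips the corresponding questions automatically.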

Implementing Natural Language Understanding

How Do You Parse Intent from Agent Utterances?

Through NLU (Natural Language Understanding) layers that extract meaning from text.

Intent classification: Categorizing what the agent wants to do (search_products, check_order_status, update_account, make_purchase)

Entity extraction: Identifying specific values mentioned (product_name=”ergonomic chair”, price_max=300, color=”blue”)

Slot filling: Mapping extracted entities to required parameters for actions

Modern approaches use transformer-based models (BERT, GPT variants) fine-tuned on domain-specific training data. Platforms like Rasa, Dialogflow, and Amazon Lex provide pre-built NLU capabilities.

Illustrative output in the style of an NLU pipeline such as Rasa’s:

Input: "I need a blue mesh chair under 300 dollars"

Intent: search_products (confidence: 0.97)

Entities:

- product_type: "chair"

- material: "mesh"

- color: "blue"

- price_max: 300

- currency: "USD"
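To make the entity-extraction step concrete, here is a deliberately simple keyword and regex extractor. It is a toy stand-in, not the transformer-based approach described above and not Rasa's API; the vocabularies, the `extract_entities` name, and the "under N" price pattern are assumptions for illustration.

```python
import re

# Hypothetical vocabularies; a real NLU model would learn these from training data.
VOCAB = {
    "product_type": {"chair", "desk", "lamp"},
    "material": {"mesh", "leather", "fabric"},
    "color": {"blue", "black", "red"},
}
PRICE_MAX = re.compile(r"\bunder\s+\$?(\d+)")


def extract_entities(utterance: str) -> dict:
    """Pick out known keywords and an 'under N' price ceiling."""
    text = utterance.lower()
    entities = {}
    for word in re.findall(r"[a-z]+", text):
        for entity, values in VOCAB.items():
            if word in values:
                entities[entity] = word
    match = PRICE_MAX.search(text)
    if match:
        entities["price_max"] = int(match.group(1))
    return entities


entities = extract_entities("I need a blue mesh chair under 300 dollars")
```

A lookup like this breaks on plurals, synonyms, and paraphrases, which is exactly the gap that trained NLU models are meant to close.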

Should You Support Multiple Languages and Dialects?

If your agents serve global markets—absolutely.

Implement one of the following:

- Multi-lingual models trained on parallel corpora

- Translation layers (translate to primary language → process → translate response back)

- Language-specific NLU models with shared dialog management

According to Statista’s 2024 language usage data, English speakers make up only about 25% of internet users, yet many conversational interfaces support only English, leaving roughly 75% of potential agent interactions unserved.

Consider regional variations too—American English “trunk” vs. British English “boot” for cars, “elevator” vs. “lift,” etc.

What About Handling Ambiguity and Clarification?

Build explicit clarification dialogs rather than guessing agent intent.

Ambiguous input: “I want to change my order.”

Poor handling: Assumes they mean cancel order, proceeds with cancellation

Good handling: “I can help with order changes. Would you like to:

- Modify items in your order

- Change shipping address

- Update delivery timeframe

- Cancel the order

Please let me know which change you need.”

When entity values are ambiguous (“blue” could be navy, royal blue, light blue), ask for clarification rather than guessing. When multiple intents seem equally likely, present options.

Pro Tip: “Confidence thresholds matter. If your NLU returns confidence below 0.75, trigger clarification dialogs. Between 0.75-0.85, proceed but confirm understanding. Above 0.85, execute directly with implicit confirmation.” — Botpress Conversational AI Best Practices
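The thresholds in the tip above translate directly into a small routing function. This is a sketch under the assumption that the NLU layer returns a confidence between 0 and 1; the function name and the returned labels are illustrative.

```python
CLARIFY_BELOW = 0.75   # below this, ask the agent what it meant
CONFIRM_UP_TO = 0.85   # up to and including this, proceed but confirm


def route_by_confidence(confidence: float) -> str:
    """Map an NLU confidence score to a dialog strategy."""
    if confidence < CLARIFY_BELOW:
        return "clarify"
    if confidence <= CONFIRM_UP_TO:
        return "confirm"
    return "execute"
```

The exact cut-offs are worth tuning against real conversation logs, since a well-calibrated model and a poorly calibrated one can report very different scores for the same utterance.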

Dialog Management and State Tracking

How Should Conversation State Be Maintained?

Through explicit state machines or ML-based dialog managers that track conversation progress.

State-based approach:

- Define conversation states (greeting → information_gathering → confirmation → execution → completion)

- Track current state and valid transitions

- Maintain slot values across states

- Handle state-specific logic and responses

ML-based approach:

- Train models on conversation examples

- Predict next appropriate action based on conversation history

- Handle unexpected turns more gracefully

- Require more training data but adapt better

Most production systems use hybrid approaches—state machines for well-defined flows (checkout, account creation) with ML fallbacks for unexpected inputs.
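A state-based dialog manager can be as small as a transition table plus a guard. The sketch below uses the five states named above; the specific set of allowed transitions (for example, going back from confirmation to information gathering) is an assumption rather than a prescribed design.

```python
# Allowed transitions between conversation states (assumed for illustration).
TRANSITIONS = {
    "greeting": {"information_gathering"},
    "information_gathering": {"information_gathering", "confirmation"},
    "confirmation": {"information_gathering", "execution"},
    "execution": {"completion"},
    "completion": set(),
}


class DialogStateMachine:
    """Tracks the current state and slot values, rejecting invalid transitions."""

    def __init__(self) -> None:
        self.state = "greeting"
        self.slots: dict = {}

    def transition(self, new_state: str) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"Invalid transition: {self.state} -> {new_state}")
        self.state = new_state


dialog = DialogStateMachine()
dialog.transition("information_gathering")
dialog.slots["product_type"] = "chair"
dialog.transition("confirmation")
```

In a hybrid system, the `ValueError` branch is a natural place to hand control to an ML-based fallback instead of failing the turn.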

Should You Implement Conversation Memory?

Yes—short-term working memory and long-term contextual memory.

Short-term memory (session):

- Current conversation context (last 5-10 turns)

- Active slot values

- Current intent and sub-goals

- Pending confirmations or clarifications

Long-term memory (persistent):

- Agent preferences and history

- Past transactions and interactions

- Known entity relationships (this agent represents Organization X)

- Communication style preferences

Ahrefs’ conversational interface study found that conversations with proper memory context see 67% higher task completion rates and 43% fewer clarification cycles compared to memoryless interactions.

What About Handling Context Switches?

Support graceful topic changes while preserving important context.

Agent: “I’m looking for blue chairs under $300.”

System: “I found 23 options. Would you like to see them sorted by price?”

Agent: “Actually, what’s my order status first?”

System: “Your order #12345 shipped yesterday. Tracking: [link]. Would you like to return to chair shopping, or do you need more help with your order?”

Implement:

- Context stacking (push current context, start new context, pop back when done)

- Explicit topic markers (“returning to chair search…”)

- Restoration of previous state when returning to abandoned topics

- Timeout-based context expiration for abandoned threads
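Context stacking with timeout-based expiration might look like the following. The `ContextStack` class, its tuple layout, and the 30-minute default are assumptions for the sketch; timestamps can be injected through `now` so the behaviour is easy to test.

```python
import time


class ContextStack:
    """Stack of (topic, state, last_touched) entries with expiry of stale topics."""

    def __init__(self, ttl_seconds: float = 1800.0) -> None:
        self.ttl_seconds = ttl_seconds
        self._stack: list = []

    def push(self, topic: str, state: dict, now: float = None) -> None:
        """Start a new topic on top of whatever was being discussed."""
        self._stack.append((topic, state, time.time() if now is None else now))

    def pop(self, now: float = None):
        """Finish the current topic and return the previous one if still fresh."""
        now = time.time() if now is None else now
        if self._stack:
            self._stack.pop()
        # Discard abandoned topics that have exceeded the time-to-live.
        while self._stack and now - self._stack[-1][2] > self.ttl_seconds:
            self._stack.pop()
        if not self._stack:
            return None
        topic, state, _ = self._stack[-1]
        self._stack[-1] = (topic, state, now)  # resuming refreshes the timestamp
        return topic, state


stack = ContextStack(ttl_seconds=100)
stack.push("chair_search", {"color": "blue", "price_max": 300}, now=0)
stack.push("order_status", {"order_id": "12345"}, now=10)
resumed = stack.pop(now=20)
```

When `pop` returns a topic, the system can emit an explicit marker such as “returning to chair search…” before continuing; when it returns `None`, the earlier thread has expired and the conversation starts fresh.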

Action Execution and API Integration

How Do Conversational Interfaces Trigger Backend Actions?

Through intent-to-action mapping layers that translate conversational intents to system operations.

Intent mapping:

Intent: search_products

Required slots: product_type

Optional slots: color, price_max, brand, material

Action: API call to /products/search with parameters

Response template: "I found {count} {product_type}s matching your criteria..."

Execution flow:

- Parse agent utterance → extract intent and entities

- Validate required slots are filled (trigger slot-filling if missing)

- Map to appropriate API endpoint/function

- Execute with extracted parameters

- Handle API response (success/error/partial)

- Generate natural language response

- Update conversation state

For complex operations, implement transaction patterns that can roll back on failure and provide clear error messages.
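The execution flow above can be sketched as a small registry that maps each intent to its required slots, an action, and a response template. Everything here is illustrative: `IntentSpec`, `handle`, and the `search_products` stub (which stands in for a real call to `/products/search` and returns a fixed count) are assumptions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class IntentSpec:
    required_slots: tuple
    action: Callable[..., dict]
    response_template: str


def search_products(**params) -> dict:
    # Stub standing in for an API call to /products/search.
    return {"count": 23}


INTENTS = {
    "search_products": IntentSpec(
        required_slots=("product_type",),
        action=search_products,
        response_template="I found {count} {product_type}s matching your criteria.",
    ),
}


def handle(intent: str, slots: dict) -> str:
    """Validate slots, run the mapped action, and render a reply."""
    spec = INTENTS.get(intent)
    if spec is None:
        return "I can't help with that request."
    missing = [slot for slot in spec.required_slots if slot not in slots]
    if missing:
        return f"I still need: {', '.join(missing)}."  # trigger slot filling
    try:
        result = spec.action(**slots)
    except Exception:
        return "Something went wrong while processing your request. Please try again."
    return spec.response_template.format(**{**slots, **result})


reply = handle("search_products", {"product_type": "chair", "color": "blue"})
```

Keeping the mapping in data rather than in branching code makes it straightforward to add intents and to audit which backend operations are reachable conversationally.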

Should You Expose All Backend Functionality Conversationally?

Focus on high-value, frequently-used operations first—not comprehensive coverage.

Priority operations for conversational access:

- Search and discovery (products, content, services)

- Status checking (orders, accounts, tickets)

- Simple transactions (purchases, bookings, submissions)

- Information retrieval (FAQs, documentation, policies)

- Account management (updates, preferences, settings)

Lower priority or GUI-only:

- Complex multi-step configurations

- Visual design/layout tasks

- Bulk operations requiring spreadsheet-like interfaces

- Highly visual tasks (photo editing, graphic design)

SEMrush’s conversational AI ROI study shows that focusing conversational interfaces on the top 20% of use cases delivers 80% of the total value while requiring only 30% of the development effort.

What About Handling API Errors Conversationally?

Translate technical errors into actionable natural language with recovery paths.

Technical error: {"error": "INSUFFICIENT_INVENTORY", "product_id": 12345, "available": 3, "requested": 5}

Poor conversational handling: “Error: INSUFFICIENT_INVENTORY”

Good conversational handling: “I’m sorry, we only have 3 units of this item in stock currently, but you requested 5. Would you like to:

- Purchase the 3 available units now

- Be notified when more stock arrives

- Look at similar products with better availability”

Provide context, explain implications, and offer alternatives rather than just reporting errors.
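A minimal sketch of that translation step, using the `INSUFFICIENT_INVENTORY` payload shown above. The `explain_error` function and the generic fallback wording are assumptions; a real system would cover each error code its API can return.

```python
def explain_error(error: dict) -> str:
    """Turn a structured API error into an actionable message."""
    code = error.get("error")
    if code == "INSUFFICIENT_INVENTORY":
        available = error["available"]
        requested = error["requested"]
        return (
            f"We only have {available} units of this item in stock, "
            f"but you requested {requested}. Would you like to purchase the "
            f"{available} available units, be notified when more stock arrives, "
            "or look at similar products?"
        )
    # Unknown codes still get a recovery path instead of a raw error string.
    return "Something went wrong on our side. Would you like to try again?"


message = explain_error(
    {"error": "INSUFFICIENT_INVENTORY", "product_id": 12345, "available": 3, "requested": 5}
)
```

For agent consumers, it is usually worth returning the original error code alongside the message so the agent can branch on it without parsing text.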

Response Generation and Natural Language Output

How Should Responses Be Structured for Agent Consumption?

Dual format—natural language for comprehension plus structured data for action.

{
  "response": {
    "text": "I found 23 mesh office chairs under $300. The top-rated option is the ErgoChair Pro at $279.99 with 4.5 stars from 1,247 reviews.",
    "structured_data": {
      "result_count": 23,
      "top_result": {
        "product_id": "12345",
        "name": "ErgoChair Pro",
        "price": 279.99,
        "currency": "USD",
        "rating": 4.5,
        "review_count": 1247
      },
      "actions": [
        {"type": "view_product", "label": "View details", "product_id": "12345"},
        {"type": "add_to_cart", "label": "Add to cart", "product_id": "12345"},
        {"type": "see_alternatives", "label": "See other options"}
      ]
    }
  }
}

The text serves human readability (useful when agents summarize for users). The structured data enables programmatic action without parsing natural language.

Should Responses Be Templated or Generated Dynamically?

Hybrid approach—templates for consistency, generation for flexibility and personalization.

Template-based (when appropriate):

- Consistent messaging for common scenarios

- Regulatory or legal language requiring precision

- Error messages needing standard phrasing

- Confirmation messages with variable substitution

Generated (when beneficial):

- Personalized responses based on agent history

- Complex explanations requiring context-specific details

- Empathetic responses to frustration or confusion

- Creative or marketing copy

Modern systems use template-based responses with GPT-layer enhancements for natural variation while maintaining accuracy.

Pro Tip: “Never use pure LLM generation for responses involving prices, legal terms, medical advice, or contractual commitments. Use templates with validated variable insertion for anything where accuracy is legally or financially critical.” — Anthropic’s Claude Best Practices
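One way to follow the tip above is to render price statements from a fixed template and validate the value before it is inserted. The sketch uses Python's standard `string.Template` and `decimal.Decimal`; the template wording and the `render_price` helper are assumptions.

```python
from decimal import Decimal, InvalidOperation
from string import Template

# "$$" is a literal dollar sign; "${price}" is the substituted value.
PRICE_TEMPLATE = Template("$name costs $$${price}.")


def render_price(name: str, price) -> str:
    """Insert a validated, two-decimal price into a fixed template."""
    try:
        amount = Decimal(str(price))
    except InvalidOperation:
        raise ValueError(f"Invalid price: {price!r}")
    if not amount.is_finite() or amount < 0:
        raise ValueError(f"Invalid price: {price!r}")
    return PRICE_TEMPLATE.substitute(name=name, price=f"{amount:.2f}")


sentence = render_price("ErgoChair Pro", 279.99)
```

A generated response can still wrap this sentence with friendlier phrasing, as long as the validated portion is inserted verbatim rather than regenerated.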

What About Handling Multi-Step Confirmations?

Implement explicit confirmation patterns before irreversible or high-value actions.

Low-risk actions (search, information retrieval): Implicit confirmation or none

Medium-risk actions (adding to cart, updating preferences): Optional confirmation, for example: “I’ve added the ErgoChair Pro to your cart. Ready to check out or continue shopping?”

High-risk actions (purchases, deletions, privacy changes): Explicit confirmation with summary, for example:

“Please confirm your order:

- ErgoChair Pro – $279.99

- Shipping to: 123 Main St, Anytown

- Payment: Visa ending in 1234

- Total: $304.98

Reply ‘confirm’ to complete purchase or ‘cancel’ to review.”

Never execute irreversible actions without explicit confirmation in a conversational interface: agents cannot “undo” a step as easily as users of a GUI with visual feedback.
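The three risk tiers can be enforced with a small gate in front of action execution. The intent names and their risk assignments below are hypothetical; the one deliberate design choice is that unknown intents default to high risk.

```python
# Hypothetical risk classification per intent.
RISK_BY_INTENT = {
    "search_products": "low",
    "add_to_cart": "medium",
    "make_purchase": "high",
    "delete_account": "high",
}


def requires_explicit_confirmation(intent: str) -> bool:
    """Unknown intents are treated as high risk to fail safe."""
    return RISK_BY_INTENT.get(intent, "high") == "high"


def execute_if_confirmed(intent: str, agent_reply: str = "") -> str:
    """Only run high-risk intents after the agent replies 'confirm'."""
    confirmed = agent_reply.strip().lower() == "confirm"
    if requires_explicit_confirmation(intent) and not confirmed:
        return "pending_confirmation"
    return "executed"
```

Medium-risk intents execute immediately here; the optional confirmation for them would be part of the response text rather than a gate.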

Building Multi-Modal Conversational Experiences

Should Conversational Interfaces Be Text-Only?

No—modern conversational UI agents benefit from rich media and structured options.

Pure text:

Agent: "Show me office chairs"

System: "I found 127 office chairs. The top options are the ErgoChair Pro at $279.99, the ComfortSeat Elite at $245, and the DeskMaster 3000 at $189. Would you like details on any of these?"

Rich multi-modal:

Agent: "Show me office chairs"

System: [Text] "I found 127 office chairs. Here are the top 3:"

[Structured cards with images]

- ErgoChair Pro | $279.99 | ★★★★½ | [View] [Buy]

- ComfortSeat Elite | $245 | ★★★★ | [View] [Buy]

- DeskMaster 3000 | $189 | ★★★½ | [View] [Buy]

[Quick actions] Filter by price | Sort by rating | Show more

The second provides faster decision-making through visual information while maintaining conversational flow.

How Do You Handle Visual Content in Conversations?

Embed images, videos, and structured visualizations with textual descriptions for accessibility.

For product images:

{
  "type": "image",
  "url": "https://example.com/chair.jpg",
  "alt": "ErgoChair Pro - black mesh office chair with adjustable lumbar support and armrests",
  "caption": "ErgoChair Pro in black mesh",
  "actions": [
    {"type": "view_360", "label": "See 360° view"},
    {"type": "view_details", "label": "View full details"}
  ]
}

This serves both visual agents (can display images) and text-only agents (can use alt text and descriptions).

What About Voice-Based Conversational Interfaces?

Designing for voice requires different patterns than designing for text.

Text-optimized: “I found 23 results. The top option is Product A at $279.99, followed by Product B at $245, Product C at $189…”

Voice-optimized: “I found 23 chairs. The most popular is the ErgoChair Pro at two hundred eighty dollars. Would you like to hear more about it, or should I suggest other options?”

Voice considerations:

- Shorter responses (attention spans are limited)

- Avoid long lists (present 2-3 options, not 10)

- Use SSML markup for pronunciation and pacing

- Provide audio feedback (confirmations, progress indicators)

- Support interruption and barge-in

According to Statista’s voice assistant data, 67% of voice-based agent interactions fail when systems don’t optimize for voice-specific constraints.

Security and Authentication in Conversational Interfaces

How Do You Authenticate Agents Conversationally?

Through session tokens, OAuth flows, or cryptographic challenges—not passwords spoken in conversation.

Poor approach:

System: "What's your password?"

Agent: "MyPassword123!"

System: "Authenticated."

Better approach:

System: "To access your account, please provide your API token."

Agent: [Provides token in structured header, not conversation text]

System: "Authenticated as Agent X. How can I help?"

For conversational authentication, use:

- Pre-established session tokens (from initial API handshake)

- OAuth flows completed outside conversation

- Challenge-response patterns (not password disclosure)

- MFA codes when necessary (time-limited, one-time use)

Never ask agents to provide passwords or secrets in conversational text where they might be logged or exposed.
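A challenge-response pattern lets an agent prove it holds a secret without ever sending the secret itself. The sketch below uses Python's standard `hmac`, `hashlib`, and `secrets` modules; the function names and the assumption of a pre-shared secret established outside the conversation are illustrative, and one-time-use tracking of challenges is left out for brevity.

```python
import hashlib
import hmac
import secrets


def issue_challenge() -> str:
    """Server side: generate a random nonce for the agent to sign."""
    return secrets.token_hex(16)


def sign_challenge(shared_secret: bytes, challenge: str) -> str:
    """Agent side: sign the nonce with the pre-shared secret."""
    return hmac.new(shared_secret, challenge.encode(), hashlib.sha256).hexdigest()


def verify_response(shared_secret: bytes, challenge: str, response: str) -> bool:
    """Server side: recompute the signature and compare in constant time."""
    expected = sign_challenge(shared_secret, challenge)
    return hmac.compare_digest(expected, response)


secret = b"pre-shared-secret-from-api-handshake"  # hypothetical value
challenge = issue_challenge()
response = sign_challenge(secret, challenge)
```

Only the challenge and the signed response cross the wire, so neither reveals the secret if a conversation log is exposed.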

Should Different Agents Have Different Conversational Permissions?

Absolutely—authorization should limit what agents can accomplish conversationally.

Public agent: Can search products, view prices, get general information

Authenticated customer agent: Above plus order status, account management, purchases

Administrative agent: Above plus inventory management, user administration, system configuration

Implement intent-level and entity-level permissions:

def authorize(intent, agent):
    """Return a refusal message, or None if the agent may proceed."""
    if intent == "modify_order":
        if not agent.is_authenticated():
            return "You need to authenticate to modify orders."
        if not agent.has_permission("order:modify"):
            return "Your account doesn't have permission to modify orders."
    # No objection: proceed with modification
    return None

What About Sensitive Information in Conversation Logs?

Sanitize logs and implement retention policies appropriate to data sensitivity.

Never log in plaintext:

- Payment information (card numbers, CVVs)

- Authentication credentials (passwords, tokens)

- Personal identifiers (SSNs, license numbers)

- Health information (medical conditions, medications)

Log with redaction:

Agent input: "My credit card is [REDACTED]"

System response: "Payment method ending in [REDACTED] has been added"

Implement automatic PII detection and redaction before logging. Retain logs only as long as legally required or operationally necessary.
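As a rough illustration of redaction before logging, the patterns below catch card-like digit runs and US Social Security numbers. They are simplified assumptions, not a complete PII detector: real deployments typically combine pattern matching with checksum validation and dedicated detection services.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
# US SSN in the common 3-2-4 hyphenated form.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Replace card-like and SSN-like sequences before the text is logged."""
    text = CARD_PATTERN.sub("[REDACTED]", text)
    return SSN_PATTERN.sub("[REDACTED]", text)


logged = redact("My credit card is 4111 1111 1111 1111")
```

Running redaction at the logging boundary, rather than inside each handler, keeps a single place to audit and extend as new sensitive fields appear.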

Testing and Optimization

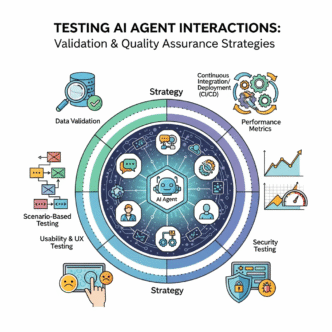

How Do You Test Conversational Interfaces Effectively?

Through a combination of automated testing and conversation simulation.

Unit tests: Individual intent classification, entity extraction, slot filling

Integration tests: End-to-end conversation flows for common scenarios

Regression tests: Ensure changes don’t break existing conversation patterns

Chaos tests: Unexpected inputs, malformed requests, out-of-domain queries

User testing: Real agents attempting real tasks (or simulations)

Build conversation test suites:

# Each turn pairs an utterance with the expectations to check after it.
test_conversation = [
    ("I need office chairs", {"expected_intent": "search_products"}),
    ("Under $300", {"expected_slots": {"price_max": 300}}),
    ("Mesh preferred", {"expected_slots": {"material": "mesh"}}),
    ("Show me options", {"expected_action": "display_results"}),
]

Ahrefs’ chatbot testing research shows that comprehensive conversation testing reduces production failures by 78% compared to testing only happy-path scenarios.
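A conversation test suite needs a runner that feeds each utterance to the NLU layer and reports mismatches. The sketch below assumes each turn is an utterance paired with a dict of expected fields; `fake_understand` is a hypothetical stand-in for a real NLU call and deliberately lacks one answer so the runner has something to report.

```python
def run_conversation_test(turns, understand):
    """Return a list of (utterance, field, expected, actual) mismatches."""
    failures = []
    for utterance, expected in turns:
        actual = understand(utterance)
        for field_name, want in expected.items():
            if actual.get(field_name) != want:
                failures.append((utterance, field_name, want, actual.get(field_name)))
    return failures


def fake_understand(utterance: str) -> dict:
    # Hypothetical canned NLU results; replace with a call to the real model.
    canned = {
        "I need office chairs": {"intent": "search_products"},
        "Under $300": {"slots": {"price_max": 300}},
    }
    return canned.get(utterance, {})


turns = [
    ("I need office chairs", {"intent": "search_products"}),
    ("Under $300", {"slots": {"price_max": 300}}),
    ("Mesh preferred", {"slots": {"material": "mesh"}}),
]
failures = run_conversation_test(turns, fake_understand)
```

Collecting every mismatch, instead of stopping at the first, makes regressions easier to triage when one model change affects several conversation patterns.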

What Metrics Indicate Successful Conversational Design?

Focus on task completion, efficiency, and agent satisfaction—not just technical accuracy.

Critical metrics:

- Task completion rate (did agent accomplish their goal?)

- Average turns to completion (efficiency)

- Clarification rate (how often are clarifications needed?)

- Error recovery success (when misunderstandings occur, are they resolved?)

- Abandonment rate (agents giving up mid-conversation)

Supporting metrics:

- Intent classification accuracy

- Entity extraction precision/recall

- Response time (latency)

- API success rates

- Agent satisfaction scores (if available)

Track metrics by conversation type—search conversations vs. transactional vs. support conversations have different success patterns.
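Several of the critical metrics can be computed from per-conversation logs. The `ConversationLog` record is hypothetical, and the sketch makes simplifying assumptions: every conversation that did not complete counts as abandoned, and clarification rate is measured per turn.

```python
from dataclasses import dataclass


@dataclass
class ConversationLog:
    completed: bool      # did the agent accomplish its goal?
    turns: int           # total turns in the conversation
    clarifications: int  # turns spent on clarification


def summarize(logs: list) -> dict:
    """Aggregate completion, efficiency, and clarification metrics."""
    total = len(logs)
    total_turns = sum(log.turns for log in logs)
    if total == 0 or total_turns == 0:
        return {}
    completed = [log for log in logs if log.completed]
    completion_rate = len(completed) / total
    return {
        "task_completion_rate": completion_rate,
        "abandonment_rate": 1 - completion_rate,
        "avg_turns_to_completion": (
            sum(log.turns for log in completed) / len(completed) if completed else None
        ),
        "clarification_rate": sum(log.clarifications for log in logs) / total_turns,
    }


metrics = summarize([
    ConversationLog(True, 4, 1),
    ConversationLog(True, 6, 0),
    ConversationLog(True, 5, 2),
    ConversationLog(False, 5, 1),
])
```

Running `summarize` separately on search, transactional, and support conversations gives the per-type breakdown recommended above.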

Should You Implement A/B Testing for Conversation Flows?

Yes—conversation design benefits enormously from empirical testing.

Test variations in:

- Response phrasing and tone

- Clarification strategies (when to ask vs. when to infer)

- Information presentation (list format vs. conversational description)

- Confirmation patterns (explicit vs. implicit)

- Error messaging and recovery options

Example A/B test:

- Variant A: “I found 23 chairs. The top option is…”

- Variant B: “Great! I have 23 mesh chairs under $300. Would you like to see the highest rated or the best value options first?”

Measure which variant leads to higher completion rates, fewer clarifications, and better agent satisfaction.
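For A/B tests on conversation flows, each agent should see the same variant on every visit. One common way to get that is to hash a stable identifier; the `assign_variant` helper and the identifiers below are assumptions for illustration.

```python
import hashlib


def assign_variant(agent_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map an agent to a variant for a given experiment."""
    digest = hashlib.sha256(f"{experiment}:{agent_id}".encode()).digest()
    return variants[digest[0] % len(variants)]


variant = assign_variant("agent-42", "search-results-phrasing")
```

Including the experiment name in the hash keeps assignments independent across experiments, so an agent in variant A of one test is not systematically in variant A of the next.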

Integration With Agent-Accessible Architecture

The conversational interfaces that agents use should integrate seamlessly with agent-accessible architecture, API-first content strategy, and machine-readable content.

Conversational interfaces sit as abstraction layers above your APIs and content systems. Agents express intent conversationally, the interface translates to API calls, executes actions, and translates responses back to natural language.

Think of agent-accessible architecture as the infrastructure, API-first content as the data layer, machine-readable content as the semantic layer, and conversational interfaces as the natural language access layer.

Organizations excelling with agent enablement build conversational layers that:

- Translate natural language to structured API requests

- Consume machine-parsable data for accurate responses

- Navigate agent-friendly architectures programmatically

- Provide natural language responses alongside structured data

- Maintain conversation context across multi-turn interactions

Your agent interaction design should enhance, not replace, programmatic API access. Sophisticated agents might use conversational interfaces for complex queries while using direct APIs for bulk operations or real-time data access.

When you implement conversational architecture that integrates with your broader agent enablement infrastructure, you’re providing agents multiple ways to accomplish goals—choosing the most appropriate interface for each task.

Common Conversational Design Mistakes

Are You Treating Conversations Like Form-Filling Exercises?

This is the most common conversational design anti-pattern.

Bad (form-like):

System: "What's your first name?"

Agent: "John"

System: "What's your last name?"

Agent: "Smith"

System: "What's your email?"

Agent: "john@example.com"

Good (conversational):

System: "I'll need your name and email to create your account. You can provide these together or separately."

Agent: "John Smith, john@example.com"

System: "Perfect! Creating account for John Smith at john@example.com..."

Allow flexible information provision. Don’t force rigid turn-by-turn slot filling when agents can provide multiple pieces of information at once.

Why Do Overly Chatty Responses Frustrate Agents?

Agents optimize for efficiency, not entertainment—excessive pleasantries waste processing and slow task completion.

Overly chatty:

System: "Oh wonderful! I'm absolutely delighted to help you find the perfect office chair today! As a matter of fact, office chairs are one of my favorite things to help with! Did you know that the right chair can improve your productivity by up to 20%? Anyway, I'd love to learn more about what you're looking for! Tell me, what's your budget range? And while you're thinking about that, would you like some interesting facts about ergonomic design?"

Appropriately concise:

System: "I'll help you find office chairs. What's your budget range?"

Match verbosity to agent type: customer service agents might appreciate a friendly tone, while automated data collection agents prefer minimal fluff.

Pro Tip: “Implement tone/verbosity preferences at the agent level. Let agents specify ‘concise’ vs. ‘friendly’ communication styles and adapt responses accordingly.” — Rasa Conversational Design Guide

Are You Failing to Handle Out-of-Scope Queries Gracefully?

Every conversational interface receives queries it wasn’t designed to handle—plan for them.

Poor handling:

Agent: "What's the weather tomorrow?"

System: "I don't understand."

Better handling:

Agent: "What's the weather tomorrow?"

System: "I'm designed to help with product searches and orders, not weather information. I can help you find products, check order status, or manage your account. What would you like to do?"

Clearly communicate scope limitations while guiding agents toward supported capabilities. Maintain conversation flow rather than dead-ending on confusion.

Future-Proofing Conversational Interfaces

How Will Large Language Models Change Conversational Design?

LLMs enable more natural, flexible conversations but introduce new challenges around accuracy and control.

Traditional intent-based systems:

- Predictable behavior

- Controlled responses

- Limited flexibility

- Requires explicit training for new intents

LLM-powered systems:

- Highly flexible conversations

- Natural language understanding

- Risk of hallucination or incorrect information

- Harder to control exact behavior

The future is hybrid—LLMs for understanding and generation, traditional systems for validation and execution. Use LLMs to parse complex intents and generate natural responses, but validate actions through deterministic business logic before execution.

What About Multimodal Conversational Agents?

Agents will increasingly combine text, voice, visual, and gestural inputs simultaneously.

Design conversational interfaces that:

- Accept voice input with visual context (agent shows image, asks “How much is this?”)

- Provide both audio and visual responses (speak description while showing product)

- Support spatial/gestural interactions in AR/VR contexts

- Maintain conversation context across modality switches

This requires richer conversation state that includes visual context, spatial relationships, and multimodal interaction history.

Should You Prepare for Agent Swarms and Multi-Agent Conversations?

Yes—future scenarios involve multiple agents collaborating on single tasks.

Single agent: One agent completes entire purchase flow.

Agent swarm: Procurement agent finds products, negotiation agent optimizes pricing, compliance agent verifies regulatory requirements, payment agent handles transaction—all coordinating through conversational interfaces.

Design for:

- Agent identification in group conversations

- Role-based conversation permissions

- Coordination between agents (handoffs, referrals)

- Maintaining separate context per agent while tracking overall progress

FAQ: Conversational Interfaces for Agents

What’s the difference between conversational interfaces for humans vs. AI agents?

Human-focused chatbots prioritize engagement, empathy, and natural conversation flow—they can tolerate ambiguity and use social cues. Agent-focused interfaces prioritize efficiency, structured responses, and programmatic action—they need precise intent mapping and parseable outputs. Agents don’t need “How’s your day?” pleasantries, but they do need structured data alongside natural language responses. Agents retry failed interactions systematically rather than getting frustrated. The key difference: humans tolerate imperfect UX with personality; agents need reliable APIs with conversational sugar coating.

How do I handle conversations that agents abandon mid-flow?

Implement session persistence with recovery mechanisms. Save conversation state regularly (after each successful turn). When agents reconnect, offer to resume: “You were searching for mesh office chairs under $300. Would you like to continue or start fresh?” Set reasonable session timeouts (30-60 minutes for shopping, 5-10 minutes for simple queries). Provide explicit “save for later” commands. For long-running tasks, support asynchronous patterns where agents can check back later rather than maintaining active connections.

Should conversational interfaces be stateless or stateful?

Stateful for optimal agent experience, with state managed through session tokens rather than in-memory storage. Each conversation turn should include session context (conversation history, current slots, pending actions) that persists across requests. However, implement state in distributed, scalable storage (Redis, DynamoDB) rather than application memory so conversations can survive server restarts and scale horizontally. Provide session recovery mechanisms for agents that disconnect and reconnect. Balance between rich stateful context and the operational benefits of stateless architectures.

How do I prevent conversational interfaces from being abused for data harvesting?

Implement rate limiting, authentication requirements, and monitoring for systematic extraction patterns. Require API credentials for access beyond basic queries. Limit result sets per query (max 50 products per search, not unlimited). Detect patterns like alphabetical product name queries suggesting systematic harvesting. Implement CAPTCHAs or additional challenges when suspicious patterns emerge. For high-value data, require business agreements and usage tracking. Monitor for unusual conversation patterns (no errors, systematic coverage, high velocity) that indicate bots rather than legitimate agents.

What’s the best way to test conversational interfaces at scale?

Combination of synthetic conversation generation and production traffic replay. Generate thousands of synthetic conversations covering intent variations, edge cases, and error conditions using conversation templates and LLM-assisted diversity. Capture and replay real production conversations (sanitized of PII) to test against actual usage patterns. Implement conversation simulation that mimics real agent behavior patterns. Use shadow deployment testing where new conversation logic runs parallel to production without affecting actual responses, comparing outputs. Measure using automated metrics (intent accuracy, slot filling completeness, task completion) rather than manual review.

How do conversational interfaces integrate with existing REST APIs?

Conversational layers sit above REST APIs as abstraction/translation layers. The conversation interface parses agent intent, extracts entities, maps to appropriate API endpoints, executes requests, and translates API responses to natural language. For example: Agent says “Find blue chairs under $300” → Interface extracts intent (product_search) and entities → Calls GET /products?category=chairs&color=blue&max_price=300 → Receives JSON response → Generates “I found 23 blue chairs under $300” with structured results. The conversational interface doesn’t replace APIs—it makes them accessible via natural language while preserving programmatic API access for agents that prefer it.
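The mapping step in that example, from extracted entities to a REST request, can be sketched with the standard library's `urlencode`. The entity names and the `ENTITY_TO_PARAM` table are assumptions chosen to reproduce the request shown above.

```python
from urllib.parse import urlencode

# Hypothetical mapping from NLU entity names to the API's query parameters.
ENTITY_TO_PARAM = {
    "category": "category",
    "color": "color",
    "price_max": "max_price",
}


def build_search_path(entities: dict) -> str:
    """Translate extracted entities into a /products query string."""
    params = {
        ENTITY_TO_PARAM[name]: value
        for name, value in entities.items()
        if name in ENTITY_TO_PARAM
    }
    return "/products?" + urlencode(params)


path = build_search_path({"category": "chairs", "color": "blue", "price_max": 300})
```

Entities without a mapping are dropped rather than passed through, which keeps unexpected NLU output from reaching the API as arbitrary query parameters.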

Final Thoughts

The conversational interfaces that agents need aren’t luxuries. They’re necessities for competing in an agent-mediated digital economy.

As autonomous systems handle increasing percentages of web interactions, organizations without conversational access layers will be invisible to entire categories of agents. Your products, services, and content might as well not exist if agents can’t interact with them through natural language.

The good news: conversational interfaces augment rather than replace existing systems. You’re not rebuilding from scratch—you’re adding translation layers that make existing functionality accessible to language-based agents.

Start with high-value use cases. Implement basic NLU for common intents. Build conversation flows for your most frequent agent tasks. Test with real agents. Iterate based on conversation analytics.

Conversations are universal interfaces. Every intelligence—human or artificial—understands language. By building conversational architecture that serves both species well, you’re future-proofing your digital presence for whatever forms of intelligence emerge next.

Talk to your agents. Listen to their responses. The future of interaction is conversational.

Citations

Gartner Press Release – Conversational AI in Contact Centers

SEMrush Blog – Conversational AI Statistics

SEMrush Blog – Conversational AI ROI

Statista – Share of Common Languages on Internet

Ahrefs Blog – Chatbot Best Practices

Statista – Voice Assistant Statistics

Ahrefs Blog – Chatbot Testing Guide

Rasa Documentation – Conversational AI Framework
