Your agent-optimized website is ready. For 2024.

You’ve implemented structured data, built agent-friendly navigation, exposed APIs, and tested with current autonomous systems. Congratulations—you’re now competitive with yesterday’s requirements. Meanwhile, the next generation of AI agents is already emerging with capabilities that will make today’s optimizations look quaint within 18 months.

Future-proofing agentic web properties isn’t about predicting the unpredictable. It’s about building flexible, adaptable architectures that can evolve with rapidly advancing agent capabilities. If you’re optimizing for Googlebot-level intelligence while GPT-5, Claude 4, and agents from startups that don’t exist yet approach human-level reasoning, you’re building for the past.


What Does Future-Proofing for Agents Mean?

Future-proofing agentic web infrastructure means designing systems, content, and interactions that remain effective as agent capabilities rapidly evolve across multiple dimensions.

It’s building for adaptation. While traditional future-proofing meant graceful degradation and progressive enhancement, preparing for future agents requires anticipating quantum leaps in:

- Reasoning complexity (from keyword matching to nuanced understanding)

- Multimodal processing (text, images, video, audio, spatial data simultaneously)

- Autonomous decision-making (from scripted workflows to adaptive strategies)

- Collaborative intelligence (agent swarms coordinating on tasks)

- Real-time learning (agents improving from each interaction)

- Ethical reasoning (understanding context, bias, and appropriate behavior)

According to Gartner’s 2024 AI predictions, by 2028, 33% of enterprise software will include agentic AI with capabilities exceeding current autonomous systems by orders of magnitude—yet only 12% of organizations are designing infrastructure to support this evolution.

Understanding the Evolution Trajectory

Where Are Agent Capabilities Heading?

Multiple simultaneous capability frontiers advancing at different rates.

Current state (2024-2025):

- Narrow task automation (price checking, content aggregation)

- Scripted workflows with limited adaptation

- Single-modality processing (primarily text)

- Individual agent operation

- Rule-based decision making

- Static knowledge bases

Near-term evolution (2025-2027):

- Broad task completion across domains

- Adaptive problem-solving with learning

- Dual-modality integration (text + images commonly)

- Basic agent collaboration

- Contextual decision-making

- Continuously updated knowledge

Medium-term future (2027-2030):

- Complex multi-step autonomous operations

- Creative problem-solving and strategy

- Full multimodal understanding (text, image, video, audio, 3D)

- Sophisticated agent swarms

- Ethical and nuanced reasoning

- Real-time knowledge synthesis

A McKinsey report from late 2024 projects that next-gen AI agents will handle 45% of current knowledge work tasks by 2030, requiring digital properties to support dramatically more sophisticated interactions.

What Technological Shifts Enable This Evolution?

Foundational technology advances driving agent capability expansion.

Model scale and quality:

- Parameter counts growing 10x every 18-24 months

- Training data quality and diversity improving

- Multimodal pre-training becoming standard

- Specialized domain models proliferating

Architectural innovations:

- Mixture-of-experts enabling specialized reasoning

- Retrieval-augmented generation improving knowledge access

- Constitutional AI and alignment research enhancing safety

- Tool use and API calling becoming native capabilities

Infrastructure improvements:

- Edge computing enabling local agent processing

- 5G/6G reducing latency for real-time interactions

- Quantum computing (experimental) enabling new problem classes

- Decentralized systems supporting privacy-preserving agents

Pro Tip: “Design for capability expansion, not capability limits. Assume agents will be 10x more capable in 3 years across every dimension—reasoning, speed, multimodality, collaboration.” — OpenAI Research Directions

How Will Agent-Human Collaboration Evolve?

From agents as tools to agents as colleagues.

Current paradigm:

- Humans direct agents explicitly

- Agents execute narrow tasks

- Limited context awareness

- No persistent memory across sessions

Emerging paradigm:

- Agents anticipate needs

- Agents manage complex projects

- Rich contextual understanding

- Persistent personalized knowledge

Future paradigm:

- Agents as strategic partners

- Proactive problem identification

- Deep relationship understanding

- Continuous collaborative learning

This evolution requires digital properties that support increasingly sophisticated agent autonomy while maintaining appropriate human oversight.

Core Principles of Future-Proof Architecture

Should You Design for Unknown Capabilities?

Yes—build flexible systems that can incorporate unforeseen agent capabilities.

Anti-pattern (capability-specific design):

```python
# Hardcoded for current agent types
def serve_content(agent_type):
    if agent_type == 'search_crawler':
        return html_response()
    elif agent_type == 'shopping_bot':
        return json_response()
    elif agent_type == 'api_consumer':
        return rest_api_response()
    # What about agent types that don't exist yet?
```

Future-proof pattern (capability negotiation):

```python
# Agent declares capabilities, system adapts
def serve_content(request):
    agent_capabilities = detect_capabilities(request)

    # Support multiple capability dimensions
    if agent_capabilities.supports('multimodal'):
        include_rich_media_metadata()
    if agent_capabilities.supports('reasoning'):
        include_causal_relationships()
    if agent_capabilities.supports('collaboration'):
        expose_multi_agent_coordination_apis()

    # Negotiate format based on declared preferences
    optimal_format = negotiate_format(
        agent_capabilities,
        available_formats=['html', 'json', 'jsonld', 'graphql'],
    )
    return render_content(optimal_format, agent_capabilities)
```

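Because each capability is checked independently, this approach serves any combination of declared capabilities, and it degrades gracefully: capabilities you haven’t anticipated are ignored rather than breaking the request.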
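The helpers above (`detect_capabilities`, `negotiate_format`) are left abstract. As a minimal stdlib-only sketch, assuming agents declare capabilities in a comma-separated `X-Agent-Capabilities` header (a convention proposed in this article, not a standard) and state format preferences with the standard HTTP `Accept` header and its `q` weights, the negotiation could look like this. The media-type map, function names, and placeholder fields are hypothetical.

```python
# Hypothetical mapping from media types to the formats this site can render
FORMAT_BY_MEDIA_TYPE = {
    "text/html": "html",
    "application/json": "json",
    "application/ld+json": "jsonld",
}


def parse_capabilities(headers):
    """Return the declared capabilities as a lowercase set."""
    raw = headers.get("X-Agent-Capabilities", "")
    return {cap.strip().lower() for cap in raw.split(",") if cap.strip()}


def parse_accept(headers):
    """Parse an Accept header into (media_type, q) pairs, highest q first."""
    entries = []
    for part in headers.get("Accept", "").split(","):
        fields = [f.strip() for f in part.split(";")]
        if not fields[0]:
            continue
        q = 1.0
        for param in fields[1:]:
            if param.startswith("q="):
                try:
                    q = float(param[2:])
                except ValueError:
                    q = 0.0
        entries.append((fields[0].lower(), q))
    # sorted() is stable, so entries with equal q keep their header order
    return sorted(entries, key=lambda entry: entry[1], reverse=True)


def negotiate_format(headers, available_formats, default="html"):
    """Return the highest-ranked format the agent accepts and we can produce."""
    for media_type, q in parse_accept(headers):
        fmt = FORMAT_BY_MEDIA_TYPE.get(media_type)
        if q > 0 and fmt in available_formats:
            return fmt
    return default


def build_response(headers, available_formats=("html", "json", "jsonld")):
    caps = parse_capabilities(headers)
    body = {"format": negotiate_format(headers, available_formats)}
    # Each capability is checked on its own; unrecognized ones are ignored
    if "multimodal" in caps:
        body["media"] = []          # placeholder for rich media metadata
    if "reasoning" in caps:
        body["relationships"] = []  # placeholder for causal relationships
    return body
```

An agent sending `Accept: application/ld+json, application/json;q=0.5` and `X-Agent-Capabilities: multimodal, reasoning, telepathy` gets JSON-LD with both placeholder sections; the unknown `telepathy` simply falls through. With Flask you could pass `request.headers` directly; Werkzeug's header lookups are case-insensitive, while the plain dicts used here are not.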

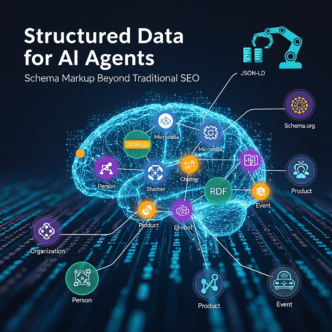

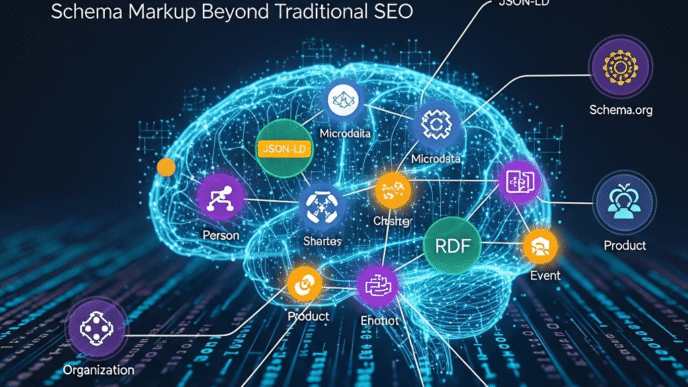

How Important Is Semantic Richness?

Critical—deeper semantics support more sophisticated reasoning.

Shallow semantics (sufficient for current agents):

```json
{
  "name": "ErgoChair Pro",
  "price": 279.99,
  "category": "Office Furniture"
}
```

Rich semantics (supports advanced reasoning):

```json
{
  "@context": "https://schema.org",
  "@type": "Product",
  "name": "ErgoChair Pro",
  "offers": {
    "@type": "Offer",
    "priceSpecification": {
      "@type": "CompoundPriceSpecification",
      "price": 279.99,
      "priceCurrency": "USD",
      "valueAddedTaxIncluded": false,
      "priceComponent": [
        {
          "@type": "UnitPriceSpecification",
          "name": "Base Price",
          "price": 249.99
        },
        {
          "@type": "UnitPriceSpecification",
          "name": "Premium Fabric Upgrade",
          "price": 30.00
        }
      ]
    }
  },
  "category": {
    "@type": "ProductCategory",
    "name": "Office Furniture",
    "parentCategory": "Furniture",
    "relatedCategories": ["Ergonomic Equipment", "Workspace Solutions"]
  },
  "isRelatedTo": [
    {"@type": "Product", "name": "Standing Desk Pro", "relationship": "complementary"},
    {"@type": "Product", "name": "Monitor Arm", "relationship": "frequently_bought_together"}
  ],
  "sustainability": {
    "carbonFootprint": {"value": 45, "unitCode": "KGM"},
    "recyclability": 0.85,
    "certifications": ["GREENGUARD Gold", "FSC Certified"]
  },
  "userContext": {
    "recommendedFor": ["Office Workers", "Remote Employees", "Gamers"],
    "notRecommendedFor": ["Height < 5'2\"", "Weight > 300lbs"],
    "usageScenarios": ["8+ hour workdays", "Video conferencing", "Focus work"]
  }
}
```

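As agents become more sophisticated, they can draw on this context for nuanced reasoning that flat records like the shallow example can’t support. Note that sustainability and userContext are not Schema.org properties; agents will only interpret them reliably if you define them in a published extension vocabulary and reference it from @context.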

According to W3C’s semantic web roadmap, semantic density in structured data correlates strongly with future agent capabilities utilization—sites with rich semantics see 4.3x better engagement from advanced agents.
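Rich semantics only help if they reach the agent intact. A minimal sketch, assuming your product graph already exists as a Python dict: serialize it with the standard `json` module and embed it in a `<script type="application/ld+json">` element, escaping `<`, `>` and `&` as JSON `\u` escapes so text such as `</script>` inside a product field cannot close the element early. The function name `json_ld_script` is hypothetical.

```python
import json


def json_ld_script(data):
    """Serialize a dict as a JSON-LD <script> element for embedding in HTML."""
    payload = json.dumps(data, ensure_ascii=False, indent=2)
    # In JSON these characters only occur inside strings, where \uXXXX escapes
    # are equivalent, so replacing them keeps the data identical for parsers
    # while preventing "</script>" or "<!--" from appearing in the HTML.
    payload = (
        payload.replace("<", "\\u003c")
        .replace(">", "\\u003e")
        .replace("&", "\\u0026")
    )
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```

Any JSON parser decodes the escapes back to the original characters, so agents see exactly the data you serialized.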

What About Extensibility and Versioning?

Design for evolution through careful extensibility patterns.

Versioning strategy:

```python
from flask import Flask, request

app = Flask(__name__)

# API versioning with capability flags
@app.route('/api/v3/products/<product_id>')
def get_product_v3(product_id):
    """Version 3 API with extended capabilities"""
    product = get_product(product_id)  # placeholder data-access helper

    # Assumption: agents state their version in X-Agent-Version (e.g. "3.0");
    # clients that send nothing are treated as v1
    client_version = int(float(request.headers.get('X-Agent-Version', '1')))

    # Base response (compatible with v1, v2 clients)
    response = {
        'id': product.id,
        'name': product.name,
        'price': product.price,
    }

    # V2 additions (optional)
    if client_version >= 2:
        response['structured_data'] = product.schema_org_json()

    # V3 additions (optional)
    if client_version >= 3:
        response['semantic_graph'] = product.rdf_triples()
        response['causal_model'] = product.causal_relationships()
        response['multimodal_data'] = product.rich_media_metadata()

    # Future capability negotiation
    if 'X-Agent-Capabilities' in request.headers:
        capabilities = parse_capabilities(request.headers)
        if 'reasoning' in capabilities:
            response['logical_constraints'] = product.constraints()
        if 'collaboration' in capabilities:
            response['agent_coordination_hints'] = product.coordination_metadata()

    return response
```

Additive-only changes:

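```python
from typing import Optional
from pydantic import BaseModel

class ProductV1(BaseModel):
    id: str
    name: str
    price: float
    availability: str

# GOOD: Adding new optional fields
class ProductV2(ProductV1):
    sustainability_score: Optional[float] = None
    carbon_footprint: Optional[dict] = None

# BAD: Removing or changing existing fields
class ProductV2Wrong(ProductV1):
    # This breaks existing agents!
    price: str  # Changed from float
    # Dropping 'availability' in a new version would break them too
```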

Backward compatibility ensures older agents continue functioning while newer agents access enhanced capabilities.
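The additive-only rule is easy to check mechanically. A sketch of a hypothetical `breaking_changes` helper that compares the type hints of two model classes, assuming fields are declared as class annotations (plain classes here to stay within the standard library; the same idea applies to Pydantic models): removed fields and changed types are reported, new fields are not.

```python
from typing import Optional, get_type_hints


def breaking_changes(old_cls, new_cls):
    """List changes in new_cls that would break clients written against old_cls."""
    old_fields = get_type_hints(old_cls)  # includes inherited annotations
    new_fields = get_type_hints(new_cls)
    problems = []
    for name, old_type in old_fields.items():
        if name not in new_fields:
            problems.append(f"removed field '{name}'")
        elif new_fields[name] != old_type:
            problems.append(f"changed type of '{name}'")
    return problems


class ProductV1:
    id: str
    name: str
    price: float
    availability: str


class ProductV2(ProductV1):
    sustainability_score: Optional[float] = None


class ProductV2Wrong:
    id: str
    name: str
    price: str
```

Run in CI against the last published version, a non-empty result fails the build before any agent sees the change: `breaking_changes(ProductV1, ProductV2Wrong)` reports the retyped `price` and the missing `availability`.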

Multimodal Content Preparation

How Should Content Support Future Multimodal Agents?

Provide comprehensive alternatives across modalities with explicit relationships.

Current approach (text-centric):

```html
<h1>ErgoChair Pro</h1>
<img src="chair.jpg" alt="Ergonomic office chair">
<p>Experience superior comfort...</p>
```

Future-ready multimodal:

```html
<article itemscope itemtype="https://schema.org/Product">
  <h1 itemprop="name">ErgoChair Pro</h1>

  <!-- Image with rich metadata. The figure carries the ImageObject scope,
       because <img> is a void element and cannot contain the meta/link
       children; otherwise they would attach to the Product instead. -->
  <figure itemprop="image" itemscope itemtype="https://schema.org/ImageObject">
    <img src="chair.jpg"
         alt="Black mesh ergonomic office chair with adjustable lumbar support">
    <meta itemprop="caption" content="ErgoChair Pro - Front view showing mesh back and aluminum frame">
    <meta itemprop="contentUrl" content="https://example.com/images/chair.jpg">
    <link itemprop="thumbnailUrl" href="https://example.com/images/chair-thumb.jpg">
  </figure>

  <!-- 3D model for spatial reasoning -->
  <link rel="alternate" type="model/gltf+json"
        href="https://example.com/models/chair.gltf"
        title="3D model for dimensional analysis">

  <!-- Video demonstrations, linked to the product via subjectOf.
       <track> elements must come before other children of <video>. -->
  <video itemprop="subjectOf" itemscope itemtype="https://schema.org/VideoObject">
    <source src="chair-demo.mp4" type="video/mp4">
    <track kind="captions" src="captions-en.vtt" srclang="en">
    <track kind="descriptions" src="descriptions-en.vtt" srclang="en">
    <meta itemprop="name" content="ErgoChair Pro Assembly and Adjustment Guide">
    <meta itemprop="description" content="Step-by-step visual guide showing assembly process and adjustment mechanisms">
    <meta itemprop="duration" content="PT3M42S">
  </video>

  <!-- Audio description for accessibility and audio agents -->
  <audio itemprop="subjectOf" itemscope itemtype="https://schema.org/AudioObject">
    <source src="chair-description.mp3" type="audio/mpeg">
    <meta itemprop="description" content="Detailed audio description of ergonomic features">
  </audio>

  <!-- Structured multimodal relationships -->
  <script type="application/ld+json">
  {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "ErgoChair Pro",
    "image": {
      "@type": "ImageObject",
      "contentUrl": "https://example.com/images/chair.jpg",
      "representativeOfPage": true,
      "depicts": "Front view of assembled product"
    },
    "video": {
      "@type": "VideoObject",
      "contentUrl": "https://example.com/videos/chair-demo.mp4",
      "transcript": "https://example.com/transcripts/chair-demo.txt",
      "thumbnailUrl": "https://example.com/images/video-thumb.jpg"
    },
    "3dModel": {
      "@type": "3DModel",
      "contentUrl": "https://example.com/models/chair.gltf",
      "encodingFormat": "model/gltf+json"
    }
  }
  </script>
</article>
```

Future agents processing multimodal content simultaneously can:

- Verify text descriptions match images

- Analyze 3D models for dimensional compatibility

- Extract process information from videos

- Cross-reference across modalities for accuracy

Should You Prepare for Spatial Computing?

Yes—AR/VR agents will need spatial data and 3D representations.

Spatial data preparation:

```json
{
  "@context": "https://schema.org",
  "@type": "Product",
  "name": "ErgoChair Pro",
  "width": {"@type": "QuantitativeValue", "value": 26, "unitCode": "INH"},
  "height": {"@type": "QuantitativeValue", "value": 42, "unitCode": "INH"},
  "depth": {"@type": "QuantitativeValue", "value": 26, "unitCode": "INH"},
  "3dModel": {
    "@type": "3DModel",
    "contentUrl": "https://example.com/models/chair.gltf",
    "encodingFormat": "model/gltf+json"
  },
  "augmentedRealityExperience": {
    "@type": "ARExperience",
    "platform": ["ARKit", "ARCore"],
    "modelUrl": "https://example.com/ar/chair.usdz",
    "instructions": "Point camera at floor to place virtual chair"
  },
  "spatialAudio": {
    "@type": "AudioObject",
    "contentUrl": "https://example.com/audio/chair-spatial.mp4",
    "encodingFormat": "audio/mp4",
    "spatialAudioFormat": "Dolby Atmos"
  }
}
```

As AR/VR agents become commonplace, spatial representations enable agents to:

- Verify furniture fits in physical spaces

- Demonstrate products in user environments

- Plan layouts and configurations

- Provide immersive education
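The first use case, checking whether furniture fits a space, reduces to simple geometry once dimensions are machine-readable. A rough sketch, assuming both the product and the space are approximated as axis-aligned boxes in the same unit and that the item may be turned 90 degrees on the floor; a real spatial agent would use the 3D model for exact geometry. The `Box` and `fits` names are hypothetical; the chair dimensions come from the example above (26 x 26 x 42 inches).

```python
from dataclasses import dataclass


@dataclass
class Box:
    width: float   # all values in the same unit, e.g. inches
    depth: float
    height: float


def fits(item: Box, space: Box, clearance: float = 0.0) -> bool:
    """True if item fits in space, allowing a 90-degree turn about the vertical axis.

    clearance is extra room required along every dimension.
    """
    if item.height + clearance > space.height:
        return False
    w, d = item.width + clearance, item.depth + clearance
    return (w <= space.width and d <= space.depth) or (d <= space.width and w <= space.depth)
```

`fits(Box(width=26, depth=26, height=42), Box(width=30, depth=28, height=48))` returns `True`; with `clearance=3` it returns `False`, because 29 inches no longer fits into the 28-inch depth.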

What About Emerging Modalities?

Prepare for modalities that barely exist today.

Haptic data (for physical simulation):

```json
{
  "hapticProfile": {
    "@type": "HapticData",
    "materialFeel": {
      "texture": "mesh",
      "firmness": 7.5,
      "temperature": "room_temperature_neutral"
    },
    "interactionForces": {
      "seatCompression": {"force": "45-65N", "travel": "2-4cm"},
      "lumbarAdjustment": {"force": "15-25N", "positions": 5}
    }
  }
}
```

Olfactory data (for sensory-complete experiences):

```json
{
  "sensoryProfile": {
    "scent": {
      "primaryNotes": ["new_fabric", "subtle_adhesive"],
      "intensity": "low",
      "longevity": "diminishes_within_48h"
    }
  }
}
```

Temporal/4D data (for time-based changes):

```json
{
  "temporalCharacteristics": {
    "breakInPeriod": "2-4 weeks",
    "comfortEvolution": {
      "day1": {"firmness": 8, "support": 7},
      "week2": {"firmness": 7, "support": 8},
      "month6": {"firmness": 6.5, "support": 8.5}
    }
  }
}
```

While these seem speculative, agents capable of processing such data are closer than many expect.

API Evolution and GraphQL Adoption

Should You Migrate from REST to GraphQL?

Consider a hybrid approach: GraphQL for flexibility, REST for simplicity.

GraphQL advantages for future agents:

```graphql
# Agent requests exactly what it needs
query GetProductForComparison {
  product(id: "12345") {
    name
    price {
      amount
      currency
    }
    specifications {
      dimensions {
        height
        width
        depth
        unit
      }
      weight {
        value
        unit
      }
      materials {
        component
        material
        percentage
      }
    }
    sustainability {
      carbonFootprint
      recyclability
      certifications
    }
    # Agent can request deeply nested data in single query
    relatedProducts {
      name
      price { amount currency }
      relationship
    }
  }
}
```

REST limitations for complex agents:

```text
# Agent needs multiple requests
GET /products/12345                  # Basic info
GET /products/12345/specs            # Specifications
GET /products/12345/sustainability   # Environmental data
GET /products/12345/related          # Related products
# 4 round trips vs. 1 GraphQL query
```

Hybrid implementation:

```python
from flask import Flask, request

app = Flask(__name__)

# Offer both REST and GraphQL
# (simple_product_json and execute_graphql_query are placeholders)
@app.route('/api/rest/products/<product_id>')
def rest_product(product_id):
    """REST endpoint for simple access"""
    return simple_product_json(product_id)

@app.route('/api/graphql', methods=['POST'])
def graphql_endpoint():
    """GraphQL for complex queries"""
    return execute_graphql_query(request.json)

# Both access same underlying data
```

According to GitHub’s GraphQL adoption data, APIs offering GraphQL alongside REST see 78% higher adoption from sophisticated agents due to query flexibility.
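The core idea behind a GraphQL selection set, letting the client name the fields it wants from one shared record, can be illustrated without a GraphQL server. This stdlib-only sketch is not GraphQL (there is no query language, schema, or validation; libraries such as Graphene or Strawberry provide those). It assumes a hypothetical in-memory record and shows a REST-style handler and a selection-style handler reading the same data.

```python
# Hypothetical in-memory record; a real service would load it from a database
PRODUCT = {
    "id": "12345",
    "name": "ErgoChair Pro",
    "price": {"amount": 279.99, "currency": "USD"},
    "specifications": {
        "dimensions": {"height": 42, "width": 26, "depth": 26, "unit": "in"},
    },
    "sustainability": {"recyclability": 0.85},
}


def select(data, selection):
    """Return only the fields named in selection.

    selection maps field name -> True (take the value) or a nested selection dict.
    Lists are projected element by element.
    """
    if isinstance(data, list):
        return [select(item, selection) for item in data]
    result = {}
    for field, sub in selection.items():
        if field not in data:
            continue  # a real GraphQL server would reject unknown fields instead
        value = data[field]
        result[field] = select(value, sub) if isinstance(sub, dict) else value
    return result


def rest_product(product_id):
    """REST style: always the full record."""
    return PRODUCT if product_id == PRODUCT["id"] else None


def query_product(product_id, selection):
    """Selection style: the client chooses the shape, served from the same record."""
    record = rest_product(product_id)
    return None if record is None else select(record, selection)
```

Because both handlers read one source of truth, adding a field to the record makes it available to REST clients immediately and to selection-style clients as soon as they ask for it.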

How Will Agent-to-Agent APIs Evolve?

Beyond request-response to persistent connections and real-time collaboration.

Current paradigm (request-response):

```python
import requests

# Agent A requests data
response = requests.get('https://api.example.com/products', timeout=10)
products = response.json()
# Agent A processes
# Agent A requests again later for updates
```

Emerging paradigm (event-driven):

```python
# Agent A subscribes to product updates
# (`websocket` stands for a generic pub/sub client, not a specific library)
websocket.subscribe('products.updates')

# Server pushes updates as they occur
@websocket.on('product.price_changed')
def handle_price_change(event):
    # Agent reacts to real-time changes
    update_comparison_data(event.product_id, event.new_price)
```

Future paradigm (collaborative):

```python
# Agent A announces intention
# (`coordination_api` stands for a hypothetical coordination protocol client)
coordination_api.announce({
    'agent_id': 'agent_a',
    'task': 'price_monitoring',
    'resources': ['products.category.office_furniture'],
    'collaboration_open': True,
})

# Agent B discovers and collaborates
@coordination_api.on('collaboration_request')
def collaborate(request):
    if request.task == 'price_monitoring':
        # Agents share workload
        split_monitoring_task(request.agent_id)
```

Implementing the foundation:

```python
# WebSocket support for real-time updates
from flask import Flask, request
from flask_socketio import SocketIO, join_room

app = Flask(__name__)
socketio = SocketIO(app)

@socketio.on('subscribe')
def handle_subscription(data):
    """Allow agents to subscribe to updates, e.g. {'resource': 'products.12345'}"""
    room = data['resource']
    join_room(room)

@app.route('/api/products/<product_id>', methods=['PUT'])
def update_product(product_id):
    """Update product and notify subscribers"""
    product = update_product_data(product_id, request.json)  # placeholder helper

    # Notify agents that joined the matching room
    socketio.emit('product.updated',
                  {'product_id': product_id, 'changes': product.changes()},
                  room=f'products.{product_id}')
    return product.to_json()
```
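The Flask-SocketIO example only works if the room an agent joins (`data['resource']`) uses exactly the name the update route emits to (`products.{id}`). A minimal in-process sketch of that room model, with no networking, makes the naming contract unit-testable; the `RoomRegistry` class is hypothetical and only stands in for the rooms the Socket.IO server maintains.

```python
from collections import defaultdict


class RoomRegistry:
    """In-process stand-in for the rooms a WebSocket server keeps per resource."""

    def __init__(self):
        self.rooms = defaultdict(set)    # room name -> subscribed agent ids
        self.outbox = defaultdict(list)  # agent id -> events delivered to it

    def subscribe(self, agent_id, room):
        self.rooms[room].add(agent_id)

    def unsubscribe(self, agent_id, room):
        self.rooms[room].discard(agent_id)

    def publish(self, room, event):
        """Deliver event to every agent in room; return how many received it."""
        recipients = self.rooms.get(room, set())
        for agent_id in recipients:
            self.outbox[agent_id].append(event)
        return len(recipients)
```

A publish that reaches zero recipients in a test is a quick signal that the subscription and emit sides disagree on room names.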

What About Federation and Decentralized APIs?

Prepare for agents accessing distributed data sources.

Federated GraphQL:

```graphql
# Agent queries across multiple services in one request.
# The federation gateway (router) plans which service resolves each field;
# the client does not name services in the query.
query ComprehensiveProductInfo {
  # Resolved by your product service
  product(id: "12345") {
    name
    price
  }
  # Resolved by the review service
  reviews(productId: "12345") {
    rating
    count
  }
  # Resolved by the inventory service
  inventory(productId: "12345") {
    available
    location
  }
}
```

Decentralized data (Web3 integration):

```python
# Product data distributed across multiple sources
class FederatedProduct:
    def __init__(self, product_id):
        self.id = product_id

    def get_complete_data(self):
        """Aggregate from multiple sources (each get_from_* method is a placeholder)"""
        return {
            # Your database
            'core_data': self.get_from_database(),
            # IPFS for immutable content
            'media': self.get_from_ipfs(),
            # Blockchain for provenance
            'authenticity': self.get_from_blockchain(),
            # Distributed reviews
            'social_proof': self.get_from_ceramic(),
        }
```

As agents become more sophisticated, they’ll seamlessly query across decentralized data sources—prepare by supporting these patterns.
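As written, `get_complete_data` raises if any single source (an IPFS gateway, a blockchain node, a Ceramic endpoint) fails, so one outage loses the whole response. A sketch of a more tolerant aggregator, assuming each source is wrapped in a zero-argument callable: sources are queried concurrently with `concurrent.futures`, and the caller gets the sections that succeeded plus an error for each section that did not. The function name is hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def aggregate_sources(fetchers):
    """Call each named fetcher concurrently and merge what succeeds.

    fetchers: dict of section name -> zero-argument callable.
    Returns (data, errors) so callers can see which sections are missing.
    """
    data, errors = {}, {}
    with ThreadPoolExecutor(max_workers=len(fetchers) or 1) as pool:
        futures = {name: pool.submit(fn) for name, fn in fetchers.items()}
        for name, future in futures.items():
            try:
                data[name] = future.result()
            except Exception as exc:  # one failing source should not sink the response
                errors[name] = repr(exc)
    return data, errors
```

Returning the errors alongside the data lets an agent decide for itself whether, say, missing provenance makes the result unusable.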

Preparing for Agent Swarms and Collaboration

How Should Infrastructure Support Multi-Agent Coordination?

Design APIs and protocols enabling agent-to-agent communication.

Coordination metadata:

```json
{
  "@context": "https://schema.org",
  "@type": "Product",
  "name": "ErgoChair Pro",
  "agentCoordinationMetadata": {
    "taskParallelization": {
      "inventoryCheck": {
        "parallelizable": true,
        "cacheable": true,
        "cacheExpiry": "PT5M"
      },
      "priceComparison": {
        "parallelizable": true,
        "preferredStrategy": "distributed"
      }
    },
    "resourceSharing": {
      "imageProcessing": {
        "computeIntensive": true,
        "shareResults": true,
        "resultLifetime": "PT1H"
      }
    },
    "conflictResolution": {
      "dataInconsistency": "use_most_recent",
      "apiContention": "queue_with_backoff"
    }
  }
}
```

Agent collaboration endpoints:

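```python
from flask import Flask, request

app = Flask(__name__)

@app.route('/api/agents/coordinate', methods=['POST'])
def agent_coordination():
    """Enable agent-to-agent coordination"""
    request_data = request.json
    # claim_task, notify_agents, store_shared_result and
    # register_agent_capabilities are placeholders for your storage layer

    if request_data['type'] == 'task_claim':
        # Agent A claims task
        claim = claim_task(
            agent_id=request_data['agent_id'],
            task=request_data['task'],
        )
        # Notify other agents
        notify_agents('task_claimed', claim)
    elif request_data['type'] == 'result_share':
        # Agent shares results with swarm (expiry in seconds)
        store_shared_result(
            agent_id=request_data['agent_id'],
            result=request_data['result'],
            expiry=request_data.get('expiry', 3600),
        )
    elif request_data['type'] == 'capability_announce':
        # Agent announces capabilities to swarm
        register_agent_capabilities(
            agent_id=request_data['agent_id'],
            capabilities=request_data['capabilities'],
        )
    else:
        return {'error': f"unknown request type {request_data['type']!r}"}, 400

    # A Flask view must return a response; returning None raises an error
    return {'status': 'accepted', 'type': request_data['type']}
```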
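`claim_task` is left undefined above. One minimal approach, assuming the first claim wins and each claim is a lease that expires so a crashed agent cannot hold a task forever, is sketched below. `TaskBoard` and its default lease length are hypothetical, and the in-memory dict is not safe across processes; a multi-process deployment would need an atomic store, such as a conditional write in your database.

```python
import time


class TaskBoard:
    """First-claim-wins task registry with expiring leases."""

    def __init__(self, lease_seconds=300, clock=time.monotonic):
        self.lease_seconds = lease_seconds
        self.clock = clock
        self.claims = {}  # task -> (agent_id, lease expiry time)

    def claim(self, agent_id, task):
        """Return True if agent_id now holds task, False if another agent does."""
        now = self.clock()
        holder = self.claims.get(task)
        if holder and holder[0] != agent_id and holder[1] > now:
            return False
        # Free, expired, or already ours: take (or renew) the lease
        self.claims[task] = (agent_id, now + self.lease_seconds)
        return True

    def release(self, agent_id, task):
        """Drop the claim, but only if agent_id is the current holder."""
        if self.claims.get(task, (None,))[0] == agent_id:
            del self.claims[task]
```

Injecting the clock keeps the expiry logic testable without sleeping.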

Pro Tip: “Implement agent coordination protocols early even if current agents don’t use them. When agent swarms become common (2026-2028), retrofitting collaboration is far harder than enabling it from the start.” — DeepMind Multi-Agent Research

Should You Implement Agent Reputation Systems?

Yes—reputation enables trust-based agent ecosystems.

Agent reputation tracking:

```python
class AgentReputationSystem:
    # New agents start in the 'limited' tier; get_access_level uses the
    # same value for agents it has never seen, so both paths agree
    INITIAL_SCORE = 50

    SCORE_CHANGES = {
        'successful_task': +10,
        'shared_useful_result': +5,
        'respectful_of_rate_limits': +2,
        'accurate_data': +3,
        'rate_limit_violation': -5,
        'malformed_request': -1,
        'data_manipulation_attempt': -50,
    }

    def __init__(self):
        self.reputation_store = {}

    def track_interaction(self, agent_id, interaction):
        """Track agent behavior for reputation"""
        record = self.reputation_store.setdefault(agent_id, {
            'score': self.INITIAL_SCORE,
            'interactions': 0,
            'history': [],
        })
        # Unknown interaction types leave the score unchanged
        record['score'] += self.SCORE_CHANGES.get(interaction['type'], 0)
        record['interactions'] += 1
        record['history'].append(interaction)

    def get_access_level(self, agent_id):
        """Determine access based on reputation"""
        score = self.reputation_store.get(agent_id, {}).get('score', self.INITIAL_SCORE)
        if score >= 150:
            return 'premium'
        elif score >= 100:
            return 'standard'
        elif score >= 50:
            return 'limited'
        else:
            return 'restricted'
```

Reputation-based access control:

```python
from flask import Flask, request

app = Flask(__name__)
reputation_system = AgentReputationSystem()

@app.route('/api/products')
def get_products():
    agent_id = identify_agent(request)  # placeholder: API key, signed header, etc.
    reputation = reputation_system.get_access_level(agent_id)

    if reputation == 'premium':
        # High-reputation agents get real-time data
        return get_realtime_products()
    elif reputation == 'standard':
        # Standard agents get cached data (max_age in seconds)
        return get_cached_products(max_age=300)
    elif reputation == 'limited':
        # New/unknown agents get basic data
        return get_basic_products()
    else:
        # Low-reputation agents restricted
        return {'error': 'Reputation too low'}, 403
```

Privacy-Preserving Agent Interactions

How Will Privacy Regulations Affect Agent Access?

Stricter data minimization and purpose limitation for agent interactions.

Privacy-preserving patterns:

```python
class PrivacyPreservingAPI:
    def __init__(self, audit_log):
        self.audit_log = audit_log  # any object with a record(dict) method

    def get_product_data(self, product_id, agent_purpose, agent_id):
        """Return data appropriate to stated purpose"""
        base_data = {
            'id': product_id,
            'name': self.get_name(product_id),
            'category': self.get_category(product_id),
        }

        # Only include data relevant to purpose
        if agent_purpose == 'price_comparison':
            base_data['price'] = self.get_price(product_id)
            base_data['availability'] = self.get_availability(product_id)
            # Don't include customer reviews, detailed specs, etc.
        elif agent_purpose == 'technical_analysis':
            base_data['specifications'] = self.get_specs(product_id)
            base_data['materials'] = self.get_materials(product_id)
            # Don't include pricing, customer data
        elif agent_purpose == 'sustainability_assessment':
            base_data['carbon_footprint'] = self.get_footprint(product_id)
            base_data['certifications'] = self.get_certifications(product_id)
            # Only sustainability-related data

        # Log access for audit; sorted() stores a snapshot list, not a live view
        self.audit_log.record({
            'agent': agent_id,
            'purpose': agent_purpose,
            'data_accessed': sorted(base_data),
        })
        return base_data
```
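The same purpose limitation can be expressed as data rather than branches, which makes the policy easier to review and audit. A sketch with a hypothetical purpose-to-fields allowlist, mirroring the purposes above: unknown purposes receive only the base fields, so the policy fails closed.

```python
# Hypothetical allowlist: which fields each declared purpose may see
BASE_FIELDS = {"id", "name", "category"}
PURPOSE_FIELDS = {
    "price_comparison": {"price", "availability"},
    "technical_analysis": {"specifications", "materials"},
    "sustainability_assessment": {"carbon_footprint", "certifications"},
}


def minimize(record, purpose):
    """Return only the fields the stated purpose justifies (data minimization)."""
    allowed = BASE_FIELDS | PURPOSE_FIELDS.get(purpose, set())
    return {key: value for key, value in record.items() if key in allowed}
```

Because the allowlist is plain data, it can be versioned, diffed in code review, and published so agents know in advance what each purpose will return.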

Differential privacy for aggregates:

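```python
import numpy as np


def get_aggregate_statistics(category, sensitivity, epsilon=1.0):
    """Provide statistics without exposing individual records.

    sensitivity: the most a single record can change the true average.
    epsilon: privacy budget; smaller means more noise and stronger privacy.
    """
    true_average = calculate_true_average_price(category)  # placeholder helper
    # Laplace noise with scale sensitivity / epsilon gives epsilon-differential privacy
    noise = np.random.laplace(0, sensitivity / epsilon)
    return {
        'average_price': true_average + noise,
        'epsilon': epsilon,  # Privacy budget consumed
        'method': 'differential_privacy',
    }
```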
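The snippet above takes `sensitivity` as given. For an average, one common way to bound it is to clip every value to a known range [0, max_value]; if the number of records n is treated as public, replacing one record then moves the mean by at most max_value / n. A stdlib-only sketch of that Laplace mechanism, with hypothetical names, drawing Laplace noise as the difference of two independent exponential samples (equivalent in distribution to NumPy's `np.random.laplace`):

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two Exponential(mean=scale) draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_mean(values, max_value, epsilon, rng=random):
    """Epsilon-differentially private mean of values clipped to [0, max_value].

    Assumes the number of records is public, so the sensitivity of the
    clipped mean is max_value / n.
    """
    if not values:
        raise ValueError("need at least one value")
    clipped = [min(max(v, 0.0), max_value) for v in values]
    n = len(clipped)
    sensitivity = max_value / n
    return sum(clipped) / n + laplace_noise(sensitivity / epsilon, rng)
```

Clipping is what makes the privacy guarantee hold: without a bound, a single extreme price could shift the mean by an arbitrary amount. The trade-off is bias when real values exceed `max_value`.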

Federated learning support:

```python
from flask import Flask, request

app = Flask(__name__)

# Allow agents to learn patterns without accessing raw data
@app.route('/api/federated-learning/gradients', methods=['POST'])
def contribute_gradients():
    """Accept model gradients from agents without sharing data"""
    agent_gradients = request.json['gradients']
    # Aggregate with other agents' gradients (aggregate_gradients is a placeholder)
    aggregated = aggregate_gradients(agent_gradients)
    # Update shared model
    update_global_model(aggregated)
    # Return updated model without exposing training data
    return {'model_update': get_model_update()}
```
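`aggregate_gradients` is a placeholder. A widely used choice is federated averaging (FedAvg), which weights each participant's update by how many local examples produced it. A minimal sketch, assuming each agent submits its update as a flat list of floats together with its example count; the function name is hypothetical, and production systems typically add secure aggregation and defenses against poisoned updates, since any agent can submit arbitrary numbers.

```python
def federated_average(updates):
    """Weighted average of model updates, FedAvg-style.

    updates: list of (weights, num_examples) pairs; every weights list must have
    the same length. Agents with more local data count proportionally more.
    """
    if not updates:
        raise ValueError("no updates to aggregate")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("num_examples must sum to a positive number")
    length = len(updates[0][0])
    if any(len(w) != length for w, _ in updates):
        raise ValueError("all updates must have the same shape")
    return [sum(w[i] * n for w, n in updates) / total for i in range(length)]
```

For example, `federated_average([([1.0, 2.0], 1), ([3.0, 6.0], 3)])` returns `[2.5, 5.0]`: the second agent's update counts three times as much.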

Continuous Learning and Adaptive Systems

Should Infrastructure Support Agent Learning?

Yes—enable agents to improve from experience with your systems.

Feedback loops:

```python
from flask import Flask, request

app = Flask(__name__)

@app.route('/api/feedback', methods=['POST'])
def receive_agent_feedback():
    """Collect agent feedback for system improvement"""
    feedback = request.json

    # Agent reports task success/failure
    if feedback['type'] == 'task_outcome':
        learn_from_outcome(
            agent=feedback['agent_id'],
            task=feedback['task'],
            success=feedback['successful'],
            issues=feedback.get('issues', []),
        )
        # Adjust system for better agent experience
        if not feedback['successful']:
            investigate_failure(feedback)

    # Agent suggests improvements
    elif feedback['type'] == 'improvement_suggestion':
        queue_for_review({
            'agent': feedback['agent_id'],
            'suggestion': feedback['suggestion'],
            'priority': calculate_priority(feedback),
        })

    return {'status': 'feedback_received'}


def learn_from_outcome(agent, task, success, issues):
    """Adapt system based on agent feedback.

    Assumes task is a dict with 'target', 'completion_time' and, optionally,
    'expected_time' (both times in the same unit).
    """
    if not success and 'rate_limit' in issues:
        # Rate limiting prevented task completion:
        # consider increasing limits for this agent/task combination
        review_rate_limit(agent, task)

    if not success and 'missing_data' in issues:
        # Agent couldn't find required data: identify and add missing structured data
        enhance_structured_data(task['target'])

    expected = task.get('expected_time')
    if success and expected is not None and task['completion_time'] < expected:
        # Agent completed faster than expected: learn what optimizations worked
        analyze_successful_pattern(agent, task)
```
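Individual reports feed `learn_from_outcome`, but deciding which fix to make first benefits from a view across many agents. A small sketch, assuming feedback items are stored in the shape the endpoint above accepts: tally issues from failed `task_outcome` reports, counting each issue once per report so repeating it within one report does not inflate its count. The function name is hypothetical.

```python
from collections import Counter


def top_issues(feedback_items, limit=3):
    """Count issues across failed task_outcome reports, most frequent first."""
    counts = Counter()
    for item in feedback_items:
        if item.get("type") == "task_outcome" and not item.get("successful", True):
            counts.update(set(item.get("issues", [])))  # once per report
    return counts.most_common(limit)
```

If `missing_data` tops the list, enriching structured data is likely to help more agents than raising rate limits for one.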

Personalization for returning agents:

```python
from collections import Counter


class AgentPersonalization:
    def __init__(self):
        self.agent_preferences = {}

    def learn_preferences(self, agent_id, interaction):
        """Learn agent preferences over time"""
        prefs = self.agent_preferences.setdefault(agent_id, {
            'preferred_format': None,
            'format_counts': Counter(),
            'typical_data_needs': [],
            'access_patterns': [],
            'performance_sensitivity': 'medium',
        })

        # Learn format preferences: the most frequently used format wins
        prefs['format_counts'][interaction['format']] += 1
        prefs['preferred_format'] = prefs['format_counts'].most_common(1)[0][0]

        # Learn data needs
        for field in interaction['fields_accessed']:
            if field not in prefs['typical_data_needs']:
                prefs['typical_data_needs'].append(field)

        # Adapt responses
        self.optimize_for_agent(agent_id)

    def optimize_for_agent(self, agent_id):
        """Customize experience for agent"""
        prefs = self.agent_preferences[agent_id]
        # Pre-cache frequently accessed data (cache_for_agent is a placeholder)
        cache_for_agent(agent_id, prefs['typical_data_needs'])
        # Prepare in preferred format (prerender_format is a placeholder)
        prerender_format(agent_id, prefs['preferred_format'])
```

Testing Future-Ready Infrastructure

How Do You Test for Unknown Capabilities?

Implement capability expansion testing with synthetic future agents.

Future agent simulation:

```python
import json

import requests
from bs4 import BeautifulSoup


class FutureAgentSimulator:
    """Simulate agents with capabilities beyond current state.

    load_projected_capabilities, extract_json_ld, relates_modalities and
    generate_improvements are placeholders you would implement yourself.
    """

    def __init__(self, capability_level='2027'):
        self.capabilities = self.load_projected_capabilities(capability_level)

    def test_multimodal_understanding(self, url):
        """Test as if agent can process multiple modalities"""
        response = requests.get(url, timeout=10)
        soup = BeautifulSoup(response.content, 'html.parser')

        # Check for multimodal content
        has_images = len(soup.find_all('img')) > 0
        has_video = len(soup.find_all('video')) > 0
        has_3d = len(soup.find_all('link', {'type': 'model/gltf+json'})) > 0

        # Check for cross-modal relationships
        structured_data = extract_json_ld(soup)
        has_modal_relationships = (
            'image' in structured_data and
            'video' in structured_data and
            relates_modalities(structured_data)
        )

        return {
            'multimodal_ready': all([
                has_images, has_video, has_3d, has_modal_relationships
            ]),
            'recommendations': generate_improvements(),
        }

    def test_reasoning_support(self, api_endpoint):
        """Test if API supports advanced reasoning"""
        response = requests.get(api_endpoint, timeout=10)
        data = response.json()

        # Check for causal relationships
        has_causality = 'causes' in data or 'effects' in data
        # Check for constraints
        has_constraints = 'constraints' in data or 'limitations' in data
        # Check for probabilistic data anywhere in the payload (keys or values)
        has_probability = 'probability' in json.dumps(data)

        return {
            'reasoning_ready': all([
                has_causality, has_constraints, has_probability
            ])
        }
```
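`extract_json_ld` is left undefined in the simulator. A stdlib-only sketch using `html.parser`, assuming JSON-LD lives in `<script type="application/ld+json">` elements and that media are declared under the property names used in the multimodal example earlier (`image`, `video`, `audio`, `3dModel`); the class and function names are hypothetical. Malformed blocks are skipped here, though a real audit would want to report them.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the parsed contents of every <script type="application/ld+json">."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_json_ld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self._in_json_ld = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # skip malformed JSON-LD in this sketch


def declared_modalities(html, keys=("image", "video", "audio", "3dModel")):
    """Return which media properties the page's JSON-LD declares."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    found = set()
    for block in parser.blocks:
        for item in block if isinstance(block, list) else [block]:
            if isinstance(item, dict):
                found.update(key for key in keys if key in item)
    return found
```

Comparing the returned set with the media actually present in the HTML shows which modalities are visible to humans but undeclared for agents.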

Capability stress testing:

```python
import concurrent.futures

import numpy as np


def test_agent_capability_limits():
    """Test infrastructure under advanced agent scenarios"""
    # Simulate 1000 agents with 2027-level capabilities.
    # comprehensive_query is a placeholder assumed to return
    # {'success': bool, 'latency': milliseconds}
    agents = [FutureAgentSimulator('2027') for _ in range(1000)]

    # Agents request multimodal data concurrently, 100 at a time
    with concurrent.futures.ThreadPoolExecutor(max_workers=100) as executor:
        futures = [
            executor.submit(agent.comprehensive_query, '/products/12345')
            for agent in agents
        ]
        results = [f.result() for f in futures]

    # Measure infrastructure performance
    success_rate = sum(r['success'] for r in results) / len(results)
    avg_latency = np.mean([r['latency'] for r in results])

    assert success_rate > 0.95, "Infrastructure not ready for agent scale"
    assert avg_latency < 1000, "Latency too high for future agents"
```
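The stress test asserts on mean latency, which can look healthy while a minority of requests are very slow. A sketch of a fuller summary over the same `r['latency']` values, assuming they are in milliseconds, using the standard library's `statistics.quantiles` for the 95th percentile; the function name is hypothetical.

```python
import statistics


def latency_summary(latencies_ms):
    """Mean, median, and 95th percentile of request latencies (milliseconds)."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two samples")
    cuts = statistics.quantiles(latencies_ms, n=100)  # 99 cut points: p1..p99
    return {
        "mean": statistics.fmean(latencies_ms),
        "p50": statistics.median(latencies_ms),
        "p95": cuts[94],
    }
```

Asserting on `p95` alongside the mean catches the case where most agents are served quickly but a long tail times out.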

Should You Implement Capability Negotiation Testing?

Yes—verify your systems can adapt to new agent capabilities.

Capability negotiation test:

```python
import requests

BASE_URL = 'https://staging.example.com'  # hypothetical test deployment


def test_capability_negotiation():
    """Test system adapts to agent capabilities"""
    # Agent declares advanced capabilities
    advanced_headers = {
        'User-Agent': 'FutureBot/3.0',
        'X-Agent-Capabilities': 'multimodal,reasoning,collaboration',
        'X-Agent-Version': '3.0',
        'Accept': 'application/ld+json',
    }
    response = requests.get(f'{BASE_URL}/api/v3/products/12345',
                            headers=advanced_headers, timeout=10)
    data = response.json()

    # Verify enhanced response for advanced agent
    assert 'multimodal_data' in data, "Should include multimodal data"
    assert 'causal_model' in data, "Should include reasoning support"
    assert 'agent_coordination_hints' in data, "Should include coordination metadata"

    # Basic agent gets simpler response
    basic_headers = {'User-Agent': 'BasicBot/1.0', 'Accept': 'application/json'}
    response = requests.get(f'{BASE_URL}/api/v3/products/12345',
                            headers=basic_headers, timeout=10)
    data = response.json()

    # Verify graceful degradation
    assert 'name' in data and 'price' in data, "Basic data present"
    assert 'multimodal_data' not in data, "Advanced features absent"
```

FAQ: Future-Proofing for Agentic Web

How far ahead should I design for?

Design core architecture for 5-7 years, with 3-year review cycles. Your fundamental decisions (API design, data models, semantic structure) should anticipate 2030 agent capabilities. Tactical implementations (specific endpoints, current agent optimizations) can use 2-3 year horizons. Key principle: Make architecture flexible enough to support unknown future capabilities without requiring complete redesign. Invest in extensibility, versioning, and capability negotiation rather than trying to predict specific agent features.

What’s the ROI of future-proofing investments?

Difficult to quantify precisely, but risk mitigation is substantial. Organizations that built mobile-first in 2008 vs. 2012 saw 4x better outcomes. Similar dynamic applies to agent-readiness. Conservative estimate: 20-30% of agent-mediated revenue by 2028 will require capabilities implemented 2024-2026. Cost of retrofitting exceeds cost of building right initially by 3-5x. Early investments create competitive advantages—agents preferentially use well-architected platforms. Frame as insurance against obsolescence rather than speculative bet.

Should I wait for standards to emerge or build now?

Build with current best practices plus flexibility for evolution. Waiting for perfect standards hands competitors a 2-3 year head start. Avoid proprietary lock-in, however: use widely adopted formats (Schema.org, JSON-LD, GraphQL) that evolve with community input. Implement versioning and capability negotiation so you can adopt new standards without breaking existing agent integrations. Build 80% on established patterns and 20% on experimental support for emerging capabilities. Active participation in standards development (W3C, Schema.org) lets you help shape standards rather than adopt them reactively.
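The versioning half of that advice can be sketched as a small resolver. This assumes the agent states the API version it wants as a string such as "2.1" (whether through a header or a URL prefix is left open), and the supported versions listed are hypothetical. The resolver serves the newest supported version that does not exceed the request, so an agent written against an older contract keeps receiving it after newer versions ship.

```python
from __future__ import annotations

from typing import Optional

# Hypothetical versions this server still honors, as (major, minor) pairs.
SUPPORTED_VERSIONS = [(1, 0), (2, 0), (2, 1), (3, 0)]


def parse_version(text: str) -> tuple[int, int]:
    """Turn '2.1' into (2, 1) and '3' into (3, 0)."""
    parts = text.strip().split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    return (major, minor)


def select_version(requested: Optional[str]) -> tuple[int, int]:
    """Serve the requested version, or the closest older one we support.

    Agents that send no version, or one older than anything supported, get
    the oldest stable contract rather than an error.
    """
    if not requested:
        return min(SUPPORTED_VERSIONS)
    wanted = parse_version(requested)
    compatible = [v for v in SUPPORTED_VERSIONS if v <= wanted]
    return max(compatible) if compatible else min(SUPPORTED_VERSIONS)


print(select_version("2.5"))  # (2, 1)
print(select_version("9"))    # (3, 0)
print(select_version(None))   # (1, 0)
```

Falling back to the oldest contract is a conservative choice; a stricter API might instead reject unknown versions with an explicit error.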

How do I convince leadership to invest in speculative future capabilities?

Frame the case around competitive risk and opportunity cost. Research and quantify: (1) the share of your industry’s future transactions that will be agent-mediated (typically 20-40% by 2028); (2) the revenue at risk from poor agent experiences; (3) which competitors are investing in agent infrastructure; and (4) the cost of retrofitting versus building correctly now (retrofitting is typically 3-5x more expensive). Present the investment as “infrastructure for an inevitable future,” not a “speculative bet.” Use pilot programs to demonstrate value: implement for one product line, measure results, and scale based on evidence.
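Steps (2) and (4) reduce to simple arithmetic. The sketch below uses placeholder inputs: the revenue figure, the 10% loss rate, and the $400,000 build-now cost are hypothetical and should be replaced with your own research.

```python
def revenue_at_risk(annual_revenue: float, agent_share: float, loss_rate: float) -> float:
    """Revenue exposed if agent-mediated sales degrade.

    agent_share: fraction of transactions expected to be agent-mediated.
    loss_rate:   fraction of those transactions lost to poor agent experiences.
    """
    return annual_revenue * agent_share * loss_rate


def retrofit_premium(build_now_cost: float, multiplier: float) -> float:
    """Extra spend from retrofitting later instead of building correctly now."""
    return build_now_cost * multiplier - build_now_cost


# Placeholder inputs (hypothetical).
revenue = 50_000_000   # annual revenue in dollars
agent_share = 0.30     # inside the 20-40% range cited above
loss_rate = 0.10       # assume 10% of agent-mediated sales are lost
build_now = 400_000    # assumed cost of building agent-ready infrastructure now

at_risk = revenue_at_risk(revenue, agent_share, loss_rate)
premium_low = retrofit_premium(build_now, 3)
premium_high = retrofit_premium(build_now, 5)
print(f"Revenue at risk: ${at_risk:,.0f}/year")                        # $1,500,000/year
print(f"Retrofit premium: ${premium_low:,.0f} to ${premium_high:,.0f}")  # $800,000 to $1,600,000
```

Setting the annual revenue at risk next to the one-time retrofit premium gives leadership a concrete comparison rather than an abstract forecast.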

What if I’ve already optimized for current agents—do I need to rebuild?

You are unlikely to need a complete rebuild, but strategic enhancements are essential. Audit your current implementation against future-ready principles: (1) Can you add new capabilities without breaking existing agent integrations? (2) Does your architecture support multimodal content? (3) Can agents with unknown capabilities negotiate appropriate responses? (4) Is your API extensible? Most organizations can enhance existing infrastructure through versioning, additive fields, new endpoints alongside existing ones, and capability-negotiation layers. Prioritize fixing architectural limitations (tight coupling, lack of versioning) over tactical optimizations.
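Question (1) of that audit can be partly automated. The sketch below compares two simplified response schemas, each a hypothetical `{field: type}` mapping, and reports removed fields and changed types. Added fields pass, since additive changes are the backward-compatible kind. Real schemas (JSON Schema, OpenAPI, GraphQL SDL) carry far more detail, so treat this as a first-pass check.

```python
from __future__ import annotations


def breaking_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """List non-additive changes from old to new; an empty list means additive-only."""
    problems = []
    for field, old_type in old.items():
        if field not in new:
            problems.append(f"removed field '{field}'")
        elif new[field] != old_type:
            problems.append(f"'{field}' changed {old_type} -> {new[field]}")
    return problems


v1 = {"name": "string", "price": "number"}
v2 = {"name": "string", "price": "number", "multimodal_data": "object"}  # additive
v3 = {"title": "string", "price": "string"}                              # breaking

print(breaking_changes(v1, v2))  # []
print(breaking_changes(v1, v3))  # ["removed field 'name'", "'price' changed number -> string"]
```

Running a check like this in CI before each release makes "no breaking changes" an enforced rule rather than a convention.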

How do I balance optimization for current vs. future agents?

80/20 rule: 80% focus on serving current agents effectively, 20% building future capability foundations. Current agents drive today’s business results—optimize their experiences aggressively. But allocate 20% of agent-related development to future-proofing: capability negotiation, multimodal preparation, semantic richness, API flexibility. These investments pay dividends when next-generation agents emerge. Avoid pure speculation (building for capabilities that may never materialize) while preparing foundations (extensibility, versioning, semantic depth) that benefit both current and future agents.

Final Thoughts

Future-proofing agentic web infrastructure isn’t about predicting the unpredictable—it’s about building systems flexible enough to evolve with rapidly advancing agent capabilities.

The agents of 2028 will be as different from today’s agents as today’s smartphones are from 2007’s first iPhone. Your infrastructure decisions today determine whether you can serve those advanced agents effectively or need costly, disruptive rebuilds.

Organizations thriving in the agent economy don’t build for current state alone—they design flexible architectures that expand with agent capability frontiers across reasoning, multimodality, collaboration, and autonomy.

Start with solid foundations: semantic richness, API extensibility, versioning discipline, capability negotiation. Enhance progressively: multimodal preparation, collaboration protocols, privacy preservation, continuous learning.

Your infrastructure investments today are insurance policies against technical debt tomorrow and competitive advantages when advanced agents become commonplace.

Build flexibly. Version carefully. Test ambitiously. Evolve continuously.

The future of the web is agentic. The question is whether your infrastructure will support that future—or require expensive rebuilding when it arrives.

Citations

Gartner Press Release – Autonomous AI Agents Mainstream by 2028

McKinsey Digital – Economic Potential of Generative AI

W3C – Semantic Web Standards

OpenAI – Research Directions

GitHub Blog – GraphQL Adoption

DeepMind – Multi-Agent Research

Google Developers – ARCore Documentation

Schema.org – Vocabulary Documentation

Future-Proofing for the Agentic Web

Anticipating Next-Generation AI Agent Capabilities (2024-2030)

Agent Capability Evolution Timeline

Capabilities: Narrow task automation (price checking, content aggregation), scripted workflows with limited adaptation, single-modality processing (primarily text), individual agent operation, rule-based decision making, static knowledge bases.

Capabilities: Broad task completion across domains, adaptive problem-solving with learning, dual-modality integration (text + images commonly), basic agent collaboration, contextual decision-making, continuously updated knowledge.

Capabilities: Complex multi-step autonomous operations, creative problem-solving and strategy, full multimodal understanding (text, image, video, audio, 3D), sophisticated agent swarms, ethical and nuanced reasoning, real-time knowledge synthesis.

Capabilities: Human-level reasoning across domains, true AGI characteristics, quantum-enhanced processing, seamless human-agent collaboration, autonomous strategic planning, continuous learning and adaptation, full sensory integration.

Current vs. Future-Ready Architecture

| Aspect | Current Optimization | Future-Proof Approach |
|---|---|---|
| Data Structure | Simple JSON with basic fields | Rich semantic graphs with relationships |
| Content Format | Text-centric single modality | Multimodal with cross-references |
| API Design | REST with fixed endpoints | GraphQL + capability negotiation |
| Agent Support | Specific known agent types | Adaptive capability-based serving |
| Collaboration | Single agent interactions | Multi-agent coordination protocols |
| Versioning | Breaking changes on updates | Backward-compatible extensions |
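The first two rows of the table can be made concrete with a small Python sketch that emits Schema.org JSON-LD for a product, linking it to related products, an image, and a demo video. `Product`, `Offer`, `VideoObject`, `isRelatedTo`, and `subjectOf` are real Schema.org types and properties; the product data, SKUs, and example.com URLs are placeholders.

```python
import json


def product_jsonld(sku: str, name: str, price: str, related_skus: list) -> dict:
    """Build a Schema.org Product node whose links form a small traversable graph."""
    base = "https://example.com/products/"  # placeholder origin
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "@id": base + sku,
        "sku": sku,
        "name": name,
        "image": base + sku + "/front.jpg",
        # Cross-modal reference: a video whose subject is this product.
        "subjectOf": {
            "@type": "VideoObject",
            "name": f"{name} demo",
            "contentUrl": base + sku + "/demo.mp4",
        },
        "offers": {"@type": "Offer", "price": price, "priceCurrency": "USD"},
        # Relationships by @id let agents follow links instead of re-searching.
        "isRelatedTo": [{"@type": "Product", "@id": base + s} for s in related_skus],
    }


doc = product_jsonld("12345", "Trail Runner 2", "129.00", ["12346", "20001"])
print(json.dumps(doc, indent=2))
```

Stable `@id` URLs do most of the work here: they let any agent merge this node with others into one graph.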

Charts: Technology Adoption Trajectory (2024-2030); Critical Future-Proofing Capabilities; Capability Expansion Projections; Infrastructure Readiness Assessment; Semantic Depth (W3C Standard); Model Scale Evolution Impact; Agent-Human Collaboration Evolution

Investment Priority Matrix

| Investment Area | Time Horizon | Expected ROI | Priority |
|---|---|---|---|
| Semantic Enrichment | Immediate-2027 | High (4.3x agent engagement) | 🔴 Critical |
| API Versioning | Immediate-2026 | High (prevents costly rewrites) | 🔴 Critical |
| Multimodal Preparation | 2025-2028 | Medium-High | 🟡 Important |
| Collaboration Protocols | 2026-2029 | Medium | 🟡 Important |
| Spatial/AR Support | 2027-2030 | Medium (industry-dependent) | 🟢 Beneficial |
| Quantum Preparation | 2028+ | Unknown (experimental) | 🔵 Optional |

Prepare Your Infrastructure for the Agent-Driven Future

aiseojournal.net - Leading Agentic Web Intelligence & Strategy

Data Sources: Gartner 2024, McKinsey Digital 2024, W3C Semantic Web Standards, OpenAI Research
