Your beautifully crafted blog post? An AI agent just bounced off it like a ping-pong ball.

While you’ve been obsessing over headline psychology and reading flow, autonomous agents are trying to extract your content through metaphorical straws. They don’t care about your compelling narrative arc—they need clean, structured data they can actually consume.

Enter API-first content strategy: the paradigm shift that treats content as structured data from day one, not as an afterthought. And if you’re still thinking “content management” instead of “content delivery systems,” you’re already three steps behind the agents knocking at your digital door.

What Exactly Is API-First Content Strategy?

API-first content strategy means designing your content architecture around programmatic access before human presentation.

Instead of creating content in WordPress and hoping to extract it later, you structure data in systems built for distribution via APIs. Content becomes modular, reusable, and machine-readable by default.

Think of traditional content management as building a beautiful house, then trying to sell individual bricks. The API-first approach builds a brick factory that happens to also make beautiful houses.

According to Gartner’s 2024 digital experience report, 63% of organizations are adopting composable architecture for content delivery—essentially committing to API-first thinking whether they call it that or not.

Why Traditional CMS Approaches Fail AI Agent Consumption

Your WordPress install wasn’t designed for robots. Full stop.

Traditional content management systems optimize for human editors creating content for human readers. The entire workflow—WYSIWYG editors, page builders, theme templates—assumes visual presentation is the primary goal.

The fundamental problems:

| Traditional CMS | API-First Content Strategy |
|---|---|
| Content locked in HTML templates | Content as pure data |
| Presentation and data mixed | Clean separation of concerns |
| Manual extraction required | Programmatic access built-in |
| Single-channel focus | Omnichannel by design |
| Edit in context of layout | Edit pure structured data |

A SEMrush study from late 2024 found that 67% of content teams struggle to repurpose content for AI applications because their CMS wasn’t built with structured content delivery in mind.

Core Principles of API-First Content Architecture

Should Content Creation and Content Presentation Be Separate?

Absolutely, and this separation is revolutionary.

In an API content architecture model, writers create structured content without knowing (or caring) how it will be displayed. Frontend developers consume that content through APIs and render it however needed—website, app, agent interface, voice assistant.

This decoupling means one piece of content serves infinite presentations. Your product description works on your website, in your mobile app, through voice search, AND when an AI agent queries your catalog.

How Do You Structure Content for Maximum API Flexibility?

Break everything into atomic components that can be mixed and matched.

Instead of “blog post,” think: title (string), summary (text), body (rich text blocks), author (reference), publication date (timestamp), categories (array), featured image (asset reference), related products (array of references).
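A minimal sketch of that breakdown as typed Python dataclasses. The class names (`BlogPost`, `Reference`) and the shape of the rich-text blocks are illustrative assumptions; in a headless CMS you would normally declare these fields in the platform's content-model editor. The point is only that every field has an explicit type and that references point to other items by ID instead of copying them.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Reference:
    """Typed pointer to another content item instead of a copy of it."""
    content_type: str  # e.g. "author", "asset", "product"
    id: str


@dataclass
class BlogPost:
    title: str                   # string
    summary: str                 # plain text
    body: list[dict]             # rich text as a list of typed blocks
    author: Reference            # reference to an author item
    published_at: datetime       # timestamp
    categories: list[str] = field(default_factory=list)           # array
    featured_image: Optional[Reference] = None                    # asset reference
    related_products: list[Reference] = field(default_factory=list)


post = BlogPost(
    title="API-First Content",
    summary="Why content should be structured data.",
    body=[{"type": "paragraph", "text": "Content is data."}],
    author=Reference("author", "auth-42"),
    published_at=datetime(2024, 5, 1, tzinfo=timezone.utc),
    categories=["content-strategy"],
    related_products=[Reference("product", "sku-1001")],
)

payload = asdict(post)  # nested dataclasses become plain dicts
payload["published_at"] = post.published_at.isoformat()  # ISO 8601 for JSON
print(payload["author"])  # {'content_type': 'author', 'id': 'auth-42'}
```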

Pro Tip: “The smaller your content chunks, the more flexible your API responses become. Aim for components that have independent meaning but can combine to create complex narratives.” — Karen McGrane, Content Strategy Expert

Each component should have clearly defined data types, validation rules, and metadata. This isn’t just organization—it’s enabling agents to understand your content’s structure programmatically.

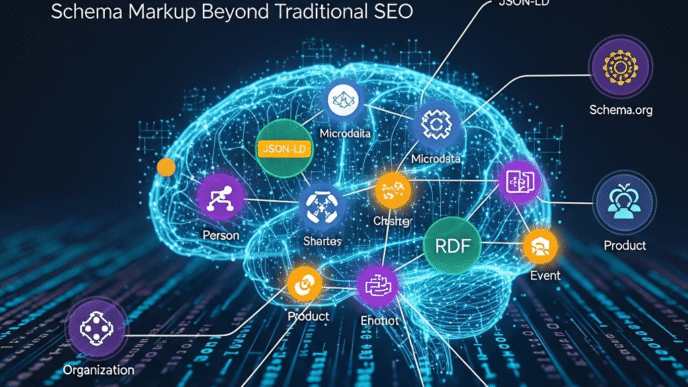

What Role Does Schema Definition Play in Agent-Friendly APIs?

Schema definition is the contract between your content and the agents consuming it.

When you define that “price” is always a decimal number with two decimal places in USD, agents know exactly how to process it. No parsing strings like “$19.99” and hoping for the best.

Use tools like JSON Schema or GraphQL’s type system to create explicit, enforceable content models. These schemas become documentation that both humans and agents can reference.
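To make the `price` contract concrete, the sketch below enforces it at the API boundary: the amount travels as a decimal string with exactly two places, and the currency must be `USD`. `Price` and `parse_price` are hypothetical names; a JSON Schema or GraphQL type would declare the same rule, and this is roughly what enforcing it amounts to.

```python
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation


@dataclass(frozen=True)
class Price:
    amount: Decimal
    currency: str


def parse_price(raw: dict) -> Price:
    """Accept {"amount": "19.99", "currency": "USD"}; reject anything looser."""
    try:
        # The amount is a string so binary-float rounding never sneaks in.
        amount = Decimal(raw["amount"])
    except (KeyError, TypeError, InvalidOperation) as exc:
        raise ValueError(f"invalid amount: {raw!r}") from exc
    # Exactly two decimal places: "19.99" passes, "19.9" and "$19.99" do not.
    if not amount.is_finite() or amount.as_tuple().exponent != -2:
        raise ValueError("amount must have exactly two decimal places")
    if raw.get("currency") != "USD":
        raise ValueError("currency must be USD")
    return Price(amount, "USD")
```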

Implementing Headless CMS for Agent Consumption

What Makes Headless CMS Superior for AI Agents?

A headless CMS doesn't waste time rendering HTML nobody asked for.

A headless CMS stores pure content data and delivers it via APIs—typically RESTful or GraphQL. There’s no “frontend” in the traditional sense. Every consumer (website, app, agent) requests exactly the data it needs.

Platforms like Contentful, Sanity, and Strapi are purpose-built for this paradigm. They treat content as structured data from the ground up.

Real example: Spotify uses a headless approach to deliver content to its web app, mobile apps, desktop clients, car interfaces, and smart speakers, all from the same content API. The same pattern gives AI agents a single set of endpoints to query.

Should You Build Custom vs. Use Existing Headless Platforms?

For most organizations, existing platforms beat custom solutions.

Building a production-ready headless CMS is a massive engineering undertaking. You need content modeling, version control, workflow management, permission systems, API infrastructure, caching layers, and more.

Unless you’re a massive organization with unique requirements, leverage platforms that have solved these problems. Your competitive advantage is your content and business logic, not your CMS infrastructure.

How Do You Handle Content Versioning for Agent Reliability?

Agents need predictable, stable data structures even as your content evolves.

Implement API versioning (v1, v2, v3) so agents can continue using stable endpoints while you innovate. Never break backward compatibility without warning and migration paths.

Version individual content items too. An agent that cached your product details yesterday should be able to request updates rather than re-fetching everything.

According to Ahrefs’ API best practices guide, proper versioning reduces agent errors by up to 78% during platform updates.

Designing Content APIs That Agents Actually Want

What API Style Works Best: REST vs. GraphQL vs. gRPC?

Each has trade-offs, but GraphQL increasingly wins for content APIs agents consume.

REST is simple and cacheable, but fixed endpoints often return more fields than an agent needs (over-fetching) or force it to make several requests to assemble one answer (under-fetching). GraphQL lets agents request exactly what they need in a single query. gRPC is very fast but has a steeper learning curve.

For content delivery specifically, GraphQL’s flexibility shines. An agent can request a product’s title, price, and availability in one query—no more, no less.

| API Style | Best For | Agent Advantage |
|---|---|---|
| REST | Simple CRUD operations | Universal compatibility, excellent caching |
| GraphQL | Complex, relationship-heavy content | Precision queries, reduced bandwidth |
| gRPC | High-performance internal systems | Speed, efficiency, strong typing |

How Should You Structure API Responses for Agent Parsing?

Consistency and predictability trump clever design.

Always return data in the same shape. If a product has an images array, it should ALWAYS be an array—even if empty. Don’t return null sometimes and an array other times.

Include metadata in every response: timestamps, version numbers, links to related resources. Agents use this contextual information for caching, validation, and decision-making.

Implement HATEOAS (Hypermedia as the Engine of Application State) principles. Include links to related resources directly in responses so agents can navigate your content graph autonomously.
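Here is a sketch of a serializer that applies those three rules to a product: list fields are always lists, every response carries a `meta` block, and a `links` block tells agents where related resources live. The field names, `BASE_URL`, and URL layout are hypothetical conventions, not a specific standard such as JSON:API or HAL.

```python
from datetime import datetime, timezone

BASE_URL = "https://api.example.com/v1"  # hypothetical


def product_response(row: dict) -> dict:
    """Build a product payload whose shape never varies between calls."""
    product_url = f"{BASE_URL}/products/{row['id']}"
    return {
        "data": {
            "id": row["id"],
            "title": row["title"],
            # Always a list: missing or NULL images become [], never null.
            "images": list(row.get("images") or []),
        },
        "meta": {
            "version": row.get("version", 1),
            "updated_at": row["updated_at"].isoformat(),  # ISO 8601
        },
        # Hypermedia links so agents can follow the content graph
        # without hard-coding URL templates.
        "links": {
            "self": product_url,
            "reviews": f"{product_url}/reviews",
            "category": f"{BASE_URL}/categories/{row['category_id']}",
        },
    }


row = {"id": "sku-1", "title": "Mug", "images": None, "category_id": "kitchen",
       "updated_at": datetime(2024, 1, 2, tzinfo=timezone.utc)}
print(product_response(row)["data"]["images"])  # []
```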

What Authentication Models Support Both Security and Accessibility?

OAuth 2.0 or API keys for most use cases, combined with tiered rate limits.

Give identified, authorized agents generous rate limits and full access. Provide public endpoints with stricter limits for experimental or low-trust agents.

Implement token-based authentication that doesn’t expire randomly. Agents can’t solve CAPTCHAs or handle complex re-authentication flows mid-task.

Pro Tip: “Design your authentication to fail gracefully. When an agent’s token expires, return a clear 401 status with machine-readable instructions for renewal, not a redirect to a login page.” — Phil Sturgeon, API Expert and Author
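A sketch of what failing gracefully can look like for an expired bearer token. The `WWW-Authenticate` header and the `invalid_token` error code come from RFC 6750 (OAuth 2.0 bearer token usage); the `token_endpoint` and `grant_type` fields in the JSON body are a hypothetical convenience for agents, not part of that standard.

```python
import json


def expired_token_response(token_endpoint: str) -> tuple[int, dict[str, str], str]:
    """Return (status, headers, body) for an expired bearer token."""
    headers = {
        # Error code and header format defined by RFC 6750.
        "WWW-Authenticate": 'Bearer error="invalid_token", '
                            'error_description="The access token expired"',
        "Content-Type": "application/json",
        # Deliberately no Location header: agents get data, not a login page.
    }
    body = {
        "error": "invalid_token",
        "error_description": "The access token expired",
        # Hypothetical hints telling the agent how to renew on its own.
        "token_endpoint": token_endpoint,
        "grant_type": "client_credentials",
    }
    return 401, headers, json.dumps(body)
```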

Content Modeling for Maximum Agent Utility

How Do You Create Reusable Content Components?

Start by identifying atomic units of meaning in your content universe.

For an e-commerce site: products, variants, categories, attributes, reviews, images, videos. For a publisher: articles, authors, topics, media assets, quotes, data points.

Each component gets its own content type with defined fields. Components reference each other (an article references its author) rather than duplicating data.

This modular approach means an agent querying product availability doesn’t wade through marketing copy. An agent needing author bios doesn’t parse through article bodies.

Should Every Field Have Explicit Data Types and Validation?

Without question—ambiguity is the enemy of agent consumption.

Define whether each field is required or optional. Set character limits, number ranges, allowed values. Enforce these rules at the content entry level, not just during API delivery.

When a field must be a URL, make it a URL field type with validation. When it’s a date, use proper datetime formats (ISO 8601). When it’s a reference to another item, use typed references with referential integrity.
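A stdlib-only sketch of field-level validation for a hypothetical `article` content type, covering required fields, length limits, absolute URLs, ISO 8601 datetimes with offsets, and numeric ranges. Most headless platforms offer these checks as built-in field settings; this only shows the logic. Python's `datetime.fromisoformat` accepts most ISO 8601 forms only from Python 3.11 onward; earlier versions parse a narrower subset.

```python
from datetime import datetime
from urllib.parse import urlparse

# Hypothetical rules for an "article" content type.
RULES = {
    "title": {"type": "string", "required": True, "max_length": 120},
    "canonical_url": {"type": "url", "required": True},
    "published_at": {"type": "datetime", "required": True},
    "reading_time_min": {"type": "int", "required": False, "min": 1, "max": 120},
}


def validate(item: dict) -> list[str]:
    """Return a list of error messages; an empty list means the item is valid."""
    errors = []
    for name, rule in RULES.items():
        if name not in item:
            if rule["required"]:
                errors.append(f"{name}: required")
            continue
        value, kind = item[name], rule["type"]
        if kind == "string":
            if not isinstance(value, str):
                errors.append(f"{name}: must be a string")
            elif len(value) > rule["max_length"]:
                errors.append(f"{name}: exceeds {rule['max_length']} characters")
        elif kind == "url":
            parsed = urlparse(value) if isinstance(value, str) else None
            if parsed is None or parsed.scheme not in ("http", "https") or not parsed.netloc:
                errors.append(f"{name}: must be an absolute http(s) URL")
        elif kind == "datetime":
            try:
                # ISO 8601, e.g. "2024-05-01T09:00:00+00:00"
                when = datetime.fromisoformat(value)
            except (TypeError, ValueError):
                errors.append(f"{name}: must be an ISO 8601 datetime")
            else:
                if when.tzinfo is None:
                    errors.append(f"{name}: timezone offset required")
        elif kind == "int":
            # bool is a subclass of int in Python, so exclude it explicitly.
            if not isinstance(value, int) or isinstance(value, bool):
                errors.append(f"{name}: must be an integer")
            elif not rule["min"] <= value <= rule["max"]:
                errors.append(f"{name}: must be between {rule['min']} and {rule['max']}")
    return errors
```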

Statista’s 2024 data quality report revealed that 89% of AI agent failures trace back to inconsistent or ambiguous data types in source systems.

How Do Taxonomies and Tagging Improve Agent Discovery?

Controlled vocabularies transform chaos into navigable structure.

Instead of free-text tags like “AI,” “A.I.,” “artificial intelligence,” and “Artificial Intelligence,” use a controlled taxonomy with canonical values and synonyms mapped internally.

Implement hierarchical categories (Technology > Artificial Intelligence > Machine Learning > Neural Networks) that agents can traverse. Each level provides context for filtering and discovery.
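A minimal sketch of a controlled vocabulary: free-text input is mapped to a canonical slug through a synonym table, and each term knows its parent so the full hierarchy can be walked. The slugs and synonym list are illustrative assumptions.

```python
# Hypothetical canonical terms, each with a display label and parent slug.
CANONICAL = {
    "technology": {"label": "Technology", "parent": None},
    "artificial-intelligence": {"label": "Artificial Intelligence", "parent": "technology"},
    "machine-learning": {"label": "Machine Learning", "parent": "artificial-intelligence"},
    "neural-networks": {"label": "Neural Networks", "parent": "machine-learning"},
}

# Variant spellings mapped internally to one canonical slug.
SYNONYMS = {
    "ai": "artificial-intelligence",
    "a.i.": "artificial-intelligence",
    "artificial intelligence": "artificial-intelligence",
    "ml": "machine-learning",
}


def normalize_tag(raw: str) -> str:
    """Map free-text input to a canonical slug, or raise if it is unknown."""
    key = raw.strip().lower()
    if key in CANONICAL:
        return key
    if key in SYNONYMS:
        return SYNONYMS[key]
    raise KeyError(f"unknown tag: {raw!r}")


def ancestors(slug: str) -> list[str]:
    """Path from the root category down to slug, for filtering or breadcrumbs."""
    path = []
    current = slug
    while current is not None:
        path.append(current)
        current = CANONICAL[current]["parent"]
    return list(reversed(path))
```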

Use industry-standard taxonomies where possible. Schema.org vocabularies, industry-specific classification systems, and established ontologies give agents common ground across your site and the broader web.

Implementing Real-Time Content Delivery for Agents

Why Do Agents Need Real-Time Content Updates?

Stale data leads to bad decisions, and agents make thousands of decisions per second.

An AI shopping agent checking inventory shouldn’t see yesterday’s stock levels. A price-comparison agent needs current pricing, not cached values from last week.

Implement webhooks or Server-Sent Events (SSE) so agents can subscribe to content changes. When your inventory updates, active agents get notified immediately rather than polling constantly.
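The subscribe-and-notify pattern behind webhooks can be sketched in-process. `ChangeFeed` is a hypothetical class; a real webhook system would store subscriber URLs and POST each event to them with retries, and SSE would stream events over an open HTTP connection, but the registration and fan-out logic is the same.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class ChangeFeed:
    """In-process stand-in for a webhook registry keyed by content type."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, content_type: str, handler: Handler) -> None:
        self._subscribers[content_type].append(handler)

    def publish(self, content_type: str, item_id: str, version: int) -> int:
        """Notify subscribers of a change; returns how many were notified."""
        # A "thin" event: agents re-fetch the full item only if they care.
        event = {"type": f"{content_type}.updated", "id": item_id, "version": version}
        handlers = self._subscribers.get(content_type, [])
        for handler in handlers:
            handler(event)
        return len(handlers)
```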

How Should You Handle Content Caching Without Sacrificing Freshness?

Smart cache invalidation is the sweet spot.

Use ETags and conditional requests (If-None-Match headers) so agents only fetch content that’s actually changed. Implement cache headers that match your content’s volatility—5 minutes for stock prices, 24 hours for blog posts, 7 days for company history.

Consider edge caching with intelligent invalidation. When content changes in your headless CMS, trigger cache purges across your CDN so the next agent request gets fresh data.

A recent Ahrefs performance study showed that intelligent caching reduces agent bandwidth consumption by 71% while maintaining data freshness.

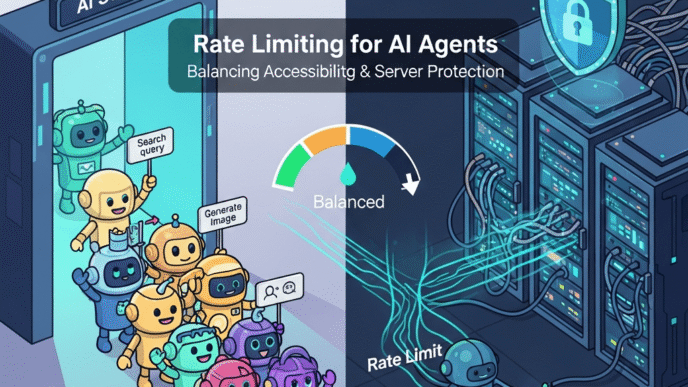

What About Pagination and Rate Limiting for Large Datasets?

Cursor-based pagination beats offset pagination for agent consumption.

Offset pagination (page 1, page 2, page 3) breaks when data changes between requests. Cursor-based pagination (continue from item X) remains stable even as your dataset shifts.

Implement reasonable rate limits but communicate them clearly in response headers (X-RateLimit-Remaining, X-RateLimit-Reset). Let agents plan their requests rather than hitting walls unexpectedly.
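A sketch of a fixed-window limiter that reports its state in the conventional `X-RateLimit-Limit`, `X-RateLimit-Remaining`, and `X-RateLimit-Reset` headers, plus the standard `Retry-After` header on 429 responses. The `X-RateLimit-*` names are a widespread convention rather than a formal standard, and providers disagree on whether `Reset` is an epoch timestamp or seconds remaining; this sketch uses epoch seconds. The class name and parameters are hypothetical.

```python
import math
import time
from typing import Callable


class FixedWindowLimiter:
    """Allow `limit` requests per client in each `window`-second window."""

    def __init__(self, limit: int, window: int,
                 clock: Callable[[], float] = time.time) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so behaviour is testable
        # (client_id, window_start) -> requests used. Old windows are never
        # evicted here; a production limiter would need to handle that.
        self._used: dict[tuple[str, int], int] = {}

    def check(self, client_id: str) -> tuple[int, dict[str, str]]:
        """Return (HTTP status, headers) for one incoming request."""
        now = self.clock()
        window_start = int(now // self.window) * self.window
        reset_at = window_start + self.window  # epoch seconds
        key = (client_id, window_start)
        used = self._used.get(key, 0)
        headers = {"X-RateLimit-Limit": str(self.limit),
                   "X-RateLimit-Reset": str(reset_at)}
        if used >= self.limit:
            headers["X-RateLimit-Remaining"] = "0"
            headers["Retry-After"] = str(math.ceil(reset_at - now))
            return 429, headers
        self._used[key] = used + 1
        headers["X-RateLimit-Remaining"] = str(self.limit - used - 1)
        return 200, headers
```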

For bulk access needs, consider data export endpoints or streaming APIs that let agents efficiently consume large datasets without hammering your standard endpoints.

Metadata and Context for Enhanced Agent Understanding

How Much Metadata Should Accompany Each Content Piece?

More than you think, but structured intelligently.

Every content item should include: unique identifier, creation/modification timestamps, author/source information, canonical URL, content type, status (published/draft), version number, and relationships to other content.

Add domain-specific metadata: for products (SKU, brand, category, price history), for articles (word count, reading time, topics, sentiment), for media (dimensions, file size, encoding, accessibility descriptions).

This metadata doesn’t bloat your responses—it enables smarter agent decisions about whether and how to consume your content.

Should You Include Confidence Scores and Data Provenance?

Yes, especially for factual or data-driven content.

If you’re publishing statistics, include source citations, collection methodology, and confidence intervals. Agents can then make informed decisions about data reliability.

For AI-generated or aggregated content, include provenance information. Agents (and humans) deserve to know content origins.

Pro Tip: “Transparency builds trust. When agents know your data sources and limitations, they can use your content more appropriately and cite you more confidently in their outputs.” — Rand Fishkin, SEO and Marketing Expert

How Do Relationships Between Content Items Enhance Agent Navigation?

Content graphs enable discovery patterns impossible with isolated items.

When your API exposes that Article A references Product B, cites Study C, and relates to Topic D, you’ve created a navigable knowledge structure. Agents can traverse these relationships to build comprehensive understanding.

Implement bidirectional relationships. If Article A links to Product B, Product B should know about Article A’s reference. This enables “what references this item” queries that agents frequently need.
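Rather than maintaining both directions by hand, backlinks can be derived from the forward references each item already stores. A minimal sketch with hypothetical IDs and field names; in practice you would update the index whenever an item is saved rather than rebuilding it from scratch.

```python
from collections import defaultdict

# Forward references as stored on each item (hypothetical IDs).
ITEMS = {
    "article-a": {"references": ["product-b", "study-c"], "topics": ["topic-d"]},
    "article-e": {"references": ["product-b"], "topics": []},
    "product-b": {"references": [], "topics": []},
}


def build_reverse_index(items: dict) -> dict[str, set[str]]:
    """Invert forward links so 'what references X?' is a single lookup."""
    referenced_by: dict[str, set[str]] = defaultdict(set)
    for source_id, item in items.items():
        for target_id in item["references"] + item["topics"]:
            referenced_by[target_id].add(source_id)
    return dict(referenced_by)  # plain dict: lookups don't create empty entries
```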

Performance Optimization for High-Volume Agent Access

What Infrastructure Considerations Support Heavy Agent Traffic?

Agents are predictable but relentless—optimize accordingly.

Implement aggressive caching at multiple layers (application, database, CDN). Most agent queries are reads, not writes, making caching incredibly effective.

Use connection pooling and persistent connections for agents making sequential requests. The overhead of connection establishment adds up quickly at scale.

Consider dedicated API infrastructure separate from your human-facing systems. Agent traffic patterns differ dramatically from human browsing—different scaling strategies apply.

How Should You Monitor and Analyze Agent API Usage?

Track metrics that matter for machine consumption, not human engagement.

Monitor: request volume by endpoint, response times (p50, p95, p99), error rates by status code, bandwidth consumption, cache hit rates, and query complexity (for GraphQL).
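Percentile latencies and per-status error rates are straightforward to compute from raw request logs. A stdlib sketch with hypothetical function names; `statistics.quantiles` uses the "exclusive" interpolation method by default, so its numbers can differ slightly from a monitoring tool that uses another estimator.

```python
import statistics
from collections import Counter


def latency_summary(latencies_ms: list[float]) -> dict[str, float]:
    """p50/p95/p99 from raw response times (needs at least two samples)."""
    cuts = statistics.quantiles(latencies_ms, n=100)  # 99 cut points
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


def error_rates(status_codes: list[int]) -> dict[int, float]:
    """Share of responses per HTTP status code."""
    total = len(status_codes)
    return {code: count / total for code, count in Counter(status_codes).items()}
```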

Segment analytics by agent type. Your own internal agents have different patterns than partner agents or public crawlers. Optimize for your priority use cases.

Set up alerting for anomalies—sudden traffic spikes, error rate increases, or unusual query patterns often indicate problems or abuse.

What About Cost Management for Agent-Driven API Usage?

APIs serving agents can get expensive fast without proper controls.

Implement tiered access with usage-based pricing for high-volume consumers. Free tiers work for experimentation, but commercial agents should pay for commercial access.

Use API gateways that provide built-in analytics, rate limiting, and billing integration. Tools like Kong, Apigee, or AWS API Gateway handle the heavy lifting.

Monitor query complexity for GraphQL APIs. An innocent-looking query can trigger database operations costing thousands of times more than simpler queries. Set complexity budgets and depth limits.
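A sketch of depth and cost limits, assuming the query has already been parsed into nested dicts (leaf fields map to `None`). Real GraphQL servers work on a parsed AST, and the cost model here, which pessimistically treats every nested selection as a 10-item list, is an illustrative assumption rather than any library's algorithm.

```python
# Hypothetical parsed selection set: leaf fields map to None,
# object or list fields map to their own sub-selection.
Selection = dict


def depth(sel: Selection) -> int:
    """Length of the longest field path in the query."""
    return 1 + max((depth(sub) for sub in sel.values() if sub), default=0)


def cost(sel: Selection, list_factor: int = 10) -> int:
    """Each field costs 1; each nested selection is assumed to repeat
    `list_factor` times, so deep list nesting grows cost geometrically."""
    total = 0
    for sub in sel.values():
        total += 1
        if sub:
            total += list_factor * cost(sub, list_factor)
    return total


def enforce_limits(sel: Selection, max_depth: int = 5, max_cost: int = 1000) -> None:
    """Reject a query before execution if it is too deep or too expensive."""
    if depth(sel) > max_depth:
        raise ValueError(f"query depth {depth(sel)} exceeds {max_depth}")
    if cost(sel) > max_cost:
        raise ValueError(f"query cost {cost(sel)} exceeds budget {max_cost}")
```

Under these assumptions, `product { title price }` scores 21 and passes, while `products { reviews { author { name } } }` scores 1,111 and is rejected by the default budget.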

Testing and Validation for Agent-Friendly APIs

How Do You Ensure API Reliability for Autonomous Agents?

Comprehensive automated testing is non-negotiable.

Implement contract testing that validates your API responses match your schemas exactly. Use tools like Pact or Postman to create executable API contracts.

Run continuous integration tests that simulate agent workflows. Can they search your content? Filter by attributes? Fetch related items? Complete multi-step processes?

Test edge cases agents encounter: empty result sets, very large responses, concurrent requests, partial failures. Agents can’t “work around” problems the way humans can.

What Documentation Do Agents (and Their Developers) Need?

Comprehensive, machine-readable, and always current.

OpenAPI (Swagger) specifications for REST APIs. GraphQL’s introspection provides self-documentation, but supplement with usage examples and best practices.

Include sample requests and responses for every endpoint. Show common query patterns. Document error conditions and recovery strategies.

Provide SDKs or client libraries in popular languages when possible. Agents are built in Python, JavaScript, Java, Go—reduce their integration friction.

Should You Provide Sandbox Environments for Agent Testing?

Absolutely, and make them as production-like as possible.

Agent developers need safe spaces to experiment, test edge cases, and validate integrations before hitting your production systems.

Populate sandbox with realistic data at realistic volumes. Testing against three sample products tells you nothing about performance with three million products.

Implement the same rate limits, authentication, and validation rules as production. Surprises during production migration destroy developer trust.

Migration Strategies: Moving to API-First Content

How Do You Transition From Traditional CMS to API-First Architecture?

Gradually, with parallel systems during transition.

Start by selecting a content type with manageable complexity—maybe your blog posts or product catalog. Migrate that content to a headless system while maintaining your legacy CMS.

Build APIs for the migrated content. Update your website to consume those APIs instead of direct CMS rendering. Validate everything works correctly before expanding.

Repeat for additional content types until your legacy CMS serves only as a temporary reference, then sunset it completely.

What Content Needs Restructuring During Migration?

Probably everything, but some more dramatically than others.

Content with mixed presentation and data (HTML blobs full of inline styles and formatting) needs complete decomposition. Extract semantic meaning into structured fields.

Normalize inconsistent data. If product prices are stored as strings, numbers, and formatted currency in different places, pick one approach and convert everything.
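A sketch of that normalization for a legacy price column holding strings, integers, and floats, converting everything to a two-place `Decimal` in USD. The accepted string formats (`$1,299.50`, `1299.5`, `USD 12`) are assumptions; audit what your data actually contains before trusting a regex like this, and route anything it rejects to manual review.

```python
import re
from decimal import ROUND_HALF_UP, Decimal

# Hypothetical legacy formats: optional "$" or "USD", digits with optional
# thousands separators, optional fractional part.
_PRICE = re.compile(r"^\s*(?:USD|\$)?\s*([0-9][0-9,]*(?:\.[0-9]+)?)\s*$", re.IGNORECASE)


def normalize_price(raw: object) -> Decimal:
    """Convert a legacy price (str, int, or float) to a two-place USD Decimal."""
    if isinstance(raw, bool):
        raise ValueError("boolean is not a price")
    if isinstance(raw, (int, float)):
        value = Decimal(str(raw))  # str() avoids binary-float artifacts
    elif isinstance(raw, str):
        match = _PRICE.match(raw)
        if not match:
            raise ValueError(f"unrecognized price: {raw!r}")
        value = Decimal(match.group(1).replace(",", ""))
    else:
        raise ValueError(f"unsupported type: {type(raw).__name__}")
    return value.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
```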

Flatten deeply nested structures that make sense visually but create API nightmares. Reorganize for logical data relationships rather than page layout convenience.

How Long Does Meaningful API-First Transformation Take?

Six months to two years for most organizations, depending on content volume and complexity.

Contentful’s 2024 digital transformation report found the median timeline is 11 months from decision to full API-first operation for mid-size companies.

Don’t let perfection paralyze progress. Launch with 80% coverage, iterate based on real agent usage patterns, and continuously improve.

Integration With Agent-Accessible Architecture

Your API-first content strategy provides the data layer that agent-accessible architecture needs to function effectively.

Think of it this way: architecture defines HOW agents navigate your digital presence. API-first content defines WHAT they access when they get there.

You can build perfect agent-accessible architecture with semantic HTML and clear navigation, but if your content isn’t exposed through clean APIs, agents still can’t accomplish meaningful tasks.

Conversely, world-class APIs delivering pristine content data mean nothing if agents can’t discover them through poor architecture.

The two strategies are inseparable halves of agent enablement. Agent-accessible architecture gets them to your door. API-first content strategy gives them something valuable when they arrive.

Organizations succeeding with AI agents implement both simultaneously. Your content API becomes the primary interface for agent interactions with your digital ecosystem.

When you structure content with API content architecture principles, you’re not just organizing data—you’re enabling the autonomous behaviors that define modern agentic systems.

Common Pitfalls in API-First Content Implementation

Are You Over-Engineering Your Content Models?

Complexity for complexity’s sake kills adoption and slows delivery.

The perfect content model that takes nine months to build is worse than a good model you can launch in six weeks and iterate. Start simple, add complexity only when clear use cases demand it.

Every additional field, relationship, or validation rule adds cognitive load for content creators and maintenance burden for developers. Is the juice worth the squeeze?

Why Does Inconsistent Field Naming Create Agent Chaos?

Because agents can’t guess whether you meant “img_url,” “imageURL,” “image_link,” or “picture_src.”

Establish naming conventions early and enforce them religiously. Use camelCase or snake_case consistently. Pick singular or plural for arrays and stick with it.

Document your conventions and build linting tools that catch violations before they hit production. Consistency is the foundation of machine readability.
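A small linter of the kind described, assuming the team picked snake_case. It walks a sample payload or content model recursively and reports every offending field path, so a CI step can fail the build when the list is non-empty. The function name and path format are hypothetical.

```python
import re

SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(?:_[a-z0-9]+)*$")


def lint_field_names(model: dict, path: str = "") -> list[str]:
    """Recursively report field paths whose names are not snake_case."""
    problems = []
    for name, value in model.items():
        full = f"{path}.{name}" if path else name
        if not SNAKE_CASE.match(name):
            problems.append(full)
        if isinstance(value, dict):
            problems.extend(lint_field_names(value, full))
        elif isinstance(value, list):
            # Check objects inside arrays too, e.g. "images[].alt_text".
            for entry in value:
                if isinstance(entry, dict):
                    problems.extend(lint_field_names(entry, f"{full}[]"))
    return problems
```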

Are You Forgetting Backward Compatibility When Evolving APIs?

Breaking changes break agent trust and functionality.

When you need to modify an API, version it. Keep old versions running until agents migrate. Provide clear deprecation timelines measured in months, not weeks.

Additive changes (new optional fields) are safe. Removal or modification of existing fields requires new API versions.
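That rule is mechanical enough to check automatically. The sketch below compares two versions of a field schema in a hypothetical `{name: {"type": ..., "required": ...}}` format and sorts the differences into breaking and additive changes; a CI job could then refuse a release that contains breaking changes without a version bump.

```python
def classify_changes(old: dict, new: dict) -> dict[str, list[str]]:
    """Sort schema differences into breaking and additive changes."""
    breaking, additive = [], []
    for name, spec in old.items():
        if name not in new:
            breaking.append(f"removed {name}")
        elif new[name]["type"] != spec["type"]:
            breaking.append(f"changed type of {name}")
        elif new[name]["required"] != spec["required"]:
            # Conservative: depending on direction, this can break
            # request senders or response readers.
            breaking.append(f"changed required flag of {name}")
    for name, spec in new.items():
        if name not in old:
            # Only new optional fields count as safe additions.
            (breaking if spec["required"] else additive).append(f"added {name}")
    return {"breaking": breaking, "additive": additive}
```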

Pro Tip: “Treat your API as a product with customers who depend on it. Breaking changes without migration paths is like randomly reformatting your users’ hard drives.” — Kin Lane, API Evangelist

Future-Proofing Your Content APIs

How Will Multimodal AI Change Content API Requirements?

Agents will soon consume text, images, audio, and video simultaneously for holistic understanding.

Your APIs should deliver comprehensive asset information: not just image URLs but dimensions, formats, accessibility descriptions, embedded metadata, and relationships to textual content.

For video content, include transcripts, scene descriptions, timestamps for key moments, and speaker identification. Multimodal agents need this contextual data for proper understanding.

What About Semantic APIs and Knowledge Graphs?

The future isn’t just delivering content—it’s delivering meaning.

Knowledge graph APIs expose entities and their relationships in ways traditional content APIs don’t. Instead of “article containing mentions of Tesla,” you’d deliver “Article references Entity:Tesla(type:Company), Entity:Elon_Musk(type:Person), Entity:Electric_Vehicles(type:ProductCategory).”
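Under the hood, that entity-level description is usually a set of (subject, predicate, object) triples, the data model that RDF stores hold and SPARQL queries. A tiny in-memory sketch with hypothetical IDs and predicate names; a triple store would answer the same questions with a SPARQL graph pattern.

```python
# (subject, predicate, object) triples; IDs and predicates are hypothetical.
TRIPLES = [
    ("article:42", "references", "entity:Tesla"),
    ("article:42", "references", "entity:Elon_Musk"),
    ("article:42", "references", "entity:Electric_Vehicles"),
    ("entity:Tesla", "type", "Company"),
    ("entity:Elon_Musk", "type", "Person"),
    ("entity:Electric_Vehicles", "type", "ProductCategory"),
]


def objects(subject: str, predicate: str) -> list[str]:
    return [o for s, p, o in TRIPLES if s == subject and p == predicate]


def subjects(predicate: str, obj: str) -> list[str]:
    return [s for s, p, o in TRIPLES if p == predicate and o == obj]


def typed_references(item: str) -> dict[str, str]:
    """Each entity the item references, mapped to its declared type."""
    return {e: objects(e, "type")[0] for e in objects(item, "references")}
```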

Technologies like GraphQL are evolving toward these semantic patterns. SPARQL and triple stores enable even deeper graph queries.

Early adopters of semantic APIs are seeing agent comprehension rates improve by 40-60% compared to traditional text-based APIs.

Should You Prepare for Agent-to-Agent Content Negotiation?

Yes—agents will increasingly broker data access for other agents.

Your APIs might serve not just terminal agents completing tasks, but intermediary agents that aggregate, transform, and redistribute your content to specialized downstream agents.

Implement proper attribution and usage tracking. When Agent A queries your API and provides data to Agent B who serves User C, your analytics should capture this chain.

Consider licensing and usage terms that account for agent intermediaries while protecting your intellectual property.

FAQ: API-First Content Strategy

What’s the main difference between headless CMS and traditional CMS for agents?

Traditional CMS mixes content with presentation—your blog post exists as HTML within theme templates. Headless CMS stores pure structured data accessible via APIs. When an AI agent queries a traditional CMS, it must parse HTML to extract meaning. With headless, the agent gets clean JSON or XML with explicit data structures. This reduces errors by 80-90% and enables agents to consume content at 10-100x higher rates since they skip rendering entirely.

How do I convince stakeholders to invest in API-first content transformation?

Focus on ROI from content reuse and future-proofing. Content created once in an API-first system serves websites, apps, voice assistants, chatbots, and AI agents simultaneously—eliminating duplicate effort. Show competitors already serving agents effectively. Quantify time currently wasted reformatting content for different channels. Emphasize that this isn’t optional innovation—it’s infrastructure for the AI-driven web that’s already here.

Can I implement API-first strategy without replacing my entire CMS?

Yes, through gradual migration or hybrid approaches. Many organizations keep WordPress for content creation but export to headless systems like Contentful through synchronization plugins. Others build custom APIs on top of existing databases. Start with high-value content types (products, services) where agent consumption provides immediate benefits. Expand systematically rather than attempting overnight transformation.

What’s the typical cost difference between traditional and API-first content systems?

Upfront costs run 40-70% higher for API-first due to architecture design, headless platform licensing, and migration effort. However, operational costs drop by 30-50% within 18 months through content reuse, elimination of duplicate systems, and reduced maintenance. Organizations with 10,000+ content items typically see positive ROI within 12-24 months. Smaller operations might take 24-36 months but still benefit from future-proofing.

How do I handle content that’s inherently visual and hard to structure?

Decompose visual elements into describable components. For infographics, extract: headline (text), data points (structured numbers), supporting text (paragraphs), visual style (metadata), source image (asset reference). Store the composed visual as an asset while making individual components API-accessible. Agents can then request the specific data they need or reference the complete visual. The key is separating content from presentation—even for visual content.

What authentication method works best for AI agent API access?

OAuth 2.0 client credentials flow for most commercial agents, with API keys for simpler use cases. Avoid authentication methods requiring human intervention (CAPTCHA, multi-factor prompts). Implement long-lived tokens with explicit expiration dates so agents can plan renewals. For high-security scenarios, mutual TLS provides strong authentication without interactive steps. Always provide clear, machine-readable error responses when authentication fails so agents can retry correctly.

Final Thoughts

API-first content strategy isn’t about abandoning humans—it’s about serving everyone better.

When your content exists as clean, structured data accessible through well-designed APIs, you can render it beautifully for humans while simultaneously serving it efficiently to agents. Single source of truth, infinite presentations.

The organizations that will thrive over the next decade aren’t those with the most content—they’re the ones whose content is most consumable by the autonomous systems reshaping how information flows through the digital world.

Start small. Pick one content type. Structure it properly. Build an API. Let an agent consume it successfully. Then expand systematically.

Your content has value. API-first content strategy ensures that value reaches every consumer—human or machine—who needs it. The agents are coming. The question is whether your content will be ready when they arrive.

Citations

Gartner Press Release – Digital Experience Platforms and Composable Architecture

SEMrush Blog – Content Marketing Statistics 2024

Ahrefs Blog – API Best Practices Guide

Statista – Data Quality Statistics

Ahrefs Blog – Website Performance Optimization

Contentful Resources – Digital Transformation Report 2024

Karen McGrane – Content Strategy Insights

Phil Sturgeon – API Development Best Practices