Your site has 247 technical SEO issues right now. You don’t know about most of them. By the time you run your monthly audit, find the problems, create tickets, and get developers to fix them, three weeks have passed and your rankings may already have slipped.

What if AI could find those issues in real-time, understand the fix needed, implement the solution, and verify it worked—all without you lifting a finger?

AI SEO agents make this possible. These autonomous systems monitor sites continuously, detect problems instantly, and execute fixes automatically using machine learning and decision-making algorithms that rival human SEO expertise.

Welcome to the future where your website optimizes itself.

What Are AI SEO Agents and How Do They Work?

Traditional SEO tools find problems and tell you about them. Autonomous SEO systems find problems and fix them automatically.

Think of AI agents as virtual technical SEO specialists working 24/7. They crawl your site, analyze performance, identify issues, determine solutions, implement fixes, and verify results—completing the entire optimization cycle without human intervention.

The technology combines multiple AI capabilities: natural language processing understands content, machine learning predicts impact, computer vision analyzes page layouts, and autonomous decision-making executes changes based on learned best practices.

According to Gartner’s 2024 AI predictions, autonomous AI agents will handle 25% of routine digital marketing tasks by 2026, with technical SEO being among the first disciplines to achieve full automation due to its rule-based nature.

How Autonomous AI Agents Monitor and Optimize Sites

AI agents that optimize websites automatically operate through continuous monitoring, intelligent analysis, and automated execution—creating self-optimizing feedback loops.

Continuous Site Monitoring and Issue Detection

Unlike scheduled crawls that check your site weekly, AI agents monitor the site continuously and pick up changes in near real time.

The systems track:

- Page status codes and server response times

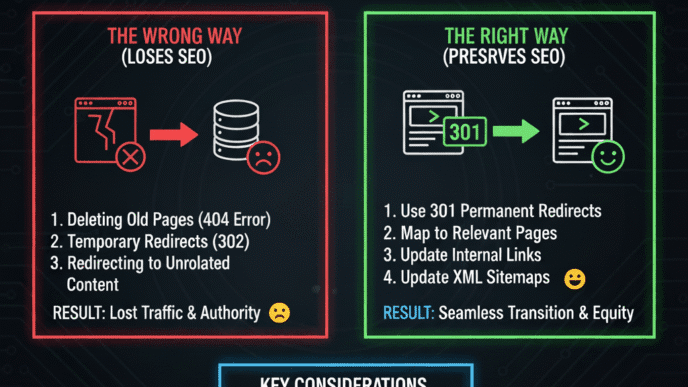

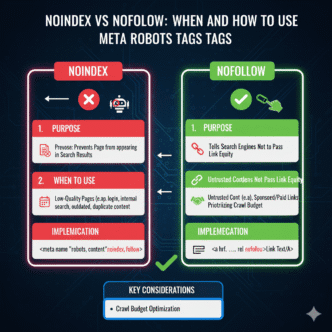

- Robots.txt and meta robots tag modifications

- Canonical tag changes and redirect implementations

- Schema markup validity and structured data errors

- Core Web Vitals and page speed metrics

- Mobile usability and responsive design issues

When changes occur—whether from deployments, content updates, or external factors—AI agents detect them within minutes and assess whether intervention is needed.

A SaaS company deployed code that accidentally added noindex tags to their documentation. Their AI-driven optimization agent detected the change in 8 minutes, recognized it as unintentional based on historical patterns, automatically removed the tags, and alerted the team about the issue and resolution.
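The detection step in a case like this can be approximated by comparing snapshots of a page’s indexability signals before and after a deployment. The sketch below is a minimal illustration using only the Python standard library. The function names (`extract_signals`, `diff_signals`, `newly_noindexed`) and the sample HTML are hypothetical, and a real agent would still need historical context to judge whether a change was intentional.

```python
from html.parser import HTMLParser


class SeoSignalParser(HTMLParser):
    """Collects the meta robots directive and canonical URL from an HTML page."""

    def __init__(self):
        super().__init__()
        self.signals = {"robots": None, "canonical": None}

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            self.signals["robots"] = (attr.get("content") or "").lower()
        elif tag == "link" and (attr.get("rel") or "").lower() == "canonical":
            self.signals["canonical"] = attr.get("href")


def extract_signals(html):
    """Parse one HTML document and return its robots/canonical signals."""
    parser = SeoSignalParser()
    parser.feed(html)
    return parser.signals


def diff_signals(before, after):
    """Return {signal: (old, new)} for every signal that changed."""
    return {k: (before[k], after[k]) for k in before if before[k] != after[k]}


def newly_noindexed(before, after):
    """True when a page that used to be indexable now carries noindex."""
    was = "noindex" in (before["robots"] or "")
    now = "noindex" in (after["robots"] or "")
    return now and not was


# Hypothetical snapshots taken before and after a deployment.
old_html = '<head><link rel="canonical" href="https://example.com/docs/"></head>'
new_html = ('<head><meta name="robots" content="noindex, nofollow">'
            '<link rel="canonical" href="https://example.com/docs/"></head>')

before, after = extract_signals(old_html), extract_signals(new_html)
changes = diff_signals(before, after)
alert = newly_noindexed(before, after)
```

Here `changes` shows the robots directive going from absent to `noindex, nofollow`, and `alert` is true, which is the condition an agent would escalate or revert.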

Intelligent Decision-Making and Root Cause Analysis

Finding problems is easy. Understanding which fixes to implement requires intelligence.

AI agents use machine learning models trained on millions of technical SEO scenarios to:

Diagnose root causes – A 500-error spike might stem from server issues, database problems, or code bugs. AI analyzes logs, error patterns, and deployment history to identify the actual cause.

Predict fix impact – Before implementing changes, AI models predict likely outcomes based on similar situations across thousands of sites. This prevents fixes that solve one problem while creating others.

Prioritize by business impact – Not all technical issues deserve immediate attention. AI evaluates traffic data, conversion rates, and revenue impact to fix high-value problems first.

An e-commerce site’s AI agent detected crawl budget waste on 4,000 parameterized URLs generating zero traffic. Instead of immediately blocking them (which could cause issues), the agent analyzed traffic patterns for 48 hours, confirmed zero value, then automatically added targeted robots.txt rules and verified Google stopped wasting crawls on those URLs.
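One way to make prioritization by business impact concrete is a revenue-at-risk score. The formula, the 0 to 1 severity scale, and the field names below are illustrative assumptions, not a method taken from any specific platform.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    url: str
    kind: str
    severity: float              # 0..1, assumed scale for how badly the page is hurt
    monthly_sessions: int        # organic sessions to the affected page
    conversion_rate: float       # fraction of sessions that convert
    value_per_conversion: float  # revenue per conversion


def revenue_at_risk(issue):
    """Estimated monthly revenue exposed to the issue (illustrative formula)."""
    return (issue.severity * issue.monthly_sessions
            * issue.conversion_rate * issue.value_per_conversion)


def prioritize(issues):
    """Sort issues so the largest revenue exposure comes first."""
    return sorted(issues, key=revenue_at_risk, reverse=True)


# Hypothetical issue list.
issues = [
    Issue("/blog/old-post", "missing alt text", 0.1, 300, 0.01, 50.0),
    Issue("/pricing", "accidental noindex", 1.0, 12000, 0.03, 200.0),
    Issue("/docs/setup", "broken canonical", 0.6, 4000, 0.02, 200.0),
]
queue = prioritize(issues)
```

With these sample numbers the accidental noindex on `/pricing` lands at the front of the queue and the missing alt text on a low-traffic post lands at the back.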

Automated Implementation and Verification

The most revolutionary aspect: autonomous site auditing doesn’t stop at recommendations—AI agents implement fixes directly.

Depending on configuration and permissions, agents can:

Modify technical elements – Update canonical tags, generate and deploy schema markup, fix broken internal links, optimize image alt text

Adjust infrastructure – Configure CDN caching rules, implement redirect mappings, modify server headers, adjust firewall settings

Update content – Rewrite meta descriptions hitting character limits, fix HTML validation errors, correct heading hierarchy issues

Deploy code changes – Through integrations with version control and deployment systems, agents can commit and deploy approved fix types automatically

After implementation, AI verifies fixes worked correctly. If a change causes unexpected problems, the agent automatically rolls back and tries alternative solutions.
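The implement, verify, and roll back cycle can be sketched as a small loop. This is a simplified model under stated assumptions: the page is a flat dictionary standing in for a CMS record, and `apply_with_rollback` and the sample fixes are hypothetical names rather than any vendor’s API.

```python
def apply_with_rollback(state, candidate_fixes, verify):
    """Try fixes in order and keep the first one that passes verification.

    state: flat dict describing the page (stand-in for a real CMS record)
    candidate_fixes: list of (name, function); each function returns a new state
    verify: function(state) -> bool
    Returns (final_state, name_of_applied_fix_or_None).
    """
    for name, fix in candidate_fixes:
        candidate = fix(dict(state))  # work on a copy so the live state is untouched
        if verify(candidate):
            return candidate, name    # keep the first fix that verifies
        # A failed candidate is simply discarded, which acts as the rollback.
    return state, None


page = {"url": "https://example.com/a", "canonical": "https://example.com/deleted"}


def point_to_http(s):
    s["canonical"] = "http://example.com/a"
    return s


def self_reference(s):
    s["canonical"] = s["url"]
    return s


def canonical_ok(s):
    return s["canonical"] == s["url"]


final, applied = apply_with_rollback(
    page,
    [("point_to_http", point_to_http), ("self_reference", self_reference)],
    canonical_ok,
)
```

The first fix fails verification and is dropped, the second passes, and the original `page` record is never modified in place.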

According to Search Engine Land’s 2024 automation study, early adopters of autonomous SEO agents report 89% of routine technical fixes now happen automatically, reducing SEO team workload by 60-70% while increasing fix speed by 40x.

Current AI Agent Platforms for Technical SEO

Several platforms now offer varying levels of autonomous optimization capabilities, though full autonomy remains an emerging technology.

BrightEdge Autopilot: Enterprise AI Agent

BrightEdge Autopilot uses machine learning to automatically optimize on-page elements, technical configurations, and content recommendations at enterprise scale.

The platform monitors thousands of pages continuously, identifies optimization opportunities, and can auto-implement approved fix types through CMS integrations.

Best for: Enterprise sites (50,000+ pages) with complex technical requirements.

Custom pricing (typically $3,000+/month). The agent learns from your site’s performance patterns and applies increasingly sophisticated optimizations over time.

Key autonomous capabilities include meta tag optimization, schema markup deployment, internal linking improvements, and content gap identification with automated brief generation.

Market Brew: AI Search Engine Simulation

Market Brew creates AI models that simulate how search engines evaluate websites. The platform identifies exactly which technical factors limit rankings and can automatically implement fixes through API integrations.

Best for: Technical SEO teams wanting deep algorithmic insights driving automation.

Pricing from $1,200/month. The AI builds custom search engine models for your specific keywords and competitors, then optimizes against those models.

While not fully autonomous, Market Brew’s prescriptive recommendations are specific enough that many can be automated through custom scripts.

OnCrawl Intelligence: Log Analysis + Automation

OnCrawl combines server log analysis with crawl data, using AI to detect issues affecting actual Googlebot behavior and automatically implement certain fixes.

The platform identifies crawl budget waste, indexation problems, and performance issues, then can auto-deploy solutions through integrations with CDNs, CMSs, and hosting platforms.

Best for: Large sites (25,000+ pages) where crawl optimization drives significant ROI.

Custom enterprise pricing. The AI learns normal crawl patterns and automatically alerts (or fixes) when behavior deviates from baseline.

ContentKing Automations: Real-Time Monitoring + Fixes

ContentKing monitors sites continuously and offers automation rules that implement fixes when specific conditions trigger.

Set rules like “if canonical tags change unexpectedly, revert to previous version” or “if schema markup becomes invalid, regenerate from content.”

Best for: Teams wanting selective automation with human oversight.

Plans from $199/month for 10,000 URLs. While not fully autonomous, the rule-based automation handles common scenarios without manual intervention.

Custom AI Agent Development

Technical teams with development resources can build custom AI automation agents using:

LangChain + GPT-4: Framework for creating autonomous agents that reason through SEO problems and execute solutions

AutoGen: Microsoft’s multi-agent framework allowing specialized agents to collaborate on complex optimization tasks

Claude API + Computer Use: Anthropic’s system enabling AI to interact directly with tools and platforms

Best for: Advanced technical SEO teams wanting fully customized autonomous optimization tailored to specific needs.

Costs vary based on API usage and development time. Custom agents offer maximum flexibility and can integrate with any platform or tool.

Real-World Applications of Autonomous SEO Agents

Self-optimizing websites aren’t science fiction—they’re handling complex optimization tasks right now.

Automated Schema Markup Management

AI agents analyze page content, determine appropriate schema types, generate valid JSON-LD markup, and deploy it automatically as content publishes or updates.

When product prices change, agents update Product schema immediately. When articles get updated, agents modify dateModified properties and regenerate schema reflecting new content.

A media company publishes 200+ articles daily. Their AI agent automatically generates Article schema for each piece, extracts author information, publication dates, and featured images, then validates and deploys markup—all within 90 seconds of publication.

Manual implementation would require a full-time employee just for schema management. The AI agent handles this autonomously while maintaining 97% schema validation accuracy.
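To illustrate the generation step, here is a minimal sketch that builds schema.org Article markup as JSON-LD from fields an agent might extract from a published post. The function names and the short list of fields checked are assumptions made for this example. The check is a simple completeness test, not a substitute for a full structured data validator.

```python
import json


def article_jsonld(headline, author, published, modified, image_url):
    """Build schema.org Article markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,
        "dateModified": modified,
        "image": [image_url],
    }
    return json.dumps(data, indent=2)


def missing_fields(jsonld, expected=("headline", "author", "datePublished", "image")):
    """List expected keys that are absent or empty (assumed checklist)."""
    data = json.loads(jsonld)
    return [key for key in expected if not data.get(key)]


# Hypothetical article data.
markup = article_jsonld(
    headline="How Autonomous Agents Audit Sites",
    author="Jane Doe",
    published="2024-05-01T08:00:00+00:00",
    modified="2024-05-03T10:30:00+00:00",
    image_url="https://example.com/images/agents.jpg",
)
script_tag = f'<script type="application/ld+json">\n{markup}\n</script>'
```

When an article is updated, regenerating the markup with a new `modified` value covers the `dateModified` refresh described above.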

Dynamic Crawl Budget Optimization

AI agents analyze server logs to identify how Googlebot spends crawl budget, then automatically optimize to prioritize high-value pages.

The system detects:

- Low-value URLs consuming excessive crawls

- High-value pages being under-crawled

- Crawl waste on duplicate or parameterized URLs

- Optimal crawl frequency for different content types

Then it automatically implements fixes through robots.txt updates, canonical tag adjustments, or sitemap modifications.

An e-commerce site with 50,000 product pages used [AI for technical SEO](https://aiseojournal.net/ai-for-technical-seo/) to autonomously manage crawl budget. The agent identified that 35% of crawls went to out-of-stock products with zero traffic potential. It automatically added robots.txt rules that blocked crawling of those URLs, freeing that crawl budget for new products and best-sellers. Result: 41% more high-value pages crawled daily and a 28% increase in indexed products within 6 weeks.
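The log analysis behind crawl budget decisions can be sketched with a few lines of Python. This example assumes access logs in the common combined format and identifies Googlebot by user-agent string only; since user agents can be spoofed, a production system would also verify the crawler, for example with a reverse DNS lookup. The helper names and sample log lines are hypothetical.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Matches the request, status, and trailing user agent of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)


def googlebot_crawl_profile(lines):
    """Count Googlebot requests per top-level section and the share with query strings."""
    sections, parameterized, total = Counter(), 0, 0
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if not match or "Googlebot" not in match.group("agent"):
            continue
        total += 1
        parts = urlsplit(match.group("path"))
        if parts.query:
            parameterized += 1
        segment = parts.path.strip("/").split("/")[0] or "(root)"
        sections[segment] += 1
    share = parameterized / total if total else 0.0
    return sections, share


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def log_line(path, agent):
    """Build a sample combined-format log line for the demo."""
    return (f'66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] '
            f'"GET {path} HTTP/1.1" 200 5120 "-" "{agent}"')


sample_log = [
    log_line("/products/shoe?color=red", GOOGLEBOT),
    log_line("/products/shoe?color=blue", GOOGLEBOT),
    log_line("/blog/new-arrivals", GOOGLEBOT),
    log_line("/products/shoe", BROWSER),
]
sections, parameterized_share = googlebot_crawl_profile(sample_log)
```

In this sample, two of the three Googlebot requests hit parameterized product URLs, the kind of pattern an agent would weigh against traffic data before proposing robots.txt or canonical changes.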

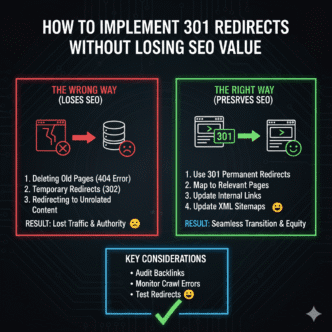

Intelligent Redirect Management

Sites accumulate broken links over time as content moves, gets deleted, or restructures. AI agents automatically create appropriate redirects:

Content moved: Agent detects the page at its new URL and creates a 301 redirect from the old URL

Content consolidated: Multiple old pages merged into one; agent redirects all previous URLs to the consolidated version

Content deleted: No equivalent exists; agent redirects to the most relevant category or related content

The AI analyzes content similarity using NLP to determine best redirect targets—not just defaulting everything to homepage.

A publishing site with 10 years of content had accumulated 2,400+ broken internal links. Their AI agent analyzed each 404, identified appropriate redirect targets using semantic analysis, implemented 301 redirects, and updated internal links to point directly to new URLs. Total time: 4 hours autonomous operation versus estimated 80+ hours manual work.
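Choosing a redirect target by content similarity can be illustrated with a deliberately simple stand-in. The sketch below uses Jaccard overlap of word sets instead of the semantic NLP analysis described above, and the function names, the `min_score` cutoff, and the sample pages are all assumptions for the example.

```python
import re


def tokens(text):
    """Lowercased set of alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Word-set overlap between two texts, from 0.0 to 1.0."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def pick_redirect_target(dead_page_text, live_pages, fallback, min_score=0.2):
    """Return (target_url, score); use the fallback when nothing is similar enough."""
    best_url, best_score = fallback, 0.0
    for url, text in live_pages.items():
        score = jaccard(dead_page_text, text)
        if score > best_score:
            best_url, best_score = url, score
    if best_score < min_score:
        return fallback, best_score
    return best_url, best_score


# Hypothetical live pages and a category fallback.
live_pages = {
    "/blog/trail-running-shoes-guide": "trail running shoes guide for new runners",
    "/blog/cast-iron-care": "how to season and care for cast iron pans",
}
target, score = pick_redirect_target(
    "guide to trail running shoes for beginners", live_pages, fallback="/blog/"
)
unrelated, _ = pick_redirect_target("vintage camera repair", live_pages, fallback="/blog/")
```

The dead running-shoes page maps to the surviving guide, while the unrelated page falls back to the category URL rather than a poor match or the homepage.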

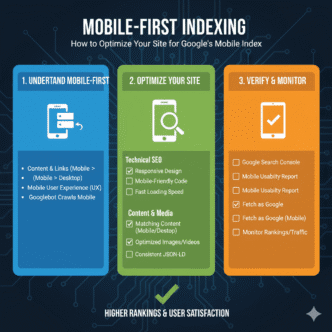

Automated Performance Optimization

Core Web Vitals are among the signals Google’s ranking systems use. AI agents continuously monitor performance metrics and implement optimizations automatically:

Image optimization: Agent detects oversized images, automatically compresses them, converts to next-gen formats (WebP, AVIF), and implements lazy loading

Code optimization: Minifies CSS/JavaScript, removes unused code, defers non-critical resources, implements critical CSS inline

Caching strategy: Analyzes cache hit rates and automatically adjusts cache headers for optimal performance

CDN configuration: Routes traffic optimally based on geographic patterns and load distribution

A travel blog’s AI agent detected their featured images averaging 2.1MB—killing mobile performance. The agent automatically compressed all images to under 200KB, converted to WebP format, and implemented lazy loading. Core Web Vitals scores improved from “needs improvement” to “good” across 94% of pages within 72 hours—zero developer time required.

Pro Tip: According to Google’s Core Web Vitals documentation updated in 2024, sites passing all Core Web Vitals thresholds show 24% lower bounce rates and achieve measurably better rankings for competitive keywords compared to identical content on slower sites.
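For reference, the “good” and “needs improvement” ratings mentioned above come from fixed thresholds that Google publishes for each metric, assessed at the 75th percentile of page loads. A minimal classifier looks like this; the dictionary keys and function names are chosen for this example.

```python
# Published thresholds: (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "lcp_ms": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "inp_ms": (200, 500),    # Interaction to Next Paint, milliseconds
    "cls": (0.1, 0.25),      # Cumulative Layout Shift, unitless
}


def rate(metric, value):
    """Classify one metric value as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def page_passes(metrics):
    """A page passes only when every measured metric is rated good."""
    return all(rate(name, value) == "good" for name, value in metrics.items())


# Hypothetical 75th-percentile measurements before and after image optimization.
before_fix = {"lcp_ms": 3100, "inp_ms": 180, "cls": 0.05}
after_fix = {"lcp_ms": 2100, "inp_ms": 150, "cls": 0.05}
```

With these sample values, `before_fix` fails on LCP alone and `after_fix` passes, which mirrors the kind of shift the travel blog example describes.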

Advanced Autonomous Optimization Strategies

Beyond basic technical fixes, sophisticated AI agents tackle complex strategic optimizations.

Competitive Technical Analysis

AI agents don’t just optimize your site in isolation—they analyze competitor technical implementations and automatically match or exceed their optimizations.

The system monitors:

- Schema markup types competitors use for shared keywords

- Page speed and Core Web Vitals of top-ranking competitors

- Internal linking patterns on competitor sites

- Technical SEO factors correlating with competitor rankings

Then it automatically implements superior versions on your site.

A B2B software company’s AI agent detected that all competitors ranking for their target keyword used FAQ schema. The agent automatically analyzed their FAQ content, generated FAQ schema, deployed it, and within 6 weeks the company earned their first “People Also Ask” feature—matching competitor SERP presence.

Predictive Issue Prevention

The most advanced AI-driven optimization doesn’t wait for problems to occur—it predicts and prevents them.

Machine learning models identify patterns leading to technical issues:

Crawl rate declining: Predicts potential indexation problems before they manifest

Page speed degrading gradually: Alerts to infrastructure issues before they hit critical thresholds

Schema error patterns: Identifies template problems likely to cause widespread markup failures

Seasonal traffic patterns: Predicts when performance optimization becomes critical based on historical load patterns

An online retailer’s AI agent noticed server response times gradually increasing by 50ms weekly over 8 weeks. The agent predicted this trend would reach critical failure thresholds within 3 weeks, automatically filed infrastructure tickets with detailed diagnostics, and temporarily implemented aggressive caching to buy time while engineers investigated root causes.

The proactive intervention prevented what would have been a site-wide performance crisis during their peak shopping season.
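The forecasting in this example amounts to fitting a trend and extrapolating it to a threshold. Below is a minimal sketch using an ordinary least-squares line over weekly samples. The 400 ms starting point and the 950 ms failure threshold are hypothetical numbers chosen so that a 50 ms weekly increase crosses the threshold three weeks out.

```python
def fit_line(ys):
    """Ordinary least-squares slope and intercept for evenly spaced samples."""
    n = len(ys)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def weeks_until(ys, threshold):
    """Weeks after the latest sample until the fitted trend reaches the threshold.

    Returns None when there are too few samples or the trend is flat or improving.
    """
    if len(ys) < 2:
        return None
    slope, intercept = fit_line(ys)
    if slope <= 0:
        return None
    crossing_x = (threshold - intercept) / slope
    return max(0.0, crossing_x - (len(ys) - 1))


# Weekly median response times in ms: a baseline plus 8 weeks of +50 ms growth.
response_ms = [400, 450, 500, 550, 600, 650, 700, 750, 800]
weeks_left = weeks_until(response_ms, threshold=950)
```

Real response-time data is noisier than this, so a production agent would likely add confidence bounds before filing tickets automatically.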

Multi-Language and International Automation

Global sites with dozens of languages and regional variations face exponentially complex technical SEO. AI agents automate international optimization at scale.

Hreflang management: Agent ensures all language/region variations properly reference each other with correct codes (ISO 639-1 for language, ISO 3166-1 alpha-2 for region)

Localized schema: Automatically adapts schema markup for regional differences (address formats, phone numbers, currencies, review systems)

Regional technical optimization: Applies region-specific optimizations (CDN configuration, server locations, local search features)

A multinational e-commerce company operating in 28 countries with 12 languages used autonomous agents to manage 340,000 international product pages. The AI maintained proper hreflang implementation, generated localized schema for each region, and optimized crawl budgets per-country based on regional traffic patterns—tasks that would require a dedicated international SEO team working full-time.
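A common hreflang failure is a missing return link: page A lists page B as an alternate, but B does not list A back. The check below is a minimal sketch that assumes the annotations have already been crawled into a dictionary; the data shape and function name are hypothetical.

```python
def missing_return_links(hreflang_map):
    """Find one-way hreflang references.

    hreflang_map: {page_url: {lang_code: alternate_url}}
    Returns a sorted list of (page, alternate) pairs where the page references
    the alternate but the alternate does not reference the page back.
    """
    problems = []
    for page, alternates in hreflang_map.items():
        for alt_url in alternates.values():
            if alt_url == page:
                continue  # a self-reference needs no return link
            back_links = hreflang_map.get(alt_url, {}).values()
            if page not in back_links:
                problems.append((page, alt_url))
    return sorted(problems)


# Hypothetical crawl result: the German page forgets to reference the English one.
site = {
    "https://example.com/en/": {
        "en": "https://example.com/en/",
        "de": "https://example.com/de/",
    },
    "https://example.com/de/": {
        "de": "https://example.com/de/",
    },
}
one_way = missing_return_links(site)
```

Each pair in `one_way` is a candidate for an automatic fix, since adding the missing annotation to the alternate page is low risk.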

Content Quality Monitoring and Enhancement

While content creation remains largely human-driven, AI agents autonomously optimize published content for technical quality:

Heading hierarchy: Detects and fixes improper heading structures (H3 before H2, missing H1, multiple H1 tags)

Readability optimization: Adjusts paragraph length, sentence complexity, and formatting when metrics indicate poor user engagement

Internal linking: Automatically adds contextually relevant internal links as new content publishes, maintaining optimal site architecture

Metadata optimization: Regenerates meta descriptions hitting character limits, fixes duplicate titles, ensures unique descriptions across pages

A SaaS blog publishes 40+ articles monthly. Their AI agent reviews each post after publication, fixes heading hierarchy issues, adds 3-5 relevant internal links based on semantic analysis, and optimizes meta descriptions for click-through rates. Content quality scores improved 31% with zero editor time investment.
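The heading checks listed above (missing H1, multiple H1 tags, skipped levels such as an H3 directly after an H1) are straightforward to detect. Here is a minimal sketch using the standard library HTML parser; the class and function names are chosen for this example, and it only reports issues rather than rewriting the page.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records heading levels (1-6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html):
    """Return a list of human-readable heading hierarchy problems."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    issues = []
    h1_count = levels.count(1)
    if h1_count == 0:
        issues.append("missing h1")
    elif h1_count > 1:
        issues.append("multiple h1")
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            issues.append(f"h{cur} follows h{prev} (skipped level)")
    return issues


# Hypothetical post body with two problems.
report = heading_issues("<h1>Guide</h1><h3>Setup</h3><h1>Another title</h1>")
```

A clean document such as an H1 followed by H2 and H3 sections returns an empty list.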

Challenges and Limitations of Current AI Agents

The future of automated SEO is promising, but current limitations need to be understood before full autonomous deployment.

Trust and Verification Issues

The biggest barrier to AI agent adoption: trusting machines to modify live websites without human approval.

Current solutions implement tiered permission systems:

- Auto-execute: Low-risk fixes (alt text, meta descriptions, schema markup)

- Suggest + approve: Medium-risk changes requiring human confirmation

- Alert only: High-risk modifications requiring manual implementation

Most organizations start with suggestion mode, gradually increasing autonomous permissions as they gain confidence in AI decision-making.

Over-Optimization and Algorithmic Penalties

AI agents optimizing too aggressively risk triggering Google’s manipulation detection. An agent that adds internal links to every keyword mention creates unnatural patterns that harm more than help.

Quality AI systems include over-optimization detection and adhere to conservative best practices. However, less sophisticated agents might optimize technically correct but algorithmically problematic patterns.

Pro Tip: Configure AI agents with maximum thresholds—maximum internal links per page, maximum keyword density in meta tags, maximum schema markup complexity. These guardrails prevent optimization excesses even if AI logic suggests more aggressive tactics.
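Guardrails like these can be expressed as a small configuration plus a check that runs before any change is applied. The limits, field names, and the single-word keyword density measure below are hypothetical values for illustration and should be tuned per site.

```python
import re

GUARDRAILS = {  # hypothetical limits
    "max_internal_links_per_page": 100,
    "max_meta_keyword_density": 0.15,
}


def keyword_density(text, keyword):
    """Share of words in the text equal to a single-word keyword."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return words.count(keyword.lower()) / len(words)


def check_proposal(proposal, limits=GUARDRAILS):
    """Return a list of guardrail violations; an empty list means safe to apply."""
    violations = []
    total_links = proposal["existing_internal_links"] + len(proposal["links_to_add"])
    if total_links > limits["max_internal_links_per_page"]:
        violations.append("internal links")
    density = keyword_density(proposal["meta_description"], proposal["keyword"])
    if density > limits["max_meta_keyword_density"]:
        violations.append("meta keyword density")
    return violations


aggressive = {
    "existing_internal_links": 98,
    "links_to_add": ["/a", "/b", "/c", "/d", "/e"],
    "meta_description": "Buy shoes online. Shoes for running, shoes for hiking, shoes for all.",
    "keyword": "shoes",
}
conservative = {
    "existing_internal_links": 20,
    "links_to_add": ["/a", "/b", "/c"],
    "meta_description": "Lightweight trail shoes built for long distances and rough terrain.",
    "keyword": "shoes",
}
```

Running `check_proposal` on the aggressive proposal flags both limits, while the conservative one passes, so the agent applies only the second.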

Integration and Technical Complexity

Fully autonomous agents require deep integrations with CMSs, hosting platforms, CDNs, analytics tools, and deployment systems. Setting up these integrations demands significant technical expertise.

Smaller organizations may lack resources for complex integrations, limiting them to semi-autonomous tools requiring more manual oversight.

Cost and ROI Considerations

Enterprise AI agent platforms cost $1,000-5,000+/month. For small sites where technical SEO requires minimal ongoing work, the cost outweighs automation benefits.

ROI calculation: If your technical SEO team spends 20+ hours monthly on routine optimization tasks, autonomous agents provide clear value. For sites needing occasional technical attention, simpler monitoring tools suffice.
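As a worked version of that calculation, the sketch below compares the labor cost of routine work against a platform fee. The $75 hourly rate and $1,000 monthly fee are hypothetical inputs, and it assumes the agent takes over all of the routine hours.

```python
def monthly_net_benefit(hours_saved, hourly_cost, platform_cost):
    """Labor cost avoided minus the platform fee, per month."""
    return hours_saved * hourly_cost - platform_cost


def break_even_hours(hourly_cost, platform_cost):
    """Routine hours per month at which the platform pays for itself."""
    return platform_cost / hourly_cost


net = monthly_net_benefit(hours_saved=20, hourly_cost=75, platform_cost=1000)
threshold_hours = break_even_hours(hourly_cost=75, platform_cost=1000)
```

Under these assumptions, 20 hours of routine work leaves a net benefit of $500 per month, and the break-even point sits at roughly 13 hours.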

AI Hallucination and Decision Errors

Large language models occasionally “hallucinate” incorrect information or make flawed decisions. An AI agent confidently implementing a wrong fix causes real damage.

Quality systems use multiple verification layers and decision validation before executing changes. However, the risk exists—requiring monitoring even of autonomous systems.

According to MIT Technology Review’s 2024 AI reliability study, autonomous AI agents achieve 92-96% decision accuracy on routine tasks but still make critical errors 4-8% of the time, necessitating human oversight particularly for high-stakes decisions.

Implementing AI SEO Agents: Strategic Approach

Transitioning to autonomous SEO requires planning, not just purchasing software.

Step 1: Identify Automatable Tasks

Analyze your current technical SEO workflow. Which tasks are:

- Routine and repetitive

- Rule-based with clear decision criteria

- Time-consuming but low strategic value

- Error-prone when done manually

These are prime automation candidates. Start with these rather than attempting full autonomous optimization immediately.

Common starting points: schema markup generation, broken link fixing, image alt text optimization, meta description generation for thin pages.

Step 2: Establish Performance Baselines

Before implementing AI agents, document current performance:

- Technical audit scores and issue counts

- Time spent on technical SEO tasks weekly

- Average time to detect and fix issues

- Key metrics (organic traffic, crawl efficiency, indexation rates)

Baseline data proves AI agent ROI and helps identify what’s working versus what needs adjustment.

Step 3: Start with Monitoring-Only Mode

Configure AI agents to detect issues and suggest fixes without implementing them autonomously. This builds trust and lets you verify decision quality.

Review AI suggestions for 2-4 weeks. When accuracy consistently meets expectations (95%+ correct suggestions), grant limited autonomous permissions for low-risk fixes.

A financial services company ran their AI agent in suggestion-only mode for 6 weeks. The agent identified 847 technical issues with suggested fixes. Human review found 94% of suggestions were correct, 4% were suboptimal but harmless, and 2% would have caused problems. After this validation period, they enabled auto-execution only for the fix types whose suggestions had been consistently correct.
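A review period like this produces labeled data that can decide, per fix type, what is safe to automate. The sketch below is one possible gating rule: it requires a minimum sample size, a minimum share of correct suggestions, and zero harmful ones. The thresholds, verdict labels, and sample counts are assumptions for the example.

```python
from collections import defaultdict


def fix_types_to_automate(reviews, min_accuracy=0.95, min_samples=20):
    """Pick fix types that earned autonomy during human review.

    reviews: iterable of (fix_type, verdict) with verdict in
             {"correct", "suboptimal", "harmful"}
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    harmful = defaultdict(int)
    for fix_type, verdict in reviews:
        totals[fix_type] += 1
        if verdict == "correct":
            correct[fix_type] += 1
        elif verdict == "harmful":
            harmful[fix_type] += 1
    approved = []
    for fix_type, count in totals.items():
        accuracy = correct[fix_type] / count
        if count >= min_samples and harmful[fix_type] == 0 and accuracy >= min_accuracy:
            approved.append(fix_type)
    return sorted(approved)


# Hypothetical review log from a suggestion-only period.
reviews = (
    [("alt_text", "correct")] * 40
    + [("redirect", "correct")] * 27
    + [("redirect", "harmful")] * 3
    + [("schema", "correct")] * 5
)
approved = fix_types_to_automate(reviews)
```

Only `alt_text` qualifies here: redirects produced harmful suggestions, and schema fixes have too few reviewed samples to trust yet.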

Step 4: Implement Tiered Permission Levels

Create automation tiers based on risk:

Tier 1 – Full autonomy: Alt text generation, schema markup, duplicate meta description fixes, broken internal link repairs

Tier 2 – Auto-implement with notification: Canonical tag updates, redirect creation, robots.txt modifications, image optimization

Tier 3 – Suggest and await approval: URL structure changes, significant content modifications, infrastructure configuration

Tier 4 – Alert only: Homepage changes, high-traffic page modifications, anything affecting revenue-critical pages

This graduated approach maximizes automation benefits while maintaining control over high-risk changes.
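The four tiers map naturally onto a lookup table with a conservative default. In the sketch below, the fix type identifiers and the `protected_urls` set are hypothetical names; the tier assignments follow the list above, and unknown fix types default to requiring approval.

```python
from enum import Enum


class Action(Enum):
    AUTO = "apply automatically"
    AUTO_NOTIFY = "apply and notify"
    NEEDS_APPROVAL = "suggest and await approval"
    ALERT_ONLY = "alert only"


TIER_BY_FIX_TYPE = {
    # Tier 1
    "alt_text": Action.AUTO,
    "schema_markup": Action.AUTO,
    "duplicate_meta_description": Action.AUTO,
    "broken_internal_link": Action.AUTO,
    # Tier 2
    "canonical_update": Action.AUTO_NOTIFY,
    "redirect_creation": Action.AUTO_NOTIFY,
    "robots_txt": Action.AUTO_NOTIFY,
    "image_optimization": Action.AUTO_NOTIFY,
    # Tier 3
    "url_structure": Action.NEEDS_APPROVAL,
    "content_rewrite": Action.NEEDS_APPROVAL,
    "infrastructure_config": Action.NEEDS_APPROVAL,
}


def route(fix_type, url, protected_urls):
    """Decide how a proposed fix is handled."""
    if url in protected_urls:
        return Action.ALERT_ONLY  # Tier 4: revenue-critical pages are never auto-edited
    return TIER_BY_FIX_TYPE.get(fix_type, Action.NEEDS_APPROVAL)


protected = {"/", "/pricing", "/checkout"}
decision_blog = route("alt_text", "/blog/post-1", protected)
decision_pricing = route("alt_text", "/pricing", protected)
decision_unknown = route("new_fix_type", "/blog/post-1", protected)
```

Checking the protected list first means even a Tier 1 fix on a revenue-critical page is downgraded to an alert.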

Step 5: Monitor and Adjust

Autonomous systems require oversight. Establish monitoring protocols:

Daily: Review high-priority fixes implemented autonomously

Weekly: Analyze aggregate fix types and success rates

Monthly: Measure performance improvements and ROI

Quarterly: Adjust permission levels and automation scope based on results

Track unexpected outcomes. When autonomous fixes cause problems, analyze why and adjust decision parameters to prevent similar issues.

The Future: Fully Autonomous Technical SEO

Current AI agents handle specific tasks autonomously. The future brings comprehensive [AI-powered technical SEO](https://aiseojournal.net/ai-for-technical-seo/) systems managing entire optimization strategies independently.

Predictive Algorithm Adaptation

Next-generation agents will detect Google algorithm updates in real-time by monitoring SERP changes and ranking volatility, then automatically adjust optimization strategies to align with new algorithmic priorities.

When Google emphasizes new ranking factors, AI agents will immediately begin optimizing for those factors across your entire site without waiting for human analysis and strategy development.

Cross-Platform Optimization

Future agents will optimize beyond your website—automatically managing Google Business Profiles, social media technical elements, app store optimization, and any digital presence affecting search visibility.

A single AI agent will maintain consistent technical optimization across all platforms where your brand appears online.

Collaborative AI Agent Networks

Multiple specialized AI agents will work together, each handling specific optimization domains:

Content agent: Monitors and optimizes content quality, freshness, and comprehensiveness

Technical agent: Handles infrastructure, crawling, indexing, and performance

Link agent: Identifies link opportunities and manages internal linking architecture

Conversion agent: Optimizes technical elements affecting user experience and conversions

These agents will communicate, coordinate strategies, and make collective decisions that balance competing priorities across different optimization goals.

Self-Learning and Continuous Improvement

Current AI agents apply learned best practices. Future systems will conduct autonomous experiments, test optimization hypotheses, and continuously improve decision-making based on outcomes specific to your site.

The AI will automatically A/B test technical changes, measure results, and implement winning variations—creating continuously evolving optimization that improves over time without human-directed strategy updates.

According to Forrester’s 2025 marketing predictions, autonomous AI agents will manage 40% of routine SEO work by 2027, with early adopters achieving 3-5x competitive advantages through faster optimization cycles and 24/7 improvement processes impossible for human teams.

FAQ: AI SEO Agents

What’s the difference between SEO automation tools and true AI agents?

Traditional SEO automation tools perform predefined tasks when triggered, such as automatically submitting sitemaps or scheduling crawls. AI SEO agents make autonomous decisions based on analysis, learning, and reasoning. True agents perceive their environment (monitor your site), decide optimal actions (determine best fixes), and execute those actions independently. The distinction: automation follows programmed rules (“when X happens, do Y”), while agents reason through problems (“X happened, which might be caused by A, B, or C; I’ll investigate, determine the cause, and implement the appropriate solution”). Current platforms exist on a spectrum between rule-based automation and true autonomous agency, with fully autonomous agents still an emerging technology.

Can AI agents completely replace human SEO teams?

No—at least not yet. Autonomous SEO agents excel at routine technical optimization, monitoring, and execution of standard best practices. They struggle with strategic decisions requiring business context, brand considerations, and creative problem-solving. Human expertise remains essential for SEO strategy, content direction, competitive positioning, and edge cases requiring judgment. The realistic near-term future: AI agents handle 60-80% of routine technical work, freeing human SEO professionals to focus on strategy, complex problem-solving, and high-value optimizations requiring creativity. Think of agents as tireless assistants handling grunt work, not replacements for strategic thinkers.

How do AI agents avoid making changes that could harm rankings?

Quality AI agent systems implement multiple safeguards: confidence thresholds (only executing fixes above 90-95% certainty), rollback capabilities (automatically reverting changes causing negative outcomes), testing protocols (validating fixes on staging environments before production), and conservative defaults (preferring no action over risky actions when uncertain). Advanced agents also use predictive modeling—simulating likely outcomes before implementing changes and proceeding only when models predict positive results. Most platforms require human approval for high-risk changes affecting homepage, major landing pages, or site-wide configurations. According to Search Engine Journal’s 2024 AI safety report, properly configured AI agents show error rates under 3% with 98%+ of mistakes being minor issues easily corrected.

What technical integrations are required for autonomous SEO agents?

Full autonomy requires integrations with: your CMS (WordPress, Shopify, custom platforms) for content and metadata modifications, hosting/CDN (Cloudflare, AWS, etc.) for infrastructure changes, version control systems (GitHub, GitLab) for code changes, analytics platforms (Google Analytics, Search Console) for performance data, and deployment pipelines for implementing fixes. Many platforms offer pre-built integrations with popular tools, reducing setup complexity. For custom or less common platforms, API development may be needed. Minimum viable autonomous setup typically requires CMS integration plus analytics access—allowing basic optimizations like meta tag updates and schema markup deployment. More advanced automation requires deeper infrastructure access.

How quickly do autonomous agents show measurable SEO improvements?

Timeline depends on optimization types and current site health. AI agents that optimize websites automatically typically show: immediate improvements in technical audit scores (within 24-48 hours as fixes deploy), crawl efficiency gains within 1-2 weeks (as search engines discover improvements), ranking improvements within 4-8 weeks (Google’s typical reassessment timeframe), and traffic/conversion increases within 8-12 weeks (as ranking improvements compound). Sites with significant technical debt see faster initial gains—an agent fixing hundreds of broken links, schema errors, and crawl issues creates immediate measurable impact. Well-optimized sites show more gradual, incremental improvements. Most platforms provide dashboards showing fixes implemented daily and predicted impact timelines for different optimization types.

Are AI SEO agents worth the investment for small businesses and blogs?

For most small sites (under 5,000 pages, limited update frequency), full autonomous SEO agents are overkill. The costs ($200-1,000+/month for basic platforms, $3,000+/month for enterprise solutions) exceed benefits when technical SEO requires minimal ongoing work. Better alternatives for small sites: semi-automated tools like Rank Math Pro or Yoast SEO Premium ($100-200/year) handling basic automation, scheduled monitoring tools like Sitebulb or Ahrefs Site Audit ($50-150/month), or manual quarterly technical audits. Consider autonomous agents when: you publish content daily, manage multiple sites at scale, operate in highly competitive niches where speed matters, or have complex technical requirements. The ROI inflection point typically occurs around 10,000+ pages or 20+ hours monthly spent on routine technical SEO.

Final Thoughts

Technical SEO has always involved repetitive tasks that consume hours of skilled labor—crawling sites, finding issues, determining fixes, implementing changes, and verifying results. Even the best human teams work during business hours, take vacations, and can’t monitor thousands of pages simultaneously.

AI SEO agents fundamentally change this equation. Autonomous systems work 24/7, monitor every page constantly, detect issues within minutes, and implement fixes immediately—creating optimization velocity impossible for human teams regardless of size.

The technology isn’t science fiction anymore. Early autonomous agents already manage schema markup, fix broken links, optimize images, and handle routine technical maintenance for thousands of sites. Within 2-3 years, fully autonomous systems will manage complete technical SEO strategies with minimal human oversight.

The competitive implications are stark. Sites using [autonomous SEO agents](https://aiseojournal.net/ai-for-technical-seo/) optimize continuously while competitors run monthly audits. Issues get fixed in minutes versus weeks. Optimization cycles that once took months now complete in days.

Start preparing now. Begin with semi-autonomous tools handling specific tasks. Build trust in AI decision-making. Develop integration infrastructure. Establish monitoring and verification processes.

The sites dominating search results in 2027 won’t be those with the largest SEO teams—they’ll be those leveraging AI agents for continuous, autonomous optimization that never sleeps, never misses an issue, and constantly improves without human direction.

Technical SEO is becoming a machine-learning problem, not a human labor problem. The question isn’t whether to adopt autonomous agents, but how quickly you can implement them before competitors leave you permanently behind.

Your move.

AI SEO Agents: Autonomous Optimization Intelligence Dashboard

[Charts not reproduced: AI Agent Adoption & Impact Statistics (2024-2025); AI SEO Agent Evolution Timeline; AI Agent Performance Impact Metrics]

🔄 AI Agent Capabilities

Real-Time Monitoring Capabilities

Continuous monitoring includes: Status code tracking, robots.txt changes, canonical tag updates, Core Web Vitals, schema validation, and mobile usability checks—all in real-time.

Autonomous Fix Categories

- ✓ Schema markup generation and deployment (97% accuracy)

- ✓ Broken link detection and redirect creation (95% success rate)

- ⚠ Meta tag optimization (requires approval for high-traffic pages)

- ✓ Image alt text generation (92% quality score)

- ⚠ URL structure optimization (human oversight recommended)

- ✓ Internal linking improvements (automated contextual analysis)

Source: Performance data compiled from Search Engine Land and MIT Technology Review 2024 autonomous systems research.

AI Agent Performance Tracking

ROI Metrics: Organizations using AI agents report 60-70% reduction in manual SEO workload and 40x faster issue resolution compared to traditional monthly audit cycles.

⚖️ Manual vs AI Agent Technical SEO Comparison

| Capability | Manual SEO | AI Agents | Savings |
|---|---|---|---|
| Site Auditing | Weekly/Monthly | Real-time (24/7) | 95%+ |
| Issue Detection | Days to Weeks | 5-20 Minutes | 99% |
| Fix Implementation | Days (after detection) | Minutes (automated) | 90%+ |
| Schema Generation | 15 min/page | 30 sec/page | 96% |
| Redirect Mapping | 5-10 min/URL | Automated bulk | 85% |
| Strategic Planning | Human expertise | AI-assisted | 0-30% |
| Creative Solutions | Human creativity | Limited | N/A |
| Cost (Monthly) | $3,000-8,000+ | $200-5,000 | 40-70% |

🎯 AI Agent Adoption Readiness by Site Size

Recommendation: AI agents provide clear ROI for sites with 10,000+ pages or organizations spending 20+ hours monthly on technical SEO tasks. Smaller sites benefit from basic automation tools rather than full autonomous agents.

💡 Key Intelligence Findings

- 89% of routine technical SEO fixes now happen automatically in early adopter organizations

- 40x faster issue resolution compared to traditional monthly audit cycles

- 92-96% decision accuracy on routine technical tasks with proper configuration

- 60-70% reduction in SEO team workload, allowing focus on strategy

- 25% of digital marketing tasks will be AI-automated by 2026

- 3-5x competitive advantage for early adopters through continuous optimization